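Configuration Guide: A Full-Mesh VPN Networking Solution Based on WireGuard

1 Objective

This document briefly introduces the open-source VPN protocol WireGuard and Netmaker, an open-source project that builds Full-Mesh networks on top of WireGuard. It covers the basic concepts of both and the specific steps for installing and deploying them.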

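2 Overview

2.1 About WireGuard

WireGuard is an open-source VPN protocol created by Jason Donenfeld and others, with a Linux kernel implementation written in C. It is regarded as a next-generation VPN protocol and aims to solve many of the problems that trouble other VPN protocols such as IPSec/IKEv2, OpenVPN, and L2TP. It shares some traits with modern VPN products such as Tinc and MeshBird, namely advanced cryptography and simple configuration.

WireGuard was merged into the Linux mainline in January 2020 for the 5.6 kernel, which means that users of most current Linux distributions get WireGuard out of the box.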

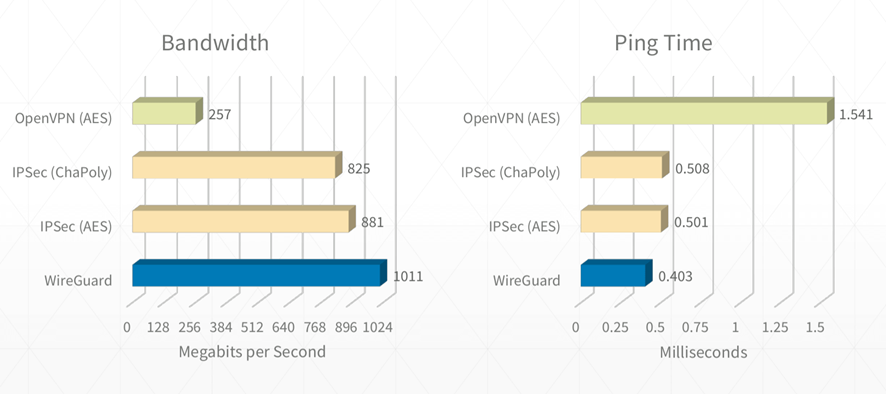

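The figure above is the performance comparison that the WireGuard project publishes against commonly used VPN protocols. The tests used gigabit physical NICs, and the servers ran Linux kernel 4.6.1. WireGuard's throughput comes close to the physical bandwidth of the NIC, and its latency is the lowest among the protocols compared.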

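The main characteristics of WireGuard are summarized below:

- Based on UDP;

- Core concept: cryptokey routing;

- A public key is associated with a list of IP addresses (AllowedIPs);

- Each WG interface has a private key and a list of Peers;

- Each Peer has a public key and a list of IP addresses;

- When sending packets, AllowedIPs acts as a routing table: for each AllowedIPs entry of a Peer, a static route to the WG device is created;

- When receiving packets, AllowedIPs acts as an access control list: a decrypted packet is accepted only if its source IP falls within the AllowedIPs of the Peer that sent it; otherwise it is dropped.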
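The send and receive rules above can be sketched in a few lines of Python. This is an illustrative model of cryptokey routing, not WireGuard's actual implementation: the `Peer` class, the function names, and the placeholder keys and addresses are assumptions made for the example.

```python
import ipaddress
from dataclasses import dataclass, field


@dataclass
class Peer:
    public_key: str                                   # placeholder, not a real key
    allowed_ips: list = field(default_factory=list)   # CIDR strings

    def networks(self):
        return [ipaddress.ip_network(cidr) for cidr in self.allowed_ips]


def select_peer_for_destination(peers, dst_ip):
    """Outbound: pick the peer whose AllowedIPs entry is the longest prefix match."""
    dst = ipaddress.ip_address(dst_ip)
    best_peer, best_len = None, -1
    for peer in peers:
        for net in peer.networks():
            if dst in net and net.prefixlen > best_len:
                best_peer, best_len = peer, net.prefixlen
    return best_peer  # None means no peer owns this destination


def accept_inbound(sender, src_ip):
    """Inbound: accept only if the inner source IP is in the sender's AllowedIPs."""
    src = ipaddress.ip_address(src_ip)
    return any(src in net for net in sender.networks())


# Hypothetical peers for illustration only.
peers = [
    Peer("PEER_B_PUBLIC_KEY", ["10.20.20.2/32"]),
    Peer("PEER_C_PUBLIC_KEY", ["10.20.20.3/32", "192.168.50.0/24"]),
]
```

Calling `select_peer_for_destination(peers, "192.168.50.7")` selects the second peer, while `accept_inbound(peers[0], "10.20.20.3")` rejects a packet whose source address belongs to a different peer.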

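With the basic concepts of WireGuard covered, let us look at how WireGuard can be used in practice to build more complex network topologies. Three typical topologies are introduced here.

- Point-to-point

This is the simplest topology. All nodes are either on the same LAN or directly reachable over the public network, so WireGuard can connect straight to the remote end without a relay node.

- Hub-and-Spoke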

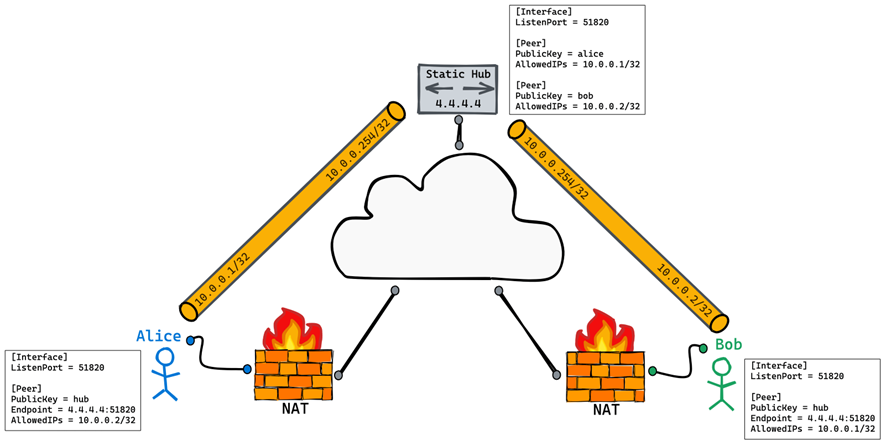

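WireGuard itself has no notion of Server and Client; every node is a Peer. The usual way to build a network is to pick a server with a public IP as the relay node, that is, the VPN gateway, and let it forward all traffic between the other nodes. For ease of understanding, the VPN gateway in this architecture can be thought of as the Server and the other nodes as Clients, even though WireGuard makes no such distinction. An example of this architecture is shown in the figure below.

The drawbacks of this architecture are obvious. As the number of Peers grows, the VPN gateway becomes a bottleneck that can only be relieved by scaling it vertically. Forwarding traffic through the VPN gateway also requires a public IP and substantial bandwidth, which is costly. Finally, from a performance standpoint, relaying through the VPN gateway adds considerable latency and creates a single point of failure.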

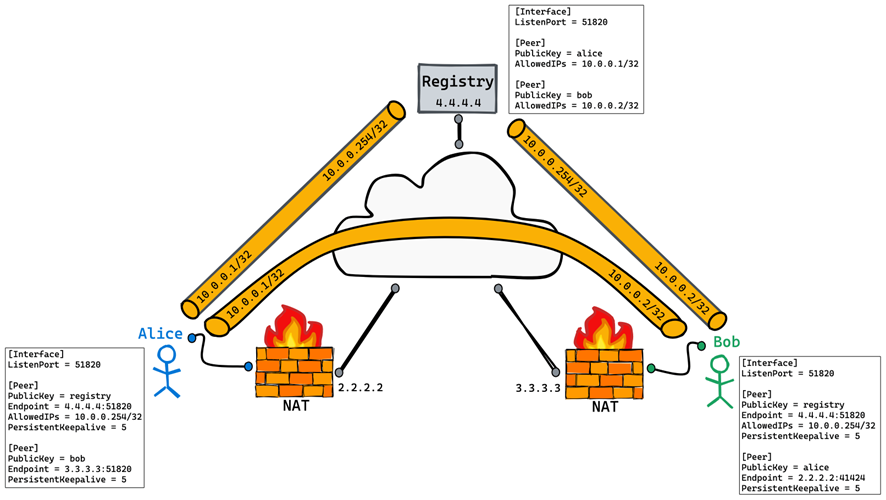

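- Full mesh (FullMesh)

In a full-mesh architecture, every Peer connects directly to every other Peer, with no VPN gateway relaying traffic, which addresses essentially all the shortcomings of the hub-and-spoke architecture. To build a full mesh with WireGuard, the configuration file of each Peer must declare every other Peer. The logic is easy to understand; the difficulty lies in how tedious the configuration is. Once the network grows, a change to a single Peer triggers a large amount of reconfiguration work.

For this reason, many open-source tools have been developed to configure and manage WireGuard FullMesh networks. The rest of this document describes in detail how to build a WireGuard-based FullMesh network with one such tool, Netmaker.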
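To make the configuration burden concrete, the following sketch renders a wg-quick style configuration for every node of a small mesh. The node names, placeholder keys, and helper names are hypothetical; the addresses and port mirror the test environment used later in this document. With n Peers, the mesh needs n × (n − 1) `[Peer]` entries in total.

```python
def full_mesh_configs(nodes, port=51821):
    """Render one wg-quick style config per node, listing every other node as a peer.

    `nodes` maps a node name to a dict with the illustrative fields
    private_key, public_key, vpn_ip, and endpoint.
    """
    configs = {}
    for name, me in nodes.items():
        lines = [
            "[Interface]",
            f"PrivateKey = {me['private_key']}",
            f"Address = {me['vpn_ip']}/24",
            f"ListenPort = {port}",
        ]
        for other_name, other in nodes.items():
            if other_name == name:
                continue  # a node never lists itself as a peer
            lines += [
                "",
                f"# {other_name}",
                "[Peer]",
                f"PublicKey = {other['public_key']}",
                f"AllowedIPs = {other['vpn_ip']}/32",
                f"Endpoint = {other['endpoint']}:{port}",
                "PersistentKeepalive = 20",
            ]
        configs[name] = "\n".join(lines) + "\n"
    return configs


def peer_entry_count(n):
    """Total number of [Peer] sections across all configs of an n-node mesh."""
    return n * (n - 1)


# Placeholder keys; real keys would come from `wg genkey` and `wg pubkey`.
nodes = {
    "node-01": {"private_key": "PRIV_1", "public_key": "PUB_1",
                "vpn_ip": "10.20.20.1", "endpoint": "192.168.4.101"},
    "node-02": {"private_key": "PRIV_2", "public_key": "PUB_2",
                "vpn_ip": "10.20.20.2", "endpoint": "192.168.4.102"},
    "node-03": {"private_key": "PRIV_3", "public_key": "PUB_3",
                "vpn_ip": "10.20.20.3", "endpoint": "192.168.4.103"},
}
configs = full_mesh_configs(nodes)
```

For three nodes this yields 6 `[Peer]` entries; for 10 nodes it is already 90, and adding or re-keying one node touches every other node's file.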

2.2 About Netmaker

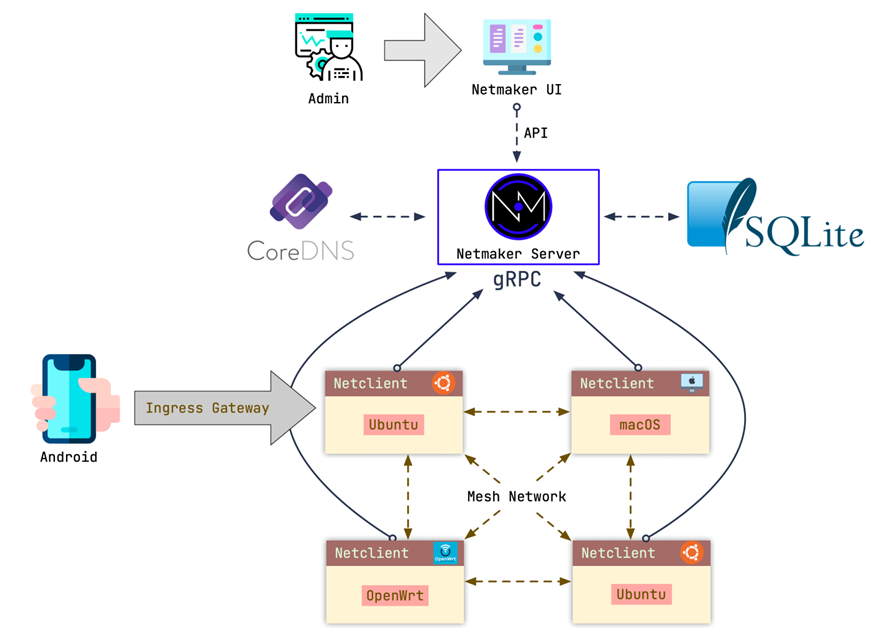

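Netmaker is a visual tool for configuring WireGuard in full-mesh mode. It is feature-rich, supporting NAT traversal, UDP hole punching, and multi-tenancy. Its client runs on nearly all platforms, including Linux, Mac, and Windows, and smartphones (Android and iPhone) can connect through the native WireGuard client.

According to the benchmark results for its latest version, a Netmaker-based WireGuard network is more than 50% faster than other full-mesh VPNs such as Tailscale and ZeroTier.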

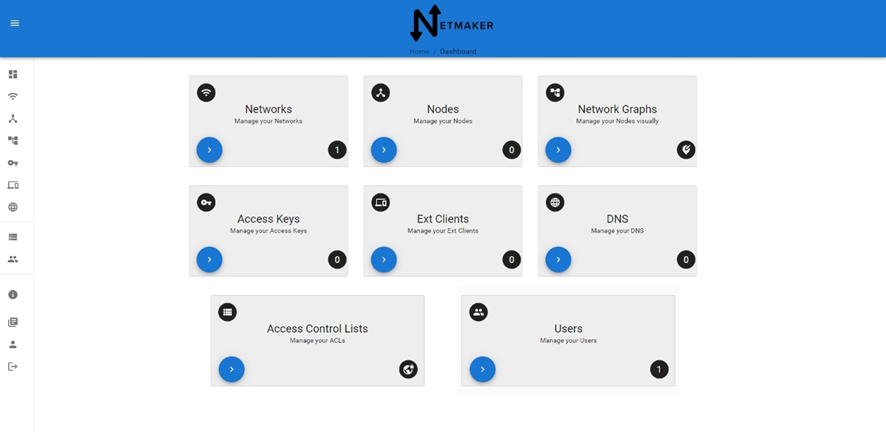

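Netmaker uses a C/S (client/server) architecture. The Netmaker Server contains two core components: a visual interface for managing networks, and a gRPC Server that communicates with clients. A DNS server (CoreDNS) can optionally be deployed to manage private DNS.

The client (netclient) is a single binary that runs on the vast majority of Linux distributions, macOS, and Windows. Its job is to manage WireGuard automatically and update Peer configuration dynamically. The client periodically checks in with the Netmaker Server to keep the local Peer configuration up to date, and then establishes point-to-point connections with all Peers, forming the full mesh.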
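The check-in cycle described above amounts to reconciling the locally configured Peers with the list the server returns. The sketch below shows that idea only; it is not netclient's actual code, and the function name and data layout are assumptions.

```python
def reconcile_peers(current, desired):
    """Compare two {public_key: settings} maps and return the changes needed.

    Returns (to_add, to_remove, to_update):
      to_add    - peers the server knows but the local interface lacks
      to_remove - public keys configured locally but no longer in the network
      to_update - peers whose settings (endpoint, AllowedIPs, ...) changed
    """
    to_add = {k: v for k, v in desired.items() if k not in current}
    to_remove = sorted(k for k in current if k not in desired)
    to_update = {k: v for k, v in desired.items()
                 if k in current and current[k] != v}
    return to_add, to_remove, to_update


# Hypothetical state: node-03 moved to a new endpoint, node-04 joined,
# and node-02 left the network since the last check-in.
current = {
    "PUB_2": {"endpoint": "192.168.4.102:51821", "allowed_ips": ["10.20.20.2/32"]},
    "PUB_3": {"endpoint": "192.168.4.103:51821", "allowed_ips": ["10.20.20.3/32"]},
}
desired = {
    "PUB_3": {"endpoint": "192.168.4.113:51821", "allowed_ips": ["10.20.20.3/32"]},
    "PUB_4": {"endpoint": "192.168.4.104:51821", "allowed_ips": ["10.20.20.4/32"]},
}
to_add, to_remove, to_update = reconcile_peers(current, desired)
```

Because every client runs the same reconciliation against the same server-side list, a single change on the server propagates to all Peers without editing any configuration file by hand.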

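3 System environment

This validation environment uses four virtual machines for the FullMesh networking test: one Ubuntu machine as the Netmaker server and three CentOS machines as clients.

3.1 Server

Operating system: Ubuntu 20.04.4 LTS;

Kernel version: 5.15.0-46-generic;

WireGuard version: 1.0.20220627;

Docker CE version: 20.10.12;

Netmaker version: 0.12.0.

3.2 Clients

Operating system: CentOS Linux release 7.9.2009 (Core);

Kernel version: 3.10.0-1160.66.1.el7.x86_64;

WireGuard version: 1.0.20200513;

NetClient version: 0.12.0.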

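4 Installation and deployment

4.1 Install and configure WireGuard

WireGuard must be installed on every Client in the network. Only the installation steps for node-01 are shown here; the other Clients are installed and configured in the same way.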

4.1.1 Install the kernel module

[root@node-01 ~]# yum install https://dl.fedoraproject.org/pub/epel/epel-release-latest-7.noarch.rpm https://www.elrepo.org/elrepo-release-7.el7.elrepo.noarch.rpm
[root@node-01 ~]# yum install kmod-wireguard wireguard-tools
[root@node-01 ~]# reboot
...
[root@node-01 ~]# modprobe wireguard

4.1.2 Enable IP forwarding

[root@node-01 ~]# cat <<'EOF'>> /etc/sysctl.conf
net.ipv4.ip_forward = 1
net.ipv4.conf.all.proxy_arp = 1
EOF
[root@node-01 ~]# sysctl -p /etc/sysctl.conf
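As a quick sanity check, the two settings appended above can be verified by parsing the file contents. This is a minimal sketch with helper names chosen for illustration; it inspects text only and does not query the running kernel.

```python
# Settings this guide expects to find in /etc/sysctl.conf.
REQUIRED = {
    "net.ipv4.ip_forward": "1",
    "net.ipv4.conf.all.proxy_arp": "1",
}


def parse_sysctl(text):
    """Parse `key = value` lines, skipping blanks and # or ; comments."""
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", ";")) or "=" not in line:
            continue
        key, value = line.split("=", 1)
        settings[key.strip()] = value.strip()  # a later line overrides an earlier one
    return settings


def missing_settings(text, required=REQUIRED):
    """Return the required settings that are absent or have a different value."""
    found = parse_sysctl(text)
    return {k: v for k, v in required.items() if found.get(k) != v}


sample = """
# appended for WireGuard forwarding
net.ipv4.ip_forward = 1
net.ipv4.conf.all.proxy_arp = 1
"""
problems = missing_settings(sample)
```

On a real host, the same check can be run with `missing_settings(open("/etc/sysctl.conf").read())`; an empty result means both entries are present.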

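4.2 Install and configure the Netmaker server

The steps in this subsection only need to be performed on the Netmaker server; no configuration is required on the Clients.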

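4.2.1 Set up the Docker CE container runtime

The commands below apply to a yum-based host. On an Ubuntu server such as the one listed in section 3.1, the same Docker packages are installed with apt from Docker's Ubuntu repository. Note that yum-utils is installed before the repository is added, because it provides yum-config-manager.

root@open-source:~# yum remove -y docker docker-common docker-selinux docker-engine
root@open-source:~# yum install -y yum-utils device-mapper-persistent-data lvm2
root@open-source:~# yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo
root@open-source:~# yum update
root@open-source:~# yum install -y docker-ce docker-ce-cli containerd.io docker-compose-plugin
root@open-source:~# systemctl enable docker
root@open-source:~# systemctl restart docker

4.2.2 Prepare the Docker Compose configuration file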

root@open-source:~# cat docker-compose.yml
version: "3.4"

services:
  netmaker:
    container_name: netmaker
    image: gravitl/netmaker:v0.12.0
    volumes:
      - dnsconfig:/root/config/dnsconfig
      - sqldata:/root/data
    cap_add:
      - NET_ADMIN
      - NET_RAW
      - SYS_MODULE
    sysctls:
      - net.ipv4.ip_forward=1
      - net.ipv4.conf.all.src_valid_mark=1
    restart: always
    environment:
      SERVER_HOST: "192.168.4.44"
      SERVER_HTTP_HOST: "192.168.4.44"
      SERVER_GRPC_HOST: "192.168.4.44"
      COREDNS_ADDR: "192.168.4.44"
      SERVER_API_CONN_STRING: "192.168.4.44:8081"
      SERVER_GRPC_CONN_STRING: "192.168.4.44:50051"
      GRPC_SSL: "off"
      DNS_MODE: "on"
      API_PORT: "8081"
      GRPC_PORT: "50051"
      CLIENT_MODE: "on"
      MASTER_KEY: "36lGTBLyp8itKCYeh7mzTYWej9RgF0"
      CORS_ALLOWED_ORIGIN: "*"
      DISPLAY_KEYS: "on"
      DATABASE: "sqlite"
      NODE_ID: "netmaker-server-1"
      MQ_HOST: "mq"
      HOST_NETWORK: "off"
      MANAGE_IPTABLES: "on"
      PORT_FORWARD_SERVICES: "mq"
      VERBOSITY: "1"
    ports:
      - "51821-51830:51821-51830/udp"
      - "8081:8081"
      - "50051:50051"
  netmaker-ui:
    container_name: netmaker-ui
    depends_on:
      - netmaker
    image: gravitl/netmaker-ui:v0.12.0
    links:
      - "netmaker:api"
    ports:
      - "8082:80"
    environment:
      BACKEND_URL: "http://192.168.4.44:8081"
    restart: always
  coredns:
    depends_on:
      - netmaker
    image: coredns/coredns
    command: -conf /root/dnsconfig/Corefile
    container_name: coredns
    restart: always
    volumes:
      - dnsconfig:/root/dnsconfig
  caddy:
    image: caddy:latest
    container_name: caddy
    restart: unless-stopped
    network_mode: host
    volumes:
      - /root/Caddyfile:/etc/caddy/Caddyfile
      - caddy_data:/data
      - caddy_conf:/config
  mq:
    image: eclipse-mosquitto:2.0.14
    container_name: mq
    restart: unless-stopped
    ports:
      - "1883:1883"
    volumes:
      - /root/mosquitto.conf:/mosquitto/config/mosquitto.conf
      - mosquitto_data:/mosquitto/data
      - mosquitto_logs:/mosquitto/log

volumes:
  caddy_data: {}
  caddy_conf: {}
  sqldata: {}
  dnsconfig: {}
  mosquitto_data: {}
  mosquitto_logs: {}

4.2.3 Start the Netmaker containers

root@open-source:~# docker-compose up -d

Creating network "root_default" with the default driver

Creating mq ...

Creating netmaker ...

Creating caddy ...

Creating netmaker-ui ...

Creating coredns ...

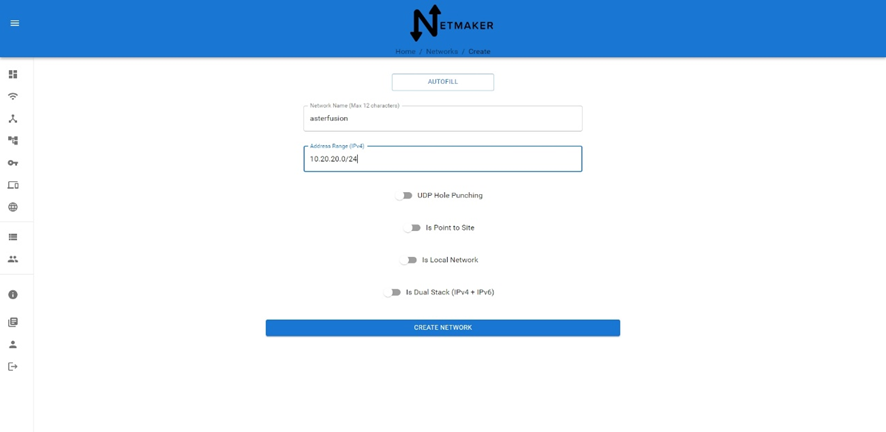

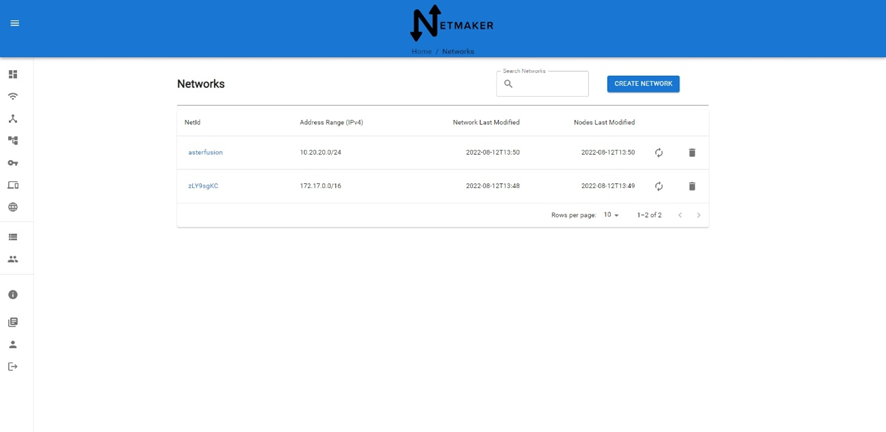

root@open-source:~# 4.2.4 创建Full-Mesh网络

4.3 安装配置Netmaker客户端

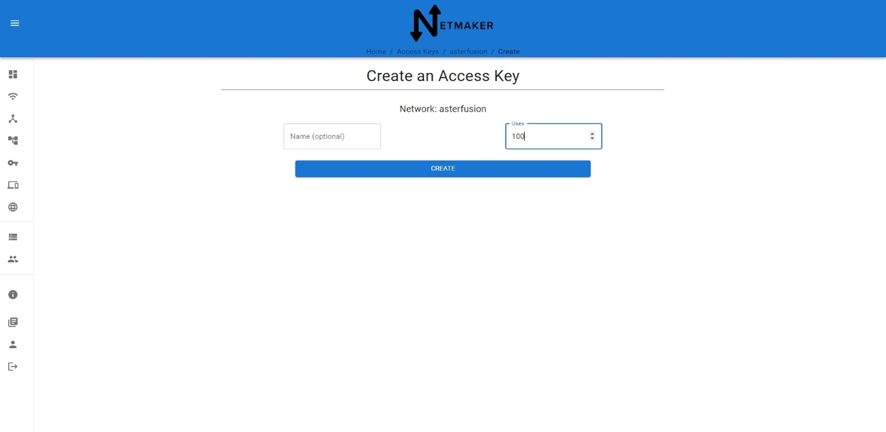

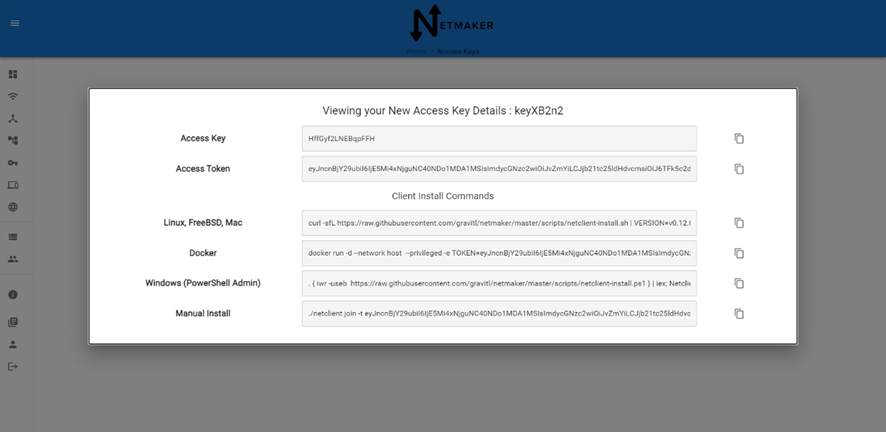

4.3.1 下载Netclient客户端,使用Access Token加入测试网络

Node-01:

[root@node-01 ~]# wget https://github.com/gravitl/netmaker/releases/download/v0.12.0/netclient
[root@node-01 ~]# chmod +x netclient
[root@node-01 ~]# ./netclient join -t eyJncnBjY29ubiI6IjE5Mi4xNjguNC40NDo1MDA1MSIsImdycGNzc2wiOiJvZmYiLCJjb21tc25ldHdvcmsiOiJ6TFk5c2dLQyIsIm5ldHdvcmsiOiJhc3RlcmZ1c2lvbiIsImtleSI6IkhmZkd5ZjJMTkVCcXBGRkgiLCJsb2NhbHJhbmdlIjoiIn0=
2022/08/12 14:34:10 [netclient] joining asterfusion at 192.168.4.44:50051
2022/08/12 14:34:10 [netclient] node created on remote server...updating configs
2022/08/12 14:34:10 [netclient] retrieving peers
2022/08/12 14:34:10 [netclient] starting wireguard
2022/08/12 14:34:10 [netclient] waiting for interface...
2022/08/12 14:34:10 [netclient] interface ready - netclient.. ENGAGE
2022/08/12 14:34:12 [netclient] restarting netclient.service
2022/08/12 14:34:13 [netclient] joined asterfusion

Node-02:

[root@node-02 ~]# ./netclient join -t eyJncnBjY29ubiI6IjE5Mi4xNjguNC40NDo1MDA1MSIsImdycGNzc2wiOiJvZmYiLCJjb21tc25ldHdvcmsiOiJ6TFk5c2dLQyIsIm5ldHdvcmsiOiJhc3RlcmZ1c2lvbiIsImtleSI6IkhmZkd5ZjJMTkVCcXBGRkgiLCJsb2NhbHJhbmdlIjoiIn0=
2022/08/12 14:41:49 [netclient] joining asterfusion at 192.168.4.44:50051
2022/08/12 14:41:49 [netclient] node created on remote server...updating configs
2022/08/12 14:41:49 [netclient] retrieving peers
2022/08/12 14:41:49 [netclient] starting wireguard
2022/08/12 14:41:49 [netclient] waiting for interface...
2022/08/12 14:41:49 [netclient] interface ready - netclient.. ENGAGE
2022/08/12 14:41:50 [netclient] restarting netclient.service
2022/08/12 14:41:52 [netclient] joined asterfusion

Node-03:

[root@node-03 ~]# ./netclient join -t eyJncnBjY29ubiI6IjE5Mi4xNjguNC40NDo1MDA1MSIsImdycGNzc2wiOiJvZmYiLCJjb21tc25ldHdvcmsiOiJ6TFk5c2dLQyIsIm5ldHdvcmsiOiJhc3RlcmZ1c2lvbiIsImtleSI6IkhmZkd5ZjJMTkVCcXBGRkgiLCJsb2NhbHJhbmdlIjoiIn0=
2022/08/12 14:42:06 [netclient] joining asterfusion at 192.168.4.44:50051
2022/08/12 14:42:06 [netclient] node created on remote server...updating configs
2022/08/12 14:42:06 [netclient] retrieving peers
2022/08/12 14:42:06 [netclient] starting wireguard
2022/08/12 14:42:06 [netclient] waiting for interface...
2022/08/12 14:42:06 [netclient] interface ready - netclient.. ENGAGE
2022/08/12 14:42:08 [netclient] restarting netclient.service
2022/08/12 14:42:09 [netclient] joined asterfusion
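The string passed to `netclient join -t` starts with `eyJ`, which is how base64-encoded JSON beginning with `{"` looks, so a token can be inspected before it is used. The sketch below assumes that format for v0.12.0 tokens. The field names `grpcconn`, `grpcssl`, and `network` are read off a decoded token and should be treated as assumptions, and the sample token is built locally rather than copied from the transcript.

```python
import base64
import json


def decode_access_token(token):
    """Decode a base64-encoded JSON token into a dict (assumed token format)."""
    padded = token + "=" * (-len(token) % 4)  # tolerate stripped '=' padding
    return json.loads(base64.b64decode(padded))


# Stand-in token with illustrative values matching this test environment.
sample_fields = {
    "grpcconn": "192.168.4.44:50051",
    "grpcssl": "off",
    "network": "asterfusion",
}
sample_token = base64.b64encode(json.dumps(sample_fields).encode()).decode()

decoded = decode_access_token(sample_token)
print(decoded["network"], "via", decoded["grpcconn"])
```

Since the token carries the server address and the join key in a reversible encoding, it is worth handling it like a credential rather than pasting it into shared logs.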

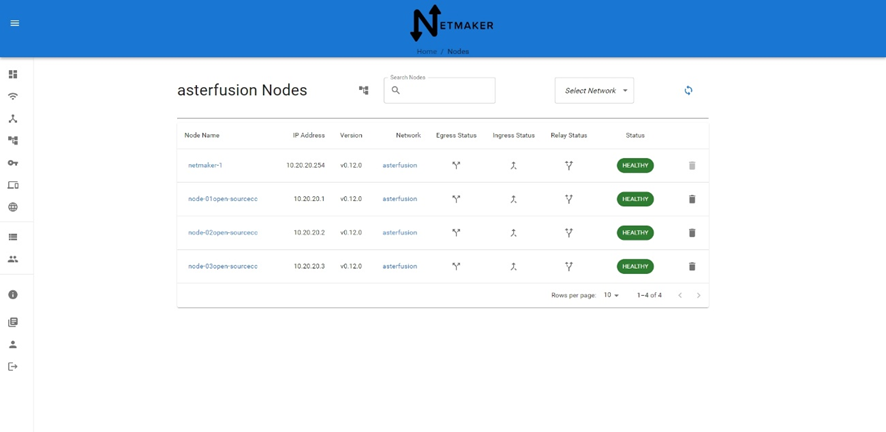

4.3.2 在控制器界面中检查网络状态

4.4 Network test

4.4.1 Check the WG information and routing information on the client nodes

Node-01:

[root@node-01 ~]# wg
interface: nm-asterfusion
  public key: qmPw+9r2+S94EjMAkNwMm9YV8ZDoSay8Fyi1HgyqFlg=
  private key: (hidden)
  listening port: 51821

peer: KnwIOGgWWCvXDqgrWfpc0xvlSf7GN/LjvUeJlpJhMy0=
  endpoint: 192.168.4.103:51821
  allowed ips: 10.20.20.3/32
  latest handshake: 2 seconds ago
  transfer: 304 B received, 272 B sent
  persistent keepalive: every 20 seconds

peer: rsAp8gC+vW63ET7YPpFT2oWMgrelFM1nO+9pAS2KLmQ=
  endpoint: 192.168.4.102:51821
  allowed ips: 10.20.20.2/32
  latest handshake: 5 seconds ago
  transfer: 304 B received, 272 B sent
  persistent keepalive: every 20 seconds

interface: nm-zLY9sgKC
  public key: B9QORmZw9PmhsHwDmZzxyzXZKxMTmx2qCAmlRWIzGG0=
  private key: (hidden)
  listening port: 55829
[root@node-01 ~]# route -n
Kernel IP routing table
Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
0.0.0.0         192.168.4.1     0.0.0.0         UG    100    0        0 ens192
10.20.20.0      0.0.0.0         255.255.255.0   U     0      0        0 nm-asterfusion
10.20.20.2      0.0.0.0         255.255.255.255 UH    0      0        0 nm-asterfusion
10.20.20.3      0.0.0.0         255.255.255.255 UH    0      0        0 nm-asterfusion
172.17.0.0      0.0.0.0         255.255.0.0     U     0      0        0 docker0
192.168.4.0     0.0.0.0         255.255.255.0   U     100    0        0 ens192

Node-02:

[root@node-02 ~]# wg
interface: nm-asterfusion
  public key: rsAp8gC+vW63ET7YPpFT2oWMgrelFM1nO+9pAS2KLmQ=
  private key: (hidden)
  listening port: 51821

peer: KnwIOGgWWCvXDqgrWfpc0xvlSf7GN/LjvUeJlpJhMy0=
  endpoint: 192.168.4.103:51821
  allowed ips: 10.20.20.3/32
  latest handshake: 10 seconds ago
  transfer: 304 B received, 272 B sent
  persistent keepalive: every 20 seconds

peer: qmPw+9r2+S94EjMAkNwMm9YV8ZDoSay8Fyi1HgyqFlg=
  endpoint: 192.168.4.101:51821
  allowed ips: 10.20.20.1/32
  latest handshake: 13 seconds ago
  transfer: 92 B received, 180 B sent
  persistent keepalive: every 20 seconds

interface: nm-zLY9sgKC
  public key: asu7DXf5slyqN7xjzo1BQ+OinxbG2ECgf38SSY6u9xM=
  private key: (hidden)
  listening port: 37758
[root@node-02 ~]# route -n
Kernel IP routing table
Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
0.0.0.0         192.168.4.1     0.0.0.0         UG    100    0        0 ens192
10.20.20.0      0.0.0.0         255.255.255.0   U     0      0        0 nm-asterfusion
10.20.20.1      0.0.0.0         255.255.255.255 UH    0      0        0 nm-asterfusion
10.20.20.3      0.0.0.0         255.255.255.255 UH    0      0        0 nm-asterfusion
172.17.0.0      0.0.0.0         255.255.0.0     U     0      0        0 docker0
192.168.4.0     0.0.0.0         255.255.255.0   U     100    0        0 ens192

Node-03:

[root@node-03 ~]# wg
interface: nm-asterfusion
  public key: KnwIOGgWWCvXDqgrWfpc0xvlSf7GN/LjvUeJlpJhMy0=
  private key: (hidden)
  listening port: 51821

peer: qmPw+9r2+S94EjMAkNwMm9YV8ZDoSay8Fyi1HgyqFlg=
  endpoint: 192.168.4.101:51821
  allowed ips: 10.20.20.1/32
  latest handshake: 15 seconds ago
  transfer: 92 B received, 180 B sent
  persistent keepalive: every 20 seconds

peer: rsAp8gC+vW63ET7YPpFT2oWMgrelFM1nO+9pAS2KLmQ=
  endpoint: 192.168.4.102:51821
  allowed ips: 10.20.20.2/32
  latest handshake: 15 seconds ago
  transfer: 92 B received, 180 B sent
  persistent keepalive: every 20 seconds

interface: nm-zLY9sgKC
  public key: tKLd9l1H8NmZmvv5C8amrt5FJNGc/rmfv8pxY1eWdis=
  private key: (hidden)
  listening port: 44378
[root@node-03 ~]# route -n
Kernel IP routing table
Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
0.0.0.0         192.168.4.1     0.0.0.0         UG    100    0        0 ens192
10.20.20.0      0.0.0.0         255.255.255.0   U     0      0        0 nm-asterfusion
10.20.20.1      0.0.0.0         255.255.255.255 UH    0      0        0 nm-asterfusion
10.20.20.2      0.0.0.0         255.255.255.255 UH    0      0        0 nm-asterfusion
172.17.0.0      0.0.0.0         255.255.0.0     U     0      0        0 docker0
192.168.4.0     0.0.0.0         255.255.255.0   U     100    0        0 ens192
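The three outputs above can be checked mechanically: in a full mesh, each node must list every other node's VPN address under `allowed ips`. The parser below is a minimal sketch for the plain `wg` output format shown here; the function names and placeholder keys are assumptions made for the example.

```python
def parse_wg_show(text):
    """Return {interface_name: [peer dicts]} parsed from plain `wg` output."""
    interfaces, current_iface, current_peer = {}, None, None
    for raw in text.splitlines():
        line = raw.strip()
        if not line or ":" not in line:
            continue
        # Split on the first colon only, so "endpoint: 1.2.3.4:51821" stays intact.
        key, value = (part.strip() for part in line.split(":", 1))
        if key == "interface":
            current_iface, current_peer = value, None
            interfaces[current_iface] = []
        elif key == "peer" and current_iface is not None:
            current_peer = {"public key": value}
            interfaces[current_iface].append(current_peer)
        elif current_peer is not None:
            current_peer[key] = value  # endpoint, allowed ips, transfer, ...
    return interfaces


def is_full_mesh(peers_seen_by_node):
    """peers_seen_by_node maps each node's VPN address to the set it lists as peers."""
    everyone = set(peers_seen_by_node)
    return all(seen == everyone - {me} for me, seen in peers_seen_by_node.items())


# Abridged node-01 output with placeholder keys.
sample = """\
interface: nm-asterfusion
  public key: NODE_1_KEY
  private key: (hidden)
  listening port: 51821

peer: NODE_3_KEY
  endpoint: 192.168.4.103:51821
  allowed ips: 10.20.20.3/32

peer: NODE_2_KEY
  endpoint: 192.168.4.102:51821
  allowed ips: 10.20.20.2/32
"""
node1_peers = {p["allowed ips"] for p in parse_wg_show(sample)["nm-asterfusion"]}
mesh_view = {
    "10.20.20.1/32": node1_peers,
    "10.20.20.2/32": {"10.20.20.1/32", "10.20.20.3/32"},
    "10.20.20.3/32": {"10.20.20.1/32", "10.20.20.2/32"},
}
```

Here `is_full_mesh(mesh_view)` holds because each of the three nodes lists exactly the other two, which matches the `/32` host routes in the routing tables.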

4.4.2 Ping test between clients using their VPN-subnet IPs

[root@node-01 ~]# ping 10.20.20.1
PING 10.20.20.1 (10.20.20.1) 56(84) bytes of data.
64 bytes from 10.20.20.1: icmp_seq=1 ttl=64 time=0.046 ms
64 bytes from 10.20.20.1: icmp_seq=2 ttl=64 time=0.041 ms
^C
--- 10.20.20.1 ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 999ms
rtt min/avg/max/mdev = 0.041/0.043/0.046/0.007 ms
[root@node-01 ~]# ping 10.20.20.2
PING 10.20.20.2 (10.20.20.2) 56(84) bytes of data.
64 bytes from 10.20.20.2: icmp_seq=1 ttl=64 time=0.637 ms
64 bytes from 10.20.20.2: icmp_seq=2 ttl=64 time=0.912 ms
^C
--- 10.20.20.2 ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 1001ms
rtt min/avg/max/mdev = 0.637/0.774/0.912/0.140 ms
[root@node-01 ~]# ping 10.20.20.3
PING 10.20.20.3 (10.20.20.3) 56(84) bytes of data.
64 bytes from 10.20.20.3: icmp_seq=1 ttl=64 time=0.738 ms
64 bytes from 10.20.20.3: icmp_seq=2 ttl=64 time=0.760 ms
^C
--- 10.20.20.3 ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 1000ms
rtt min/avg/max/mdev = 0.738/0.749/0.760/0.011 ms
