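Configuration Guide: Common Performance Testing Tools for HPC Scenarios

1 Overview of HPC Test Schemes

This guide describes several test schemes for high-performance computing (HPC) scenarios:

- E2E forwarding test

Measures the forwarding latency and bandwidth of the HPC solution in an end-to-end (E2E) scenario. The test can use the Mellanox IB packet-generation tools, Qperf (which supports RDMA), and the Perftest suite, sweeping message sizes from 2 to 8388608 bytes.

- MPI benchmark test

MPI benchmarks are commonly used to evaluate HPC network performance. This test uses OSU Micro-Benchmarks to measure the forwarding latency and bandwidth of the HPC solution in an MPI scenario, sweeping message sizes from 2 to 8388608 bytes.

- Linpack performance test

Linpack is commonly used to measure the floating-point performance of HPC systems. This test uses HPL and HPCG to evaluate server performance under the HPC solution.

- HPC application test

This test runs HPC applications in different scenarios, using WRF and LAMMPS to evaluate how efficiently HPC applications run in parallel under different HPC solutions.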
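
The E2E and MPI sweeps above both run from 2 to 8388608 bytes. Assuming the message size doubles at each step (the endpoints are 2^1 and 2^23, and the `-a` option of the Perftest tools sweeps powers of two), the full list of sizes can be enumerated with a short sketch. The function name is illustrative and not part of any tool.

```python
def message_sizes(start=2, stop=8388608):
    """Return the message sizes from start to stop, doubling at each step."""
    sizes = []
    size = start
    while size <= stop:
        sizes.append(size)
        size *= 2
    return sizes


sizes = message_sizes()
# Number of test points, then the smallest and largest message size in bytes.
print(len(sizes), sizes[0], sizes[-1])
```

Knowing the number of test points in advance helps when estimating how long a full sweep takes or when checking that a result table is complete.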

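2 Test Tools by Scenario

Table 1 lists the test software and versions used in each HPC test scenario:

| Scenario | Tool | Version |
| --- | --- | --- |
| E2E forwarding test | Mellanox IB toolkit | Same as the driver version |
| E2E forwarding test | Qperf | 0.4.9 |
| E2E forwarding test | Perftest | V4.5-0.20 |
| MPI benchmark test | OSU Micro-Benchmarks | V5.6.3 |
| Linpack performance test | HPL | V2.3 |
| Linpack performance test | HPCG | V3.1 |
| HPC application test | WRF | V4.0 |
| HPC application test | LAMMPS | LAMMPS (3 Mar 2020) |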

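3 E2E Test Tools: Deployment and Introduction

3.1 Mellanox IB Toolkit

Install the MLNX_OFED driver for the Mellanox NIC on the server. The driver ships with the IB test toolkit, which includes network performance tools such as ib_read_lat, ib_send_lat, and ib_write_lat. For the detailed driver installation procedure, see "Solution - Mellanox NIC Driver Installation" published by the joint lab. Table 2 lists the main tests in the IB toolkit:

| RDMA operation | Bandwidth test program | Latency test program |
| --- | --- | --- |
| RDMA Send | ib_send_bw | ib_send_lat |
| RDMA Read | ib_read_bw | ib_read_lat |
| RDMA Write | ib_write_bw | ib_write_lat |

3.1.1 Installing the MLNX_OFED NIC Driver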

[root@server ~]# wget \

https://content.mellanox.com/ofed/MLNX_OFED-5.0-1.0.0.0/MLNX_OFED_LINUX-5.0-1.0.0.0-rhel7.8-x86_64.tgz

[root@server ~]# tar -zxvf MLNX_OFED_LINUX-5.0-1.0.0.0-rhel7.8-x86_64.tgz

[root@server ~]# cd MLNX_OFED_LINUX-5.0-1.0.0.0-rhel7.8-x86_64

[root@server ~]# ./mlnx_add_kernel_support.sh -m \

/root/MLNX_OFED_LINUX-5.0-1.0.0.0-rhel7.8-x86_64 -v

[root@server ~]# tar xzvf \

MLNX_OFED_LINUX-5.0-1.0.0.0-rhel7.8-x86_64-ext.tgz

[root@server ~]# cd MLNX_OFED_LINUX-5.0-1.0.0.0-rhel7.8-x86_64-ext

[root@server ~]# ./mlnxofedinstall

3.1.2 Checking the NIC and Driver Status

[root@server ~]# /etc/init.d/openibd start

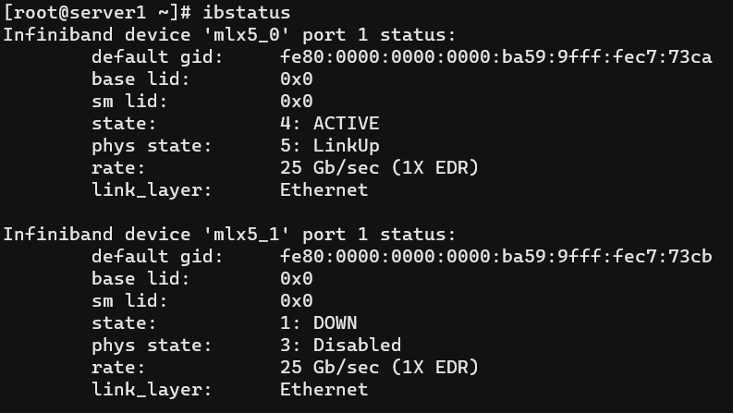

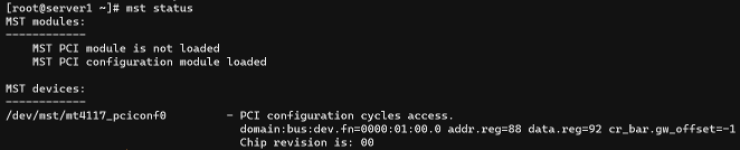

[root@server ~]# ibstatus

[root@server ~]# systemctl start mst

[root@server ~]# mst status

3.1.3 Testing with the IB Toolkit

[root@server1 ~]# ib_send_bw -R -d mlx5_2 -F --report_gbits -a

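[root@server2 ~]# ib_send_bw -a -R -x 5 -d mlx5_2 -F -f 2 10.230.1.11

3.2 Qperf

Like iperf and netperf, qperf measures the bandwidth and latency between two nodes. Unlike them, it supports RDMA. The performance it reports is slightly lower than that of the Mellanox IB tools, and it can be used to test domestically produced RDMA NICs. Table 3 lists the main Qperf tests:

| RDMA operation | Bandwidth test program | Latency test program |
| --- | --- | --- |
| RDMA Send | rc_bw | rc_lat |
| RDMA Read | rc_rdma_read_bw | rc_rdma_read_lat |
| RDMA Write | rc_rdma_write_bw | rc_rdma_write_lat |

3.2.1 Installing Qperf

[root@server ~]# yum -y install qperf*

3.2.2 Qperf RDMA Tests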

Server:

[root@server ~]# qperf

Client:

send/receive:

qperf -cm1 -oo msg_size:1:64K:*2 10.230.1.11 rc_lat

qperf -cm1 -oo msg_size:1:64K:*2 10.230.1.11 rc_bw

write/read:

qperf -cm1 -oo msg_size:1:64K:*2 10.230.1.11 rc_rdma_write_lat

qperf -cm1 -oo msg_size:1:64K:*2 10.230.1.11 rc_rdma_write_bw

3.3 Perftest

Perftest is a set of test programs built on uverbs that serves as a benchmark suite for RDMA performance. It can be used for hardware and software tuning as well as functional testing. Table 4 lists the tests in the Perftest package:

| RDMA operation | Bandwidth test program | Latency test program |
| --- | --- | --- |
| Send | ib_send_bw | ib_send_lat |
| RDMA Read | ib_read_bw | ib_read_lat |
| RDMA Write | ib_write_bw | ib_write_lat |
| RDMA Atomic | ib_atomic_bw | ib_atomic_lat |
| Native Ethernet (raw Ethernet test) | raw_ethernet_bw | raw_ethernet_lat |

3.3.1 Installing Perftest

[root@Server ~]# git clone https://github.com/linux-rdma/perftest.git

[root@Server ~]# cd perftest

[root@Server perftest]# ./autogen.sh

[root@Server perftest]# ./configure

[root@Server perftest]# make

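[root@Server perftest]# make install

3.3.2 Perftest RDMA Tests

[root@Server ~]# ib_read_lat -R -d rdmap2s0f0 -F --report_gbits -a

[root@Server ~]# ib_read_lat -a -R -x 5 -d rdmap3s0f0 -F -f 2 10.230.1.11

4 MPI Test Tools: Deployment and Introduction

Install OSU MPI Benchmarks, a tool for evaluating MPI communication efficiency, on the server. Tests run in two modes, point-to-point communication and networked (multi-node) communication, and measure bandwidth and latency by running MPI in various patterns.

4.1 Installing OSU MPI Benchmarks

[root@server ~]# yum -y install openmpi3 openmpi3-devel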

[root@server ~]# wget \

http://mvapich.cse.ohio-state.edu/download/mvapich/osu-micro-benchmarks-5.6.3.tar.gz

[root@server ~]# tar zxvf osu-micro-benchmarks-5.6.3.tar.gz

[root@server ~]# cd osu-micro-benchmarks-5.6.3

[root@server ~]# ./configure

[root@server ~]# make -j

[root@server ~]# make install

[root@server ~]# mkdir /osu

[root@server ~]# cp -rf \

/usr/mpi/gcc/openmpi-4.0.3rc4/tests/osu-micro-benchmarks-5.3.2/* /osu

4.2 Using OSU MPI Benchmarks

Bandwidth test:

[root@Server ~]# mpirun -np 2 --allow-run-as-root \

--host 10.230.1.11,10.230.1.12 /osu_bw

Latency test:

[root@Server ~]# mpirun -np 2 --allow-run-as-root \

--host 10.230.1.11,10.230.1.12 /osu_latency

5 Linpack Test Tools: Deployment and Introduction

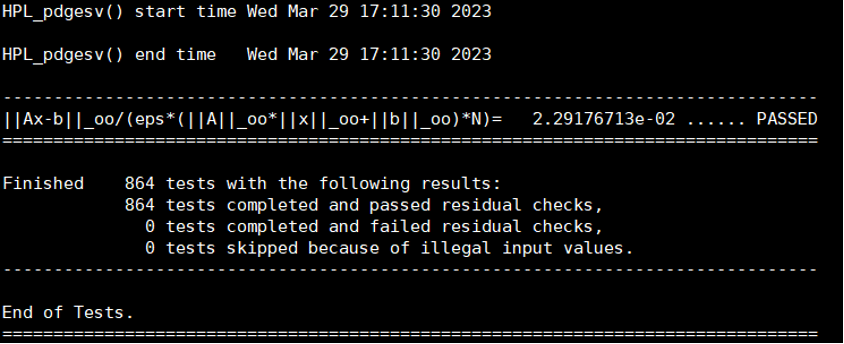

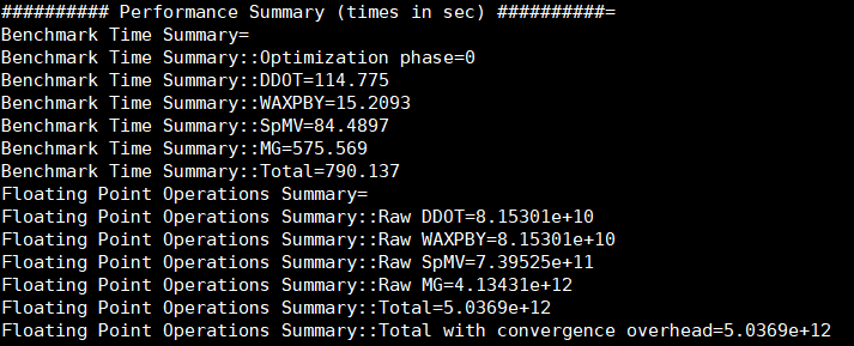

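Linpack is now the most widely used tool internationally for measuring the floating-point performance of HPC systems. It focuses on solving systems of linear equations and mainly stresses the theoretical performance of the processors. Linpack tests fall into three categories: Linpack100, Linpack1000, and HPL. HPCG uses a more complex computation based on differential equations and puts more weight on real-world performance, so the HPCG result of any HPC system is much lower than its Linpack result.

5.1 Installing and Using HPL

5.1.1 Preparing the Base Environment

Before installing HPL, set up the GCC/Fortran77 compilers, the BLAS/CBLAS/ATLAS libraries, and the MPICH parallel environment.

GCC/Fortran77: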

[root@Server ~]# yum -y install gcc gcc-gfortran

BLAS:

[root@Server ~]# mkdir ~/prepare && cd ~/prepare

[root@Server prepare]# wget http://www.netlib.org/blas/blas-3.8.0.tgz

[root@Server prepare]# tar -xzf blas-3.8.0.tgz

[root@Server prepare]# cd BLAS-3.8.0

[root@Server BLAS-3.8.0]# make

[root@Server BLAS-3.8.0]# ar rv libblas.a *.o

[root@Server BLAS-3.8.0]# cd ~/prepare

[root@Server prepare]# wget http://www.netlib.org/blas/blast-forum/cblas.tgz

[root@Server prepare]# tar -xzf cblas.tgz

[root@Server prepare]# cd CBLAS

[root@Server CBLAS]# cp ~/prepare/BLAS-3.8.0/blas_LINUX.a ./

[root@Server CBLAS]# vim Makefile.in

BLLIB = ~/prepare/BLAS-3.8.0/blas_LINUX.a

[root@Server CBLAS]# make

[root@Server CBLAS]# ./testing/xzcblat1

MPICH2:

[root@Server ~]# cd ~/prepare

[root@Server prepare]# wget \

http://www.mpich.org/static/downloads/3.2.1/mpich-3.2.1.tar.gz

[root@Server prepare]# tar xzf mpich-3.2.1.tar.gz

[root@Server prepare]# cd mpich-3.2.1

[root@Server mpich-3.2.1]# ./configure --disable-cxx

[root@Server mpich-3.2.1]# make

[root@Server mpich-3.2.1]# make install

[root@Server mpich-3.2.1]# vi machinefile

[root@Server mpich-3.2.1]# mpiexec -f machinefile -n 3 hostname && mpiexec -n 5 -f machinefile ./examples/cpi
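
The file passed to `mpiexec -f` is a plain-text host list, not a directory. As a minimal sketch, the helper below renders such a file, assuming the `host:processes` line format accepted by MPICH's Hydra launcher. The function name, the two host addresses (borrowed from the mpirun examples elsewhere in this guide), and the process counts are illustrative assumptions.

```python
def render_machinefile(hosts):
    """Return machinefile text with one 'host:processes' entry per line."""
    lines = [f"{host}:{procs}" for host, procs in hosts.items()]
    return "\n".join(lines) + "\n"


# Hypothetical two-node cluster; replace with the real node names or IPs.
example_hosts = {"10.230.1.11": 2, "10.230.1.12": 2}
machinefile_text = render_machinefile(example_hosts)
print(machinefile_text, end="")

# To save the file next to the MPICH sources, uncomment:
# with open("machinefile", "w") as f:
#     f.write(machinefile_text)
```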

5.1.2 Installing HPL and Running a Parallel Test

[root@Server ~]# cd ~/prepare

[root@Server prepare]# cp CBLAS/lib/* /usr/local/lib

[root@Server prepare]# cp BLAS-3.8.0/blas_LINUX.a /usr/local/lib

[root@Server prepare]# wget http://www.netlib.org/benchmark/hpl/hpl-2.3.tar.gz

[root@Server prepare]# tar -xzf hpl-2.3.tar.gz

[root@Server prepare]# cd hpl-2.3

[root@Server hpl-2.3]# cp setup/Make.Linux_PII_CBLAS ./

[root@Server hpl-2.3]# cp include/* /usr/local/include

[root@Server hpl-2.3]# vim Make.top

arch = Linux_PII_CBLAS

[root@Server hpl-2.3]# vim Makefile

arch = Linux_PII_CBLAS

[root@Server hpl-2.3]# vim Make.Linux_PII_CBLAS

LN_S = ln -sf

ARCH = Linux_PII_CBLAS

TOPdir = /root/prepare/hpl-2.3

MPdir = /usr/local

MPlib = $(MPdir)/lib/libmpich.so

LAdir = /usr/local/lib

LAlib = $(LAdir)/cblas_LINUX.a $(LAdir)/blas_LINUX.a

CC = /usr/local/bin/mpicc

LINKER = /usr/local/bin/mpif77

[root@Server hpl-2.3]# make arch=Linux_PII_CBLAS

[root@Server hpl-2.3]# cd bin/Linux_PII_CBLAS

[root@Server Linux_PII_CBLAS]# mpirun -np 4 ./xhpl

5.1.3 Understanding the HPL Configuration File

The results of an HPL run depend on the parameters in the configuration file.

[root@Server ~]# cd /root/prepare/hpl-2.3/bin/Linux_PII_CBLAS

[root@server1 Linux_PII_CBLAS]# cat HPL.dat

HPLinpack benchmark input file

Innovative Computing Laboratory, University of Tennessee

HPL.out output file name (if any)

6 device out (6=stdout,7=stderr,file)

4 # of problems sizes (N)

29 30 34 35 Ns

4 # of NBs

1 2 3 4 NBs

0 PMAP process mapping (0=Row-,1=Column-major)

3 # of process grids (P x Q)

2 1 4 Ps

2 4 1 Qs

16.0 threshold

3 # of panel fact

0 1 2 PFACTs (0=left, 1=Crout, 2=Right)

2 # of recursive stopping criterium

2 4 NBMINs (>= 1)

1 # of panels in recursion

2 NDIVs

3 # of recursive panel fact.

0 1 2 RFACTs (0=left, 1=Crout, 2=Right)

1 # of broadcast

0 BCASTs (0=1rg,1=1rM,2=2rg,3=2rM,4=Lng,5=LnM)

1 # of lookahead depth

0 DEPTHs (>=0)

2 SWAP (0=bin-exch,1=long,2=mix)

64 swapping threshold

0 L1 in (0=transposed,1=no-transposed) form

0 U in (0=transposed,1=no-transposed) form

1 Equilibration (0=no,1=yes)

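8 memory alignment in double (> 0)

- Lines 5-6: N specifies how many matrices are solved and how large they are. The larger N is, the greater the share of useful computation and the higher the measured floating-point performance. A larger matrix also consumes more memory, however, and if physical memory runs short and the system has to fall back on swap, performance drops sharply. A matrix that occupies about 80% of total system memory works best, that is, N×N×8 = total system memory × 80%.

- Lines 7-8: The best NB value is found mainly through actual testing. NB is generally less than 384, and NB×8 must be a multiple of the cache line size.

- Lines 10-12: P is the number of processors in the horizontal direction and Q is the number in the vertical direction. P×Q is the two-dimensional processor grid, and P×Q = number of CPUs in the system = number of processes. In general, one process per CPU gives the best performance.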
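
The three rules above can be turned into a small calculator. The sketch below is a hedged illustration: the 64 GiB memory size, NB = 192, and the 64-byte cache line are assumed example values, and rounding N down to a multiple of NB is a common practice that this guide does not itself require.

```python
import math


def hpl_problem_size(total_mem_bytes, nb, mem_fraction=0.8):
    """Largest multiple of NB whose N x N matrix of 8-byte doubles fits the budget."""
    budget_elements = int(total_mem_bytes * mem_fraction) // 8
    n_max = math.isqrt(budget_elements)
    return (n_max // nb) * nb


def nb_is_cache_aligned(nb, cache_line_bytes=64):
    """Check that NB * 8 bytes is a whole number of cache lines."""
    return (nb * 8) % cache_line_bytes == 0


def process_grids(num_procs):
    """All (P, Q) pairs with P * Q equal to the number of processes."""
    return [(p, num_procs // p) for p in range(1, num_procs + 1) if num_procs % p == 0]


total_mem = 64 * 1024**3  # hypothetical node with 64 GiB of memory
nb = 192                  # hypothetical block size below 384
n = hpl_problem_size(total_mem, nb)
print("N =", n)
print("NB cache aligned:", nb_is_cache_aligned(nb))
print("P x Q grids for 4 processes:", process_grids(4))
```

For four processes the candidate grids are 1×4, 2×2, and 4×1, which are the three grids listed in the Ps and Qs lines of the sample HPL.dat.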

5.2 HPCG

5.2.1 Preparing the Base Environment

Before installing HPCG, set up a C++ compiler and the MPICH parallel environment.

C++ compiler:

[root@server1 ~]# c++ -v

Using built-in specs.

COLLECT_GCC=c++

Target: x86_64-redhat-linux

gcc version 4.8.5 20150623 (Red Hat 4.8.5-44) (GCC)

MPICH2:

[root@Server ~]# cd ~/prepare

[root@Server prepare]# wget \

http://www.mpich.org/static/downloads/3.2.1/mpich-3.2.1.tar.gz

[root@Server prepare]# tar xzf mpich-3.2.1.tar.gz

[root@Server prepare]# cd mpich-3.2.1

[root@Server mpich-3.2.1]# ./configure --disable-cxx

[root@Server mpich-3.2.1]# make

[root@Server mpich-3.2.1]# make install

[root@Server mpich-3.2.1]# vi machinefile

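[root@Server mpich-3.2.1]# mpiexec -f machinefile -n 3 hostname && mpiexec -n 5 -f machinefile ./examples/cpi

5.2.3 Understanding the HPCG Configuration File

The results of an HPCG run depend on the parameters in the configuration file. When the test finishes, it generates an HPCG-Benchmark report file. The main results are in the Performance Summary (times in sec) section.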

[root@Server ~]# cd /root/prepare/hpcg/setup/build/bin

[root@server1 bin]# cat hpcg.dat

HPCG benchmark input file

Sandia National Laboratories; University of Tennessee, Knoxville

104 104 104 # problem size

1800 # run time in seconds; a run must last at least 1800 s to produce an official result
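
The hpcg.dat file shown here has a fixed four-line layout: two header lines, the grid dimensions, and the run time in seconds. The sketch below renders that layout and checks two conditions: the 1800 s minimum stated above, and the assumption (not stated in this guide) that each dimension should be a multiple of 8. The function names are illustrative.

```python
def render_hpcg_dat(nx, ny, nz, seconds):
    """Return the four-line hpcg.dat text for an nx x ny x nz grid."""
    for dim in (nx, ny, nz):
        # Assumption: HPCG expects each grid dimension to be a multiple of 8.
        if dim % 8 != 0:
            raise ValueError(f"dimension {dim} is not a multiple of 8")
    header = (
        "HPCG benchmark input file\n"
        "Sandia National Laboratories; University of Tennessee, Knoxville\n"
    )
    return f"{header}{nx} {ny} {nz}\n{seconds}\n"


def is_official_run(seconds, minimum=1800):
    """True when the requested run time meets the 1800 s minimum."""
    return seconds >= minimum


dat = render_hpcg_dat(104, 104, 104, 1800)
print(dat, end="")
print("official:", is_official_run(1800))
```

A shorter run time is still useful for a quick smoke test; only runs of at least 1800 s count as official results.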

[root@Server ~]# cat HPCG-Benchmark_3.1_2023-03-23_15-30-40.txt

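6 HPC Application Deployment and Parallel Testing

6.1 WRF

6.1.1 Preparing the Base Environment

Install the compilers and configure the basic environment variables on the server.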

[root@Server ~]# cd /data/home/wrf01/202302test/

[root@Server 202302test]# mkdir Build_WRF

[root@Server 202302test]# mkdir TESTS

[root@Server ~]# yum -y install gcc cpp gcc-gfortran gcc-c++ m4 make csh

[root@Server ~]# vi ~/.bashrc

export DIR=/data/home/wrf01/202302test/Build_WRF/LIBRARIES

export CC=gcc

export CXX=g++

export FC=gfortran

export CFLAGS='-m64'

export F77=gfortran

export FFLAGS='-m64'

export PATH=$DIR/mpich/bin:$PATH

export PATH=$DIR/netcdf/bin:$PATH

export NETCDF=$DIR/netcdf

export JASPERLIB=$DIR/grib2/lib

export JASPERINC=$DIR/grib2/include

export LDFLAGS=-L$DIR/grib2/lib

export CPPFLAGS=-I$DIR/grib2/include

export LD_LIBRARY_PATH=$DIR/grib2/lib:$LD_LIBRARY_PATH

[root@Server ~]# source ~/.bashrc

6.1.2 Installing Third-Party Dependencies

Install the third-party libraries on the server by building zlib, libpng, mpich, jasper, and netcdf, and then test the dependencies.

[root@Server ~]# cd /data/home/wrf01/202302test/Build_WRF

[root@Server Build_WRF]# mkdir LIBRARIES

Download the third-party libraries:

[root@Server Build_WRF]# wget https://www2.mmm.ucar.edu/wrf/OnLineTutorial/compile_tutorial/tar_files/zlib-1.2.7.tar.gz

[root@Server Build_WRF]# wget https://www2.mmm.ucar.edu/wrf/OnLineTutorial/compile_tutorial/tar_files/mpich-3.0.4.tar.gz

[root@Server Build_WRF]# wget https://www2.mmm.ucar.edu/wrf/OnLineTutorial/compile_tutorial/tar_files/netcdf-4.1.3.tar.gz

[root@Server Build_WRF]# wget https://www2.mmm.ucar.edu/wrf/OnLineTutorial/compile_tutorial/tar_files/jasper-1.900.1.tar.gz

[root@Server Build_WRF]# wget https://www2.mmm.ucar.edu/wrf/OnLineTutorial/compile_tutorial/tar_files/libpng-1.2.50.tar.gz

Build and install zlib:

[root@Server Build_WRF]# tar xzvf zlib-1.2.7.tar.gz

[root@Server Build_WRF]# cd zlib-1.2.7

[root@Server zlib-1.2.7]# ./configure --prefix=$DIR/grib2

[root@Server zlib-1.2.7]# make

[root@Server zlib-1.2.7]# make install

Build and install libpng:

[root@Server Build_WRF]# tar xzvf libpng-1.2.50.tar.gz

[root@Server Build_WRF]# cd libpng-1.2.50

[root@Server libpng-1.2.50]# ./configure --prefix=$DIR/grib2

[root@Server libpng-1.2.50]# make

[root@Server libpng-1.2.50]# make install

Build and install mpich:

[root@Server Build_WRF]# tar xzvf mpich-3.0.4.tar.gz

[root@Server Build_WRF]# cd mpich-3.0.4

[root@Server mpich-3.0.4]# ./configure --prefix=$DIR/mpich

[root@Server mpich-3.0.4]# make

[root@Server mpich-3.0.4]# make install

Build and install jasper:

[root@Server Build_WRF]# tar xzvf jasper-1.900.1.tar.gz

[root@Server Build_WRF]# cd jasper-1.900.1

[root@Server jasper-1.900.1]# ./configure --prefix=$DIR/grib2

[root@Server jasper-1.900.1]# make

[root@Server jasper-1.900.1]# make install

Build and install netcdf:

[root@Server Build_WRF]# tar xzvf netcdf-4.1.3.tar.gz

[root@Server Build_WRF]# cd netcdf-4.1.3

[root@Server netcdf-4.1.3]# ./configure --prefix=$DIR/netcdf \

--disable-dap --disable-netcdf-4 --disable-shared

[root@Server netcdf-4.1.3]# make

[root@Server netcdf-4.1.3]# make install

6.1.3 Testing the Dependencies

Verify on the server that the installed dependencies work.

[root@Server Build_WRF]# cd TESTS

[root@Server TESTS]# wget https://www2.mmm.ucar.edu/wrf/OnLineTutorial/compile_tutorial/tar_files/Fortran_C_NETCDF_MPI_tests.tar

[root@Server TESTS]# tar -xf Fortran_C_NETCDF_MPI_tests.tar

Test Fortran+C+NetCDF:

[root@Server TESTS]# cp ${NETCDF}/include/netcdf.inc .

[root@Server TESTS]# gfortran -c 01_fortran+c+netcdf_f.f

[root@Server TESTS]# gcc -c 01_fortran+c+netcdf_c.c

[root@Server TESTS]# gfortran 01_fortran+c+netcdf_f.o 01_fortran+c+netcdf_c.o -L${NETCDF}/lib -lnetcdff -lnetcdf

[root@Server TESTS]# ./a.out

Test Fortran+C+NetCDF+MPI:

[root@Server TESTS]# cp ${NETCDF}/include/netcdf.inc .

[root@Server TESTS]# mpif90 -c 02_fortran+c+netcdf+mpi_f.f

[root@Server TESTS]# mpicc -c 02_fortran+c+netcdf+mpi_c.c

[root@Server TESTS]# mpif90 02_fortran+c+netcdf+mpi_f.o 02_fortran+c+netcdf+mpi_c.o -L${NETCDF}/lib -lnetcdff -lnetcdf

[root@Server TESTS]# mpirun ./a.out

6.1.4 Installing WRF

[root@Server ~]# cd /data/home/wrf01/202302test/Build_WRF

[root@Server Build_WRF]# wget https://www2.mmm.ucar.edu/wrf/src/WRFV4.0.TAR.gz

[root@Server Build_WRF]# tar xzvf WRFV4.0.TAR.gz

[root@Server Build_WRF]# cd WRF

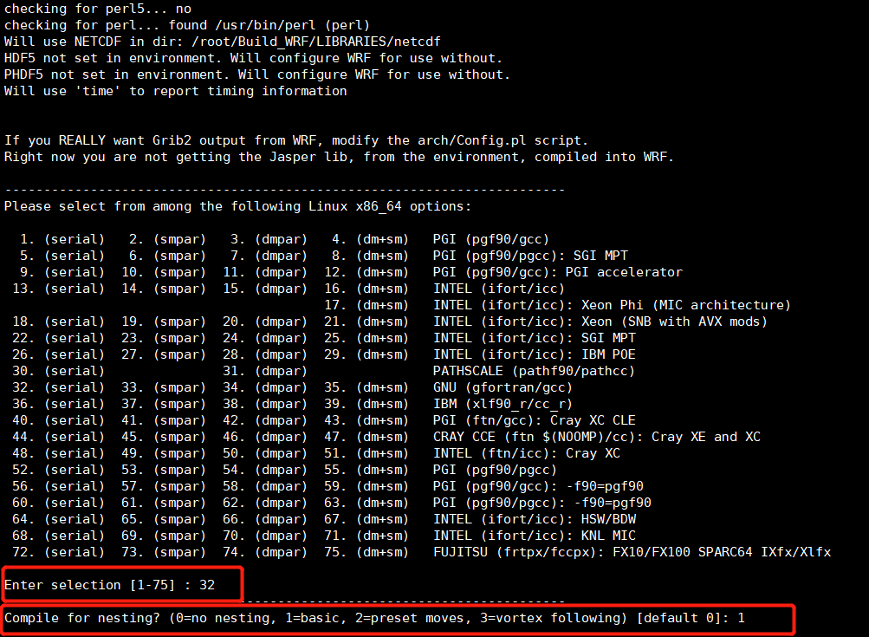

[root@Server WRF]# ./configure

[root@Server WRF]# ./compile

[root@Server WRF]# ls -ls main/*.exe

6.1.5 Installing WPS

[root@Server ~]# cd /data/home/wrf01/202302test/Build_WRF

[root@Server Build_WRF]# wget \

https://www2.mmm.ucar.edu/wrf/src/WPSV4.0.TAR.gz

[root@Server Build_WRF]# tar xzvf WPSV4.0.TAR.gz

[root@Server Build_WRF]# cd WPS

[root@Server WPS]# ./clean

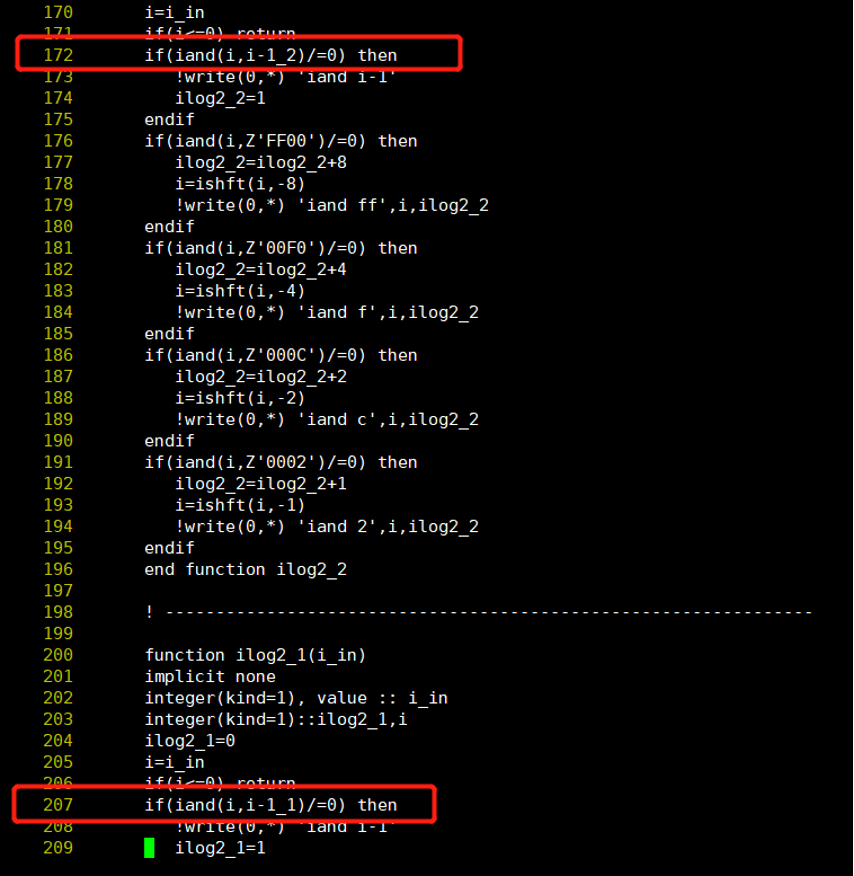

Modify the intmath.f file:

[root@Server WPS]# cat ./ungrib/src/ngl/g2/intmath.f

Build and install WPS:

[root@Server WPS]# ./configure

Enter selection [1-40] : 1

[root@Server WPS]# ./compile

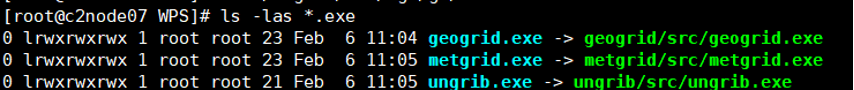

[root@Server WPS]# ls -las *.exe

[root@Server WPS]# vi namelist.wps

&share

wrf_core = 'ARW',

max_dom = 1,

start_date = '2000-01-24_12:00:00',

end_date = '2000-01-26_00:00:00',

interval_seconds = 21600

io_form_geogrid = 2,

/

&geogrid

parent_id = 1, 1,

parent_grid_ratio = 1, 3,

i_parent_start = 1, 31,

j_parent_start = 1, 17,

e_we = 104, 142,

e_sn = 61, 97,

geog_data_res = '10m','2m',

dx = 30000,

dy = 30000,

map_proj = 'lambert',

ref_lat = 34.83,

ref_lon = -81.03,

truelat1 = 30.0,

truelat2 = 60.0,

stand_lon = -98.0,

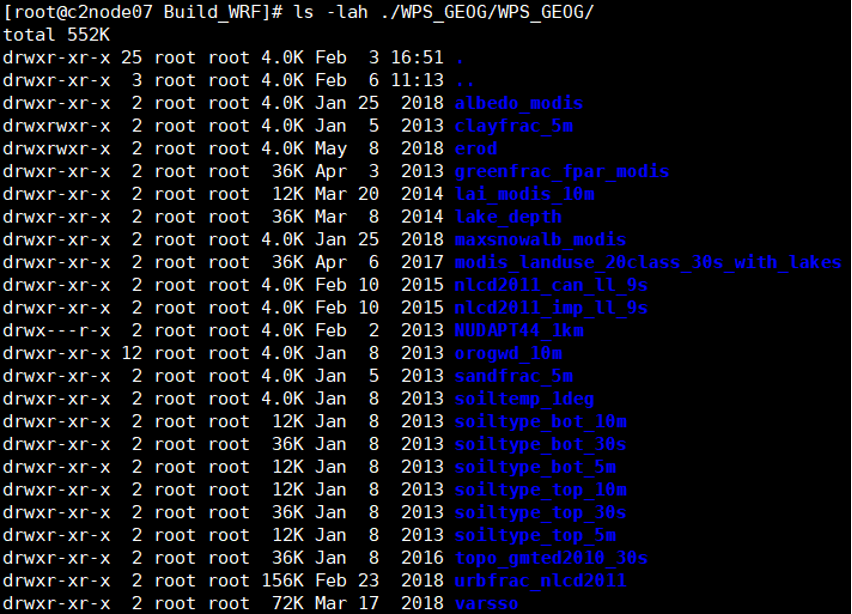

geog_data_path = '/data/home/wrf01/202302test/Build_WRF/WPS_GEOG/WPS_GEOG/'

/

&ungrib

out_format = 'WPS',

prefix = 'FILE',

/

&metgrid

fg_name = 'FILE'

io_form_metgrid = 2,

/
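
As a sanity check on the namelist.wps values, the sketch below computes how many time slices lie between start_date and end_date at the given interval_seconds (one met_em file is expected per slice for a single domain), and the physical extent of the domain from e_we, e_sn, dx, and dy. The function names are illustrative, and the extent formula assumes the usual convention that a grid with e_we points spans (e_we - 1) × dx.

```python
from datetime import datetime


def wps_time_slices(start_date, end_date, interval_seconds):
    """Number of time slices from start_date to end_date, both inclusive."""
    fmt = "%Y-%m-%d_%H:%M:%S"
    span = datetime.strptime(end_date, fmt) - datetime.strptime(start_date, fmt)
    return int(span.total_seconds()) // interval_seconds + 1


def domain_extent_km(e_we, e_sn, dx, dy):
    """West-east and south-north extent in km (dx and dy are in meters)."""
    return (e_we - 1) * dx / 1000, (e_sn - 1) * dy / 1000


slices = wps_time_slices("2000-01-24_12:00:00", "2000-01-26_00:00:00", 21600)
extent = domain_extent_km(104, 61, 30000, 30000)
print("time slices:", slices)
print("domain extent (km):", extent)
```

If metgrid.exe later produces a different number of met_em files than the slice count computed here, the input data probably does not cover the whole date range.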

Download the static geographic data:

[root@Server ~]# cd /data/home/wrf01/202302test/Build_WRF

[root@Server Build_WRF]# mkdir WPS_GEOG

Download link: https://www2.mmm.ucar.edu/wrf/users/download/get_sources_wps_geog.html

6.1.6 Generating the WRF Executable Files

[root@Server ~]# cd /data/home/wrf01/202302test/Build_WRF

Generate the geographic data:

[root@Server ~]# cd /data/home/wrf01/202302test/Build_WRF/WPS

[root@Server WPS]# ./geogrid.exe

[root@Server WPS]# ls -lah geo_em.d01.nc

Download and link the meteorological data:

Meteorological data download site: https://rda.ucar.edu/.

[root@Server Build_WRF]# mkdir DATA

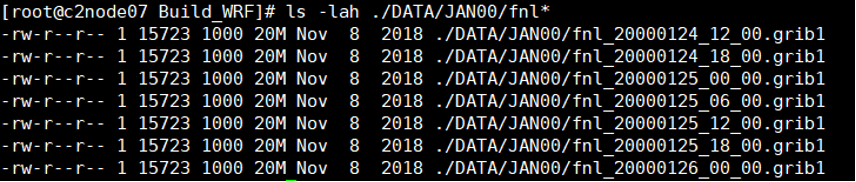

[root@Server Build_WRF]# ls -lah ./DATA/JAN00/fnl*

[root@Server Build_WRF]# cd WPS

[root@Server WPS]# ./link_grib.csh ../DATA/JAN00/fnl

[root@Server WPS]# ln -sf ungrib/Variable_Tables/Vtable.GFS Vtable

[root@Server WPS]# ./ungrib.exe

[root@Server WPS]# ls -lah FILE*

Merge the meteorological and geographic data:

[root@Server WPS]# ./metgrid.exe

Link WPS to WRF:

[root@Server WPS]# cd ../WRF/test/em_real/

[root@Server em_real]# ln -sf /data/home/wrf01/202302test/Build_WRF/WPS/met_em* .

[root@Server em_real]# mpirun -np 1 ./real.exe

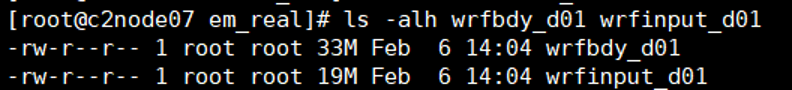

[root@Server em_real]# ls -alh wrfbdy_d01 wrfinput_d01

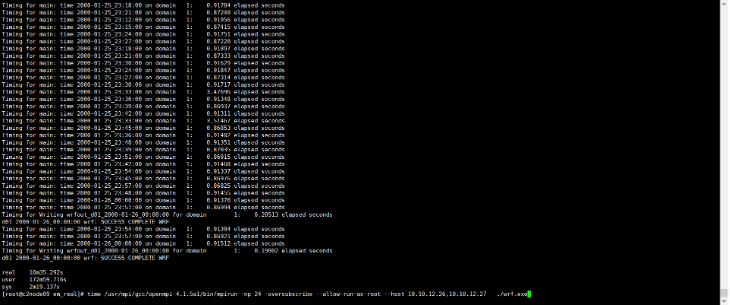

6.1.7 Running the WRF Parallel Test

[root@Server em_real]# time /usr/mpi/gcc/openmpi-4.1.5a1/bin/mpirun -np 24 -oversubscribe --allow-run-as-root \

--host 10.230.1.11,10.230.1.12 ./wrf.exe

6.2 LAMMPS

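LAMMPS (Large-scale Atomic/Molecular Massively Parallel Simulator) is a parallel simulator for large-scale atomic and molecular systems, used mainly for molecular dynamics computation and simulation.

6.2.1 Building and Installing GCC 7.3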

[root@server ~]# yum -y install gcc gcc-c++ gcc-gfortran texinfo

[root@server ~]# wget http://mirrors.ustc.edu.cn/gnu/gcc/gcc-7.3.0/gcc-7.3.0.tar.gz

[root@server ~]# tar zxvf gcc-7.3.0.tar.gz

[root@server ~]# cd gcc-7.3.0

[root@server ~]# sh ./contrib/download_prerequisites

[root@server ~]# mkdir build && cd build

[root@server ~]# ../configure \

--prefix=/usr/local/gcc-7.3 \

--disable-bootstrap \

--enable-languages=c,c++,fortran \

--disable-multilib

[root@server ~]# make -j

[root@server ~]# make install

[root@server ~]# vi ~/.bashrc

export GCC_HOME=/usr/local/gcc-7.3

export PATH=$GCC_HOME/bin:$PATH

export MANPATH=$GCC_HOME/share/man

export CPATH=$GCC_HOME/include:$CPATH

export LD_LIBRARY_PATH=$GCC_HOME/lib:$GCC_HOME/lib64:LD_LIBRARY_PATH

export LIBRARY_PATH=$GCC_HOME/lib:$GCC_HOME/lib64:LIBRARY_PATH

[root@server ~]# source ~/.bashrc

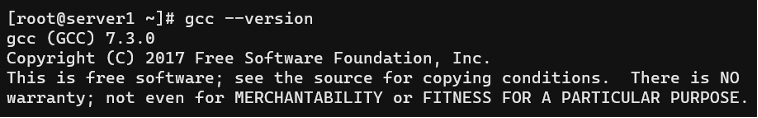

[root@server ~]# gcc --verison

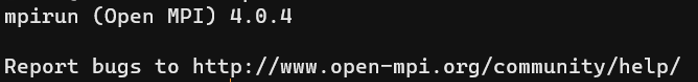

6.2.2 编译安装OpenMPI

[root@server ~]# yum install -y gcc gcc-c++ gcc-gfortran

[root@server ~]# wget \

https://download.open-mpi.org/release/open-mpi/v4.0/openmpi-4.0.4.tar.bz2

[root@server ~]# tar jxvf openmpi-4.0.4.tar.bz2

[root@server ~]# cd openmpi-4.0.4

[root@server ~]# mkdir build && cd build

[root@server ~]# ../configure \

--prefix=/usr/local/openmpi-4.0.4 CC=gcc CXX=g++ \

FC=gfortran F77=gfortran

[root@server ~]# make -j

[root@server ~]# make install

[root@server ~]# vi ~/.bashrc

export PATH=$PATH:/usr/local/share/openvswitch/scripts

export GCC_HOME=/usr/local/gcc-7.3

export PATH=$GCC_HOME/bin:$PATH

export MANPATH=$GCC_HOME/share/man

export CPATH=$GCC_HOME/include:$CPATH

export LD_LIBRARY_PATH=$GCC_HOME/lib:$GCC_HOME/lib64:$LD_LIBRARY_PATH

export LIBRARY_PATH=$GCC_HOME/lib:$GCC_HOME/lib64:$LIBRARY_PATH

export PATH=/usr/local/openmpi-4.0.4/bin:$PATH

export LD_LIBRARY_PATH=/usr/local/openmpi-4.0.4/lib:$LD_LIBRARY_PATH

export MANPATH=/usr/local/openmpi-4.0.4/share/man:$MANPATH

[root@server ~]# source ~/.bashrc

[root@server ~]# mpirun --version

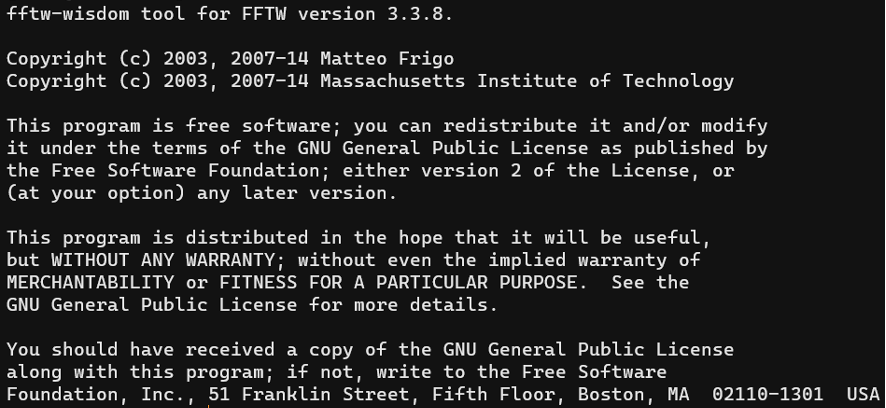

6.2.3 Building and Installing FFTW

[root@server ~]# wget ftp://ftp.fftw.org/pub/fftw/fftw-3.3.8.tar.gz

[root@server ~]# tar zxvf fftw-3.3.8.tar.gz

[root@server ~]# cd fftw-3.3.8

[root@server ~]# mkdir build && cd build

[root@server ~]# ../configure \

--prefix=/usr/local/fftw \

--enable-mpi \

--enable-openmp \

--enable-shared \

--enable-static

[root@server ~]# make -j

[root@server ~]# make install

[root@server ~]# vi ~/.bashrc

export PATH=/usr/local/fftw/bin:$PATH

export LD_LIBRARY_PATH=/usr/local/fftw/lib:$LD_LIBRARY_PATH

[root@server ~]# source ~/.bashrc

[root@server ~]# fftw-wisdom --version

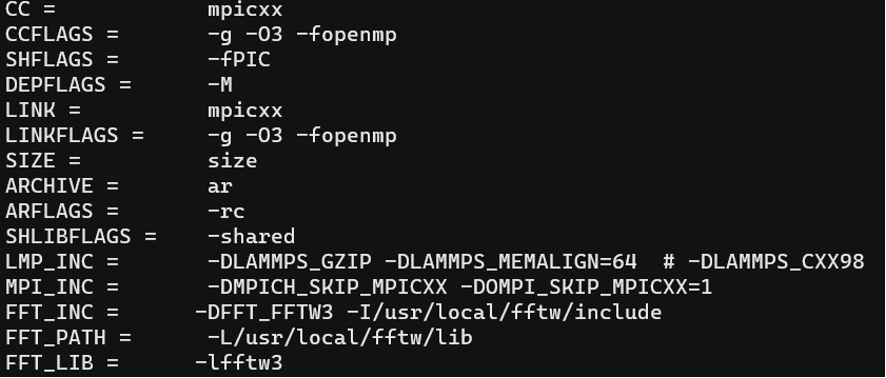

6.2.4 Building and Installing LAMMPS

[root@server ~]# yum -y install libjpeg-devel libpng-devel

[root@server ~]# wget https://lammps.sandia.gov/tars/lammps-3Mar20.tar.gz

[root@server ~]# tar zxvf lammps-3Mar20.tar.gz

[root@server ~]# cd lammps-3Mar20/src

[root@server ~]# vi MAKE/Makefile.mpi

[root@server ~]# make yes-MANYBODY

[root@server ~]# make -j mpi

[root@server ~]# mkdir -p /usr/local/lammps/bin

[root@server ~]# cp lmp_mpi /usr/local/lammps/bin/

[root@server ~]# vi ~/.bashrc

export PATH=/usr/local/lammps/bin:$PATH

export LD_LIBRARY_PATH=/usr/local/lammps/lib:$LD_LIBRARY_PATH

[root@server ~]# source ~/.bashrc

6.2.5 Running the LAMMPS Parallel Test

[root@server1 ~]# cd ~/lammps/lammps-stable_3Mar2020/examples/shear

[root@server1 ~]# vi in.shear

atom_style atomic

region box block 0 16.0 0 10.0 0 2.828427

create_box 100 box

thermo 25

thermo_modify temp new3d

timestep 0.001

thermo_modify temp new2d

reset_timestep 0

run 340000
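
To judge how long this final run is, the sketch below multiplies the step count by the timestep and counts the thermo output lines. It assumes that `timestep 0.001`, `thermo 25`, and `run 340000` from the excerpt all apply to the same run, and that thermo output is printed at step 0 and then every 25 steps. The simulated time is in whatever time unit the input file's units setting implies. The function name is illustrative.

```python
from fractions import Fraction


def run_summary(steps, timestep, thermo_every):
    """Return (simulated time, number of thermo output lines) for one run."""
    # Fraction keeps the decimal timestep exact, avoiding float rounding.
    simulated_time = Fraction(timestep) * steps
    thermo_lines = steps // thermo_every + 1
    return simulated_time, thermo_lines


summary = run_summary(steps=340000, timestep="0.001", thermo_every=25)
print("simulated time:", float(summary[0]))
print("thermo lines:", summary[1])
```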

[root@server1 ~]# mpirun --allow-run-as-root -np 4 --oversubscribe \

--host 10.230.1.11,10.230.1.12 lmp_mpi \

< /root/lammps/lammps-3Mar20/examples/shear/in.shear