Configuration Guide: OpenStack Installation and Deployment with Open vSwitch

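- 1 OpenStack Overview

- 2 OpenStack Architecture

- 3 Core OpenStack Components

- 4 OpenStack Supporting Services

- 5 Hardware Configuration

- 6 System Tuning

- 7 Basic Service Deployment

- 8 Keystone (controller node)

- 9 Glance (controller node)

- 10 Nova (controller node)

- 11 Nova (compute node)

- 12 Neutron (controller node)

- 13 Neutron (compute node)

- 14 Horizon (controller node)

- 15 References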

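1 OpenStack Overview

OpenStack is a free and open-source software project, licensed under the Apache License, that was originally developed and launched jointly by NASA (the U.S. National Aeronautics and Space Administration) and Rackspace. OpenStack supports almost every type of cloud environment. The project's goal is to provide a cloud computing management platform that is simple to implement, massively scalable, feature-rich and built on unified standards. OpenStack delivers an Infrastructure-as-a-Service (IaaS) solution through a set of complementary services, each of which exposes an API for integration.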

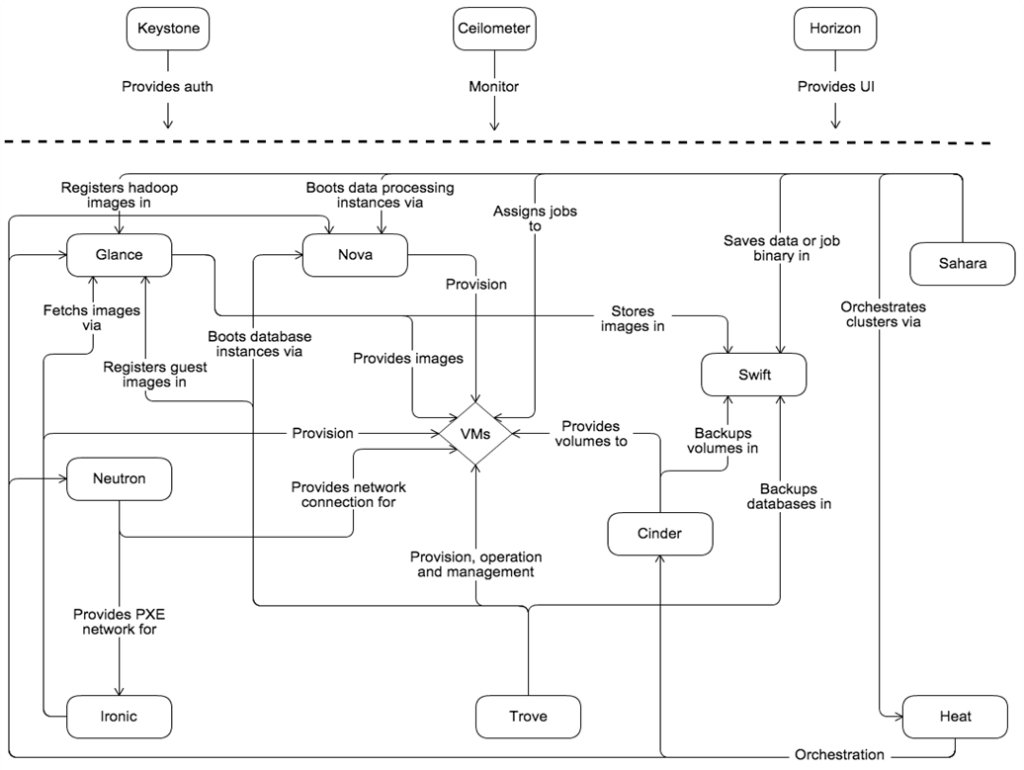

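2 OpenStack Architecture

An OpenStack deployment consists of four kinds of nodes: controller, compute, network and storage nodes. The controller node manages the other nodes, handling tasks such as creating and migrating virtual machines and allocating network and storage resources. Compute nodes run the virtual machines. Network nodes handle communication between the external network and the internal networks. Storage nodes manage additional storage for the virtual machines. The component architecture is shown in the figure below: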

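3 Core OpenStack Components

- Keystone (Identity service): manages the authentication information and tokens used by all other OpenStack components (creation, modification and so on), storing them in a database such as MySQL

- Nova (Compute service): provides elastic control of OpenStack compute and VM-centric services such as creating, running, migrating and snapshotting virtual machines. It exposes an API toward the controller node, which dispatches tasks to it, and communicates through nova-api

- Neutron (Networking service): manages the network topology of the network nodes and the communication between private and public networks. It also manages connectivity and topology between virtual machine networks and the firewalls in front of the virtual machines, and it provides the network management pages in Horizon

- Glance (Image service): manages the images available for deploying virtual machines, including image import, image formats and building templates. It is a system for discovering, registering and retrieving VM images, and every compute instance boots from a Glance image

- Horizon (Dashboard): a web control panel for managing and controlling OpenStack services. It can manage instances and images, create key pairs, attach volumes to instances and operate Swift containers. Users can also reach an instance directly from the panel through a console or VNC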

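4 OpenStack Supporting Services

- SQL database: OpenStack services store their information in an SQL database, which usually runs on the controller node

- Message queue: OpenStack uses a message queue to coordinate operations and status information between services. The message queue service usually runs on the controller node

- Memcached: the authentication mechanism of the Identity service uses Memcached to cache tokens. The Memcached service usually runs on the controller node

- Etcd: OpenStack services use Etcd, a distributed, reliable key-value store, for distributed key locking, storing configuration, tracking service liveness and similar tasks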

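5 Hardware Configuration

| Host | OS | NIC 1: eno1 | NIC 2: eno2 |
| --- | --- | --- | --- |
| openstack (controller node) | CentOS 7.6 | 192.168.4.145 | 192.168.5.145 |
| compute (compute node) | CentOS 7.6 | 192.168.4.144 | 192.168.5.144 |

Server requirements:

- Two Gigabit Ethernet ports

- At least 8 GB of RAM

- At least 40 GB of disk space

- On the compute node, enable CPU hardware virtualization in the BIOS (called VT-x on Intel and AMD-V on AMD)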

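6 System Tuning

Optimizing a host is not only a matter of hardware and software. Tuning the operating system also covers several areas. The steps below prepare both nodes for the installation and improve host performance.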

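6.1 Disable SELinux (controller and compute nodes)

If SELinux is left enabled, parts of the installation can fail. For example, it restricts passwordless SSH login. setenforce 0 switches SELinux to permissive mode immediately, and the configuration change disables it permanently from the next boot. Edit /etc/selinux/config directly. /etc/sysconfig/selinux is only a symbolic link to it, and sed -i would replace that link with a regular file, leaving the real configuration unchanged.

[root@localhost ~]# setenforce 0

[root@localhost ~]# sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

6.2 Disable the firewall (controller and compute nodes)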

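This prevents the ports of the various components from being unreachable during installation.

[root@localhost ~]# systemctl stop firewalld && systemctl disable firewalld

6.3 Time synchronization (controller and compute nodes)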

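Keep the clocks of all nodes synchronized. Clock differences between nodes cause problems such as services and agents being reported as down.

[root@localhost ~]# yum -y install ntp

[root@localhost ~]# ntpdate ntp1.aliyun.com

[root@localhost ~]# timedatectl set-timezone Asia/Shanghai

ntpdate synchronizes only once. To keep the clocks aligned afterwards, also enable the ntpd service:

[root@localhost ~]# systemctl enable ntpd

[root@localhost ~]# systemctl start ntpd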

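6.4 CPU isolation (controller and compute nodes)

Some OpenStack machines act as controller nodes, but most of them run virtualization software. A host with many VMs also runs its own system services, so the VMs and the host system inevitably compete for CPU. The isolcpus kernel parameter removes the listed cores from the general scheduler, so the host OS runs only on the remaining cores. In this example, cores 8-47 are isolated and cores 0-7 are left to the host OS. Adjust the list to the host's actual CPU count. Ordinary processes are not scheduled onto isolated cores, so VMs run there only when their vCPUs are explicitly pinned to those cores (for example with nova's vcpu_pin_set option).

[root@localhost ~]# vi /etc/default/grub

GRUB_CMDLINE_LINUX_DEFAULT="isolcpus=8,9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47"

CentOS 7 has no update-grub command. Regenerate the GRUB configuration with grub2-mkconfig, then reboot for the change to take effect. On UEFI systems, the output file is /boot/efi/EFI/centos/grub.cfg.

[root@localhost ~]# grub2-mkconfig -o /boot/grub2/grub.cfg

[root@localhost ~]# reboot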
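Writing out every core number by hand is error-prone. The following minimal sketch builds the same argument in range form. It assumes the host OS keeps the first N logical CPUs and every other CPU is isolated. The function name isolcpus_arg is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print an isolcpus= kernel argument in range form.
# Assumption: CPUs 0..reserved-1 stay with the host OS; all others are isolated.
# Usage: isolcpus_arg [total_cpus] [reserved_cpus]
isolcpus_arg() {
    total=${1:-$(nproc --all)}   # logical CPU count, defaults to this host
    reserved=${2:-8}             # CPUs left to the host OS
    if [ "$total" -le "$reserved" ]; then
        echo "error: need more than $reserved CPUs, got $total" >&2
        return 1
    fi
    echo "isolcpus=${reserved}-$((total - 1))"
}
```

For example, isolcpus_arg 48 8 prints isolcpus=8-47, which the kernel treats the same as the comma-separated list above.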

6.5 Disable KVM memory sharing (compute node)

On the memory side, disable KSM (Kernel Samepage Merging, which KVM uses to share identical memory pages between VMs) and enable transparent huge pages. KSM saves memory but spends CPU time scanning pages, so turning it off reduces the host's CPU overhead. pages_shared and pages_sharing under /sys/kernel/mm/ksm/ are read-only counters. Stop KSM by writing 0 to /sys/kernel/mm/ksm/run.

[root@localhost ~]# echo 0 > /sys/kernel/mm/ksm/run

[root@localhost ~]# echo always > /sys/kernel/mm/transparent_hugepage/enabled

[root@localhost ~]# echo never > /sys/kernel/mm/transparent_hugepage/defrag

[root@localhost ~]# echo 0 > /sys/kernel/mm/transparent_hugepage/khugepaged/defrag

If the ksm and ksmtuned services are installed, stop and disable them as well, because ksmtuned can switch KSM back on. These /sys settings are not persistent and are lost on reboot.
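The /sys writes in 6.5 do not survive a reboot. One way to reapply them at every boot is to call a small script from /etc/rc.d/rc.local, which must be made executable on CentOS 7. The sketch below groups the settings in one function. It assumes a hypothetical SYSFS_ROOT variable that exists only so the function can be exercised against a scratch directory.

```shell
#!/bin/sh
# Hypothetical helper: apply the KSM/THP settings from 6.5 in one place.
# SYSFS_ROOT is an assumption added for testing; it defaults to the real /sys.
apply_mem_tuning() {
    mm="${SYSFS_ROOT:-/sys}/kernel/mm"
    # each entry is "<path under kernel/mm>=<value to write>"
    for setting in \
        "ksm/run=0" \
        "transparent_hugepage/enabled=always" \
        "transparent_hugepage/defrag=never" \
        "transparent_hugepage/khugepaged/defrag=0"
    do
        path="$mm/${setting%%=*}"   # text before the first "="
        value=${setting#*=}         # text after the first "="
        if [ -w "$path" ]; then
            echo "$value" > "$path"
        else
            echo "skip: $path is not writable" >&2
        fi
    done
}
```

On the compute node, run apply_mem_tuning as root with SYSFS_ROOT unset so that it writes to the real /sys.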

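6.6 Raise the maximum number of open files (compute and controller nodes)

Raise the per-process open-file limit so that processes do not fail when they reach it. The limits.conf settings apply to login sessions (through PAM). Services started by systemd take their limit from LimitNOFILE in the unit file instead.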

[root@localhost ~]# vi /etc/security/limits.conf

* soft nofile 65535

* hard nofile 65535

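6.7 Reduce swap usage (controller and compute nodes)

Servers today usually have hundreds of GB of memory, so vm.swappiness can be set fairly low (for example between 10 and 30). A low value makes the OS prefer physical memory and use swap less, which improves the performance of both the host and its virtual machines. Apply the change with sysctl -p.

[root@localhost ~]# echo 'vm.swappiness=10' >> /etc/sysctl.conf

[root@localhost ~]# sysctl -p

7 Basic Service Deployment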

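7.1 Install a China-based OpenStack yum mirror (controller and compute nodes)

Alibaba Cloud's OpenStack yum mirror speeds up package downloads for every component. The file is written with > rather than appended with >>, so repeated runs do not duplicate the repository sections.

[root@localhost ~]# cat << EOF > /etc/yum.repos.d/openstack.repo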

[openstack-rocky]

name=openstack-rocky

baseurl=https://mirrors.aliyun.com/centos/7/cloud/x86_64/openstack-rocky/

enabled=1

gpgcheck=0

[qemu-kvm]

name=qemu-kvm

baseurl=https://mirrors.aliyun.com/centos/7/virt/x86_64/kvm-common/

enabled=1

gpgcheck=0

EOF
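The following sketch writes the same file, taking the release name as a parameter. The function name write_openstack_repo is hypothetical. It assumes the mirror keeps the openstack-<release> directory layout used for Rocky above, so check that the directory exists on the mirror before using another release.

```shell
#!/bin/sh
# Hypothetical helper: write the repo file from 7.1 for a given release.
# Assumption: the Aliyun mirror uses openstack-<release>/ for every release.
# Usage: write_openstack_repo [release] [file]
write_openstack_repo() {
    release=${1:-rocky}
    file=${2:-/etc/yum.repos.d/openstack.repo}
    # ">" overwrites the file, so re-running does not duplicate sections
    cat > "$file" << EOF
[openstack-${release}]
name=openstack-${release}
baseurl=https://mirrors.aliyun.com/centos/7/cloud/x86_64/openstack-${release}/
enabled=1
gpgcheck=0

[qemu-kvm]
name=qemu-kvm
baseurl=https://mirrors.aliyun.com/centos/7/virt/x86_64/kvm-common/
enabled=1
gpgcheck=0
EOF
}
```

Calling write_openstack_repo with no arguments reproduces the Rocky file above.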

7.2 Install the OpenStack client (controller node)

[root@localhost ~]# yum upgrade -y

[root@localhost ~]# yum install -y python-openstackclient openstack-selinux

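7.3 Install MySQL (controller node)

Install MariaDB (the MySQL-compatible database shipped with CentOS 7) and edit its configuration files. The data directory is set with datadir in /etc/my.cnf.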

[root@localhost ~]# yum install -y mariadb mariadb-server python2-PyMySQL

[root@localhost ~]# vi /etc/my.cnf.d/openstack.cnf

[mysqld]

bind-address=192.168.4.145

default-storage-engine = innodb

innodb_file_per_table = on

max_connections = 4096

collation-server = utf8_general_ci

character-set-server = utf8

[root@localhost ~]# vi /etc/my.cnf

[client-mariadb]

socket=/var/lib/mysql/mysql.sock

[mysqld]

query_cache_size = 0

query_cache_type = OFF

max_connections = 4096

datadir=/var/lib/mysql

socket=/var/lib/mysql/mysql.sock

log-error=/var/log/mariadb/mariadb.log

pid-file=/var/run/mariadb/mariadb.pid

7.4 Start MySQL (controller node)

Enable and start MariaDB under systemd, then set the password of the MySQL root user.

[root@localhost ~]# systemctl enable mariadb

[root@localhost ~]# systemctl start mariadb

[root@localhost ~]# mysql -uroot

MariaDB [(none)]> update mysql.user set password=password('tera123') where user = 'root';

MariaDB [(none)]> flush privileges;

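7.5 Install RabbitMQ (controller node)

Deploy RabbitMQ to coordinate operations and status information between services, and set the RabbitMQ data (Mnesia) and log directories.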

[root@localhost ~]# yum -y install rabbitmq-server

[root@localhost ~]# vi /etc/rabbitmq/rabbitmq-env.conf

RABBITMQ_MNESIA_BASE=/var/lib/rabbitmq/mnesia

RABBITMQ_LOG_BASE=/var/lib/rabbitmq/log

[root@localhost ~]# mkdir -p /var/lib/rabbitmq/mnesia

[root@localhost ~]# mkdir -p /var/lib/rabbitmq/log

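The directories are created as root, but the RabbitMQ server runs as the rabbitmq user, so give that user ownership:

[root@localhost ~]# chown -R rabbitmq:rabbitmq /var/lib/rabbitmq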

[root@localhost ~]# systemctl enable rabbitmq-server.service

[root@localhost ~]# systemctl start rabbitmq-server.service

7.6 Configure the RabbitMQ user (controller node)

Add the openstack user and grant it configure, write and read permissions.

[root@localhost ~]# rabbitmqctl add_user openstack tera123

Creating user "openstack" ...

[root@localhost ~]# rabbitmqctl set_permissions openstack ".*" ".*" ".*"

Setting permissions for user "openstack" in vhost "/" ...

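7.7 Install Memcached (controller node)

Install Memcached and edit its configuration so that it also listens on the controller's management IP address.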

[root@localhost ~]# yum -y install memcached

[root@localhost ~]# vi /etc/sysconfig/memcached

OPTIONS="-l 127.0.0.1,::1,192.168.4.145"

[root@localhost ~]# systemctl enable memcached

[root@localhost ~]# systemctl start memcached

7.8 Install Etcd (controller node)

Install Etcd, edit its configuration file, and manage it with systemd.

[root@localhost ~]# yum -y install etcd

[root@localhost ~]# vi /etc/etcd/etcd.conf

ETCD_LISTEN_PEER_URLS=http://192.168.4.145:2380

ETCD_LISTEN_CLIENT_URLS="http://192.168.4.145:2379,http://127.0.0.1:2379"

ETCD_NAME="controller"

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.4.145:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://192.168.4.145:2379"

ETCD_INITIAL_CLUSTER="controller=http://192.168.4.145:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

[root@localhost ~]# systemctl enable etcd

[root@localhost ~]# systemctl start etcd

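8 Keystone (controller node)

The OpenStack Identity service provides a single point of integration for managing authentication, authorization and a catalog of services.

Keystone is usually the first service a user interacts with. Once authenticated, an end user can use their identity to access other OpenStack services. Likewise, other OpenStack services use Keystone to verify that users are who they say they are and to discover where other services are in the deployment. Users and services can locate other services through the service catalog that Keystone manages.

The service catalog is the collection of services available in an OpenStack deployment. Each service can have one or more endpoints, and each endpoint is one of three types: admin, internal or public. In a production environment, the different endpoint types may reside on separate networks exposed to different kinds of users, for security reasons.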

8.1 Create the database and grant privileges in MySQL

Create the keystone database and grant the keystone user access to it.

[root@localhost ~]# mysql -uroot -p

MariaDB [(none)]> create database keystone;

MariaDB [(none)]> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' identified by 'tera123';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' identified by 'tera123';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'openstack' identified by 'tera123';
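The same CREATE DATABASE and GRANT statements are repeated for every service database in this guide (8.1, 9.1, 10.1 and 12.1). The sketch below prints them so they can be piped into mysql. The helper name service_db_sql is hypothetical. The helper pastes the password into the SQL unescaped, so it must not contain single quotes.

```shell
#!/bin/sh
# Hypothetical helper: print CREATE DATABASE / GRANT statements for one
# service database, mirroring the statements used in this guide.
# Usage: service_db_sql <database> <user> <password> [extra hosts...]
service_db_sql() {
    db=$1; user=$2; pass=$3; shift 3
    echo "CREATE DATABASE IF NOT EXISTS ${db};"
    # localhost and % are always granted; extra hosts (such as the
    # controller hostname "openstack") come from the remaining arguments
    for host in localhost '%' "$@"; do
        echo "GRANT ALL PRIVILEGES ON ${db}.* TO '${user}'@'${host}' IDENTIFIED BY '${pass}';"
    done
}
```

For example: service_db_sql keystone keystone tera123 openstack | mysql -uroot -p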

8.2 Install the Keystone packages

Install openstack-keystone, the httpd web server and its mod_wsgi module, then configure Keystone.

[root@localhost ~]# yum install openstack-keystone httpd mod_wsgi

[root@localhost ~]# vi /etc/keystone/keystone.conf

[database]

connection=mysql+pymysql://keystone:tera123@192.168.4.145/keystone

[token]

provider=fernet

8.3 Set up the Keystone database

Populate the Keystone database, initialize the Fernet key repositories and bootstrap the Identity service.

[root@localhost ~]# su -s /bin/sh -c "keystone-manage db_sync" keystone

[root@localhost ~]# keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

[root@localhost ~]# keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

[root@localhost ~]# keystone-manage bootstrap --bootstrap-password tera123 \
--bootstrap-admin-url http://192.168.4.145:5000/v3/ \
--bootstrap-internal-url http://192.168.4.145:5000/v3/ \
--bootstrap-public-url http://192.168.4.145:5000/v3/ \
--bootstrap-region-id RegionOne

8.4 Configure the Apache HTTP server

[root@localhost ~]# vi /etc/httpd/conf/httpd.conf

ServerName 192.168.4.145

[root@localhost ~]# ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/

8.5 Finish the deployment

Enable and start httpd (which serves Keystone) under systemd, then create the admin credentials file.

[root@localhost ~]# systemctl enable httpd.service

[root@localhost ~]# systemctl start httpd.service

[root@localhost ~]# vi admin-openrc

export OS_USERNAME=admin

export OS_PASSWORD=tera123

export OS_PROJECT_NAME=admin

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_DOMAIN_NAME=Default

export OS_AUTH_URL=http://192.168.4.145:5000/v3

export OS_IDENTITY_API_VERSION=3
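The admin-openrc file can also be written without an editor. The following sketch assumes a hypothetical OPENRC_PATH variable (default ./admin-openrc) and uses the values from this guide. It loads the file with "." so that the variables are set in the current shell.

```shell
#!/bin/sh
# Sketch: create admin-openrc (section 8.5) without an editor and load it.
# OPENRC_PATH is a hypothetical override; the values match this guide.
OPENRC_PATH=${OPENRC_PATH:-./admin-openrc}

# quoted 'EOF' keeps the shell from expanding anything inside the file
cat > "$OPENRC_PATH" << 'EOF'
export OS_USERNAME=admin
export OS_PASSWORD=tera123
export OS_PROJECT_NAME=admin
export OS_USER_DOMAIN_NAME=Default
export OS_PROJECT_DOMAIN_NAME=Default
export OS_AUTH_URL=http://192.168.4.145:5000/v3
export OS_IDENTITY_API_VERSION=3
EOF
chmod 600 "$OPENRC_PATH"   # the file contains the admin password

# "." loads the variables into the current shell; executing the file as a
# script would set them only in a child process
. "$OPENRC_PATH"
```

Every later openstack command in this guide expects these variables to be present in the shell.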

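8.6 Create the service project

Load the admin credentials into the current shell, then create the service project and the user role.

[root@localhost ~]# . admin-openrc

[root@localhost ~]# openstack project create --domain default \
--description "Service Project" service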

[root@localhost ~]# openstack role create user

8.7 Verify Keystone

[root@localhost ~]# openstack user list

+----------------------------------+-------+
| ID                               | Name  |
+----------------------------------+-------+
| 57341d3e37eb4dc997624f9502495e44 | admin |
+----------------------------------+-------+

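9 Glance (controller node)

Glance lets users discover, register and retrieve virtual machine images. It provides a REST API for querying VM image metadata and retrieving the actual images. Images made available through the Image service can be stored in a variety of locations, from simple file systems to object storage systems such as OpenStack Object Storage. Glance consists of the following parts:

- glance-api: accepts Image API calls for image discovery, retrieval and storage

- glance-registry: stores, processes and retrieves metadata about images. Metadata includes items such as size and type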

9.1 Create the database and grant privileges in MySQL

Create the glance database and grant the glance user access to it.

[root@localhost ~]# mysql -uroot -p

MariaDB [(none)]> create database glance;

MariaDB [(none)]>GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' identified by 'tera123';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' identified by 'tera123';

MariaDB [(none)]>GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'openstack' identified by 'tera123';

9.2 Create the user and service

Create the glance user and grant it the admin role in the service project. Then create the image service and its endpoints in Keystone. At the password prompt, enter the password used in the [keystone_authtoken] sections of the Glance configuration files (123456 in this guide).

[root@localhost ~]# openstack user create --domain default --password-prompt glance

[root@localhost ~]# openstack role add --project service --user glance admin

[root@localhost ~]# openstack service create --name glance \
--description "OpenStack Image" image

[root@localhost ~]# openstack endpoint create --region RegionOne image public \
http://192.168.4.145:9292

[root@localhost ~]# openstack endpoint create --region RegionOne image internal \
http://192.168.4.145:9292

[root@localhost ~]# openstack endpoint create --region RegionOne image admin \
http://192.168.4.145:9292
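Each service in this guide registers the same URL three times, once per endpoint interface. The sketch below loops over public, internal and admin. The helper name create_endpoints is hypothetical, and it assumes the admin-openrc credentials are already loaded. It also uses a hypothetical OPENSTACK variable that defaults to the real openstack CLI. Setting OPENSTACK=echo prints the commands instead of running them.

```shell
#!/bin/sh
# Hypothetical helper: create the public, internal and admin endpoints of
# one service. OPENSTACK is an assumption for dry runs/tests; it defaults
# to the real openstack CLI.
# Usage: create_endpoints <service_type> <url> [region]
create_endpoints() {
    service_type=$1          # e.g. image, compute, placement, network
    url=$2                   # e.g. http://192.168.4.145:9292
    region=${3:-RegionOne}
    for iface in public internal admin; do
        ${OPENSTACK:-openstack} endpoint create --region "$region" \
            "$service_type" "$iface" "$url" || return 1
    done
}
```

For example, create_endpoints compute http://192.168.4.145:8774/v2.1 or create_endpoints network http://192.168.4.145:9696 replaces the three separate commands in 10.2 or 12.2.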

9.3 Install the Glance packages

Install the package and edit the Glance configuration files.

[root@localhost ~]# yum install -y openstack-glance

[root@localhost ~]# vi /etc/glance/glance-api.conf

[database]

connection = mysql+pymysql://glance:tera123@192.168.4.145/glance

[glance_store]

stores = file,http

default_store = file

[keystone_authtoken]

www_authenticate_uri = http://192.168.4.145:5000

auth_url = http://192.168.4.145:5000

memcached_servers = 192.168.4.145:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = glance

password = 123456

[paste_deploy]

flavor = keystone

[root@localhost ~]# vi /etc/glance/glance-registry.conf

[database]

connection = mysql+pymysql://glance:tera123@192.168.4.145/glance

[keystone_authtoken]

www_authenticate_uri = http://192.168.4.145:5000

auth_url = http://192.168.4.145:5000

memcached_servers = 192.168.4.145:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = glance

password = 123456

[paste_deploy]

flavor = keystone

9.4 Synchronize the database

Populate the Glance database, then start the Glance services and enable them at boot.

[root@localhost ~]# su -s /bin/sh -c "glance-manage db_sync" glance

[root@localhost ~]# systemctl start openstack-glance-api openstack-glance-registry

[root@localhost ~]# systemctl enable openstack-glance-api openstack-glance-registry

9.5 Verify the Glance service

Upload an image to verify that the Glance service works.

[root@localhost ~]# wget http://download.cirros-cloud.net/0.3.4/cirros-0.3.4-x86_64-disk.img

[root@localhost ~]# openstack image create "cirros" --file cirros-0.3.4-x86_64-disk.img --disk-format qcow2 --container-format bare --public

[root@localhost ~]# openstack image list

+--------------------------------------+--------+--------+
| ID                                   | Name   | Status |
+--------------------------------------+--------+--------+
| 6a1ba286-5ef2-409f-af11-5135e21adb4d | cirros | active |
+--------------------------------------+--------+--------+
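Right after openstack image create, an image can briefly report a status such as queued or saving before it becomes active. The following minimal polling sketch uses a hypothetical helper name, wait_for_image_active. It also assumes a hypothetical OPENSTACK variable that defaults to the real openstack CLI and can point at a stub for testing. openstack image show -f value -c status prints only the status field.

```shell
#!/bin/sh
# Hypothetical helper: poll an image until Glance reports it as "active".
# OPENSTACK is an assumption for testing; it defaults to the openstack CLI.
# Usage: wait_for_image_active <name> [tries] [interval_seconds]
wait_for_image_active() {
    name=$1; tries=${2:-30}; interval=${3:-2}
    i=1
    while [ "$i" -le "$tries" ]; do
        status=$(${OPENSTACK:-openstack} image show -f value -c status "$name") || return 1
        [ "$status" = "active" ] && return 0
        # no sleep after the final attempt
        if [ "$i" -lt "$tries" ]; then
            sleep "$interval"
        fi
        i=$((i + 1))
    done
    echo "image $name not active after $tries tries (last status: $status)" >&2
    return 1
}
```

For example, wait_for_image_active cirros 30 2 waits up to about a minute for the cirros image.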

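10 Nova (controller node)

OpenStack Compute hosts and manages cloud computing systems and is a major part of an Infrastructure-as-a-Service (IaaS) system. Its main modules are implemented in Python. Nova consists of the following parts:

- nova-api: accepts and responds to end-user compute API calls. The service supports the OpenStack Compute API, enforces some policies and initiates most orchestration activities, such as running an instance

- nova-api-metadata: accepts metadata requests from instances

- nova-compute: a worker daemon that creates and terminates virtual machine instances through hypervisor APIs

- nova-placement-api: tracks the inventory and usage of each resource provider

- nova-scheduler: takes a virtual machine instance request from the queue and determines which compute host it runs on

- nova-conductor: mediates interactions between the nova-compute service and the database, so that nova-compute does not access the database directly

- nova-consoleauth: authorizes tokens for users that console proxies provide

- nova-novncproxy: provides a proxy for accessing running instances through a VNC connection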

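10.1 Create the databases and grant privileges in MySQL

Create the nova_api, nova, nova_cell0 and placement databases, and grant the nova and placement users access to them.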

[root@localhost ~]# mysql -uroot -p

MariaDB [(none)]> create database nova_api;

MariaDB [(none)]> create database nova;

MariaDB [(none)]> create database nova_cell0;

MariaDB [(none)]> create database placement;

MariaDB[(none)]>GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' identified by 'tera123';

MariaDB[(none)]>GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' identified by 'tera123';

MariaDB[(none)]>GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'openstack' identified by 'tera123';

MariaDB[(none)]>GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' identified by 'tera123';

MariaDB[(none)]>GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' identified by 'tera123';

MariaDB[(none)]>GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'openstack' identified by 'tera123';

MariaDB[(none)]>GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' identified by 'tera123';

MariaDB[(none)]>GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' identified by 'tera123';

MariaDB[(none)]>GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'openstack' identified by 'tera123';

MariaDB[(none)]>GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'localhost' identified by 'tera123';

MariaDB[(none)]>GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'%' identified by 'tera123';

MariaDB[(none)]>GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'openstack' identified by 'tera123';

10.2 Create the Nova user and service

Create the nova user and grant it the admin role in the service project. Then create the compute service and its endpoints in Keystone. At the password prompt, enter the password used in the [keystone_authtoken] section of nova.conf (123456 in this guide).

[root@localhost ~]# openstack user create --domain default --password-prompt nova

[root@localhost ~]# openstack role add --project service --user nova admin

[root@localhost ~]# openstack service create --name nova \
--description "OpenStack Compute" compute

[root@localhost ~]# openstack endpoint create --region RegionOne compute public \
http://192.168.4.145:8774/v2.1

[root@localhost ~]# openstack endpoint create --region RegionOne compute internal \
http://192.168.4.145:8774/v2.1

[root@localhost ~]# openstack endpoint create --region RegionOne compute admin \
http://192.168.4.145:8774/v2.1

10.3 Create the Placement user and service

Create the placement user and grant it the admin role in the service project. Then create the placement service and its endpoints in Keystone. At the password prompt, enter the password used in the [placement] section of nova.conf (123456 in this guide).

[root@localhost ~]# openstack user create --domain default --password-prompt placement

[root@localhost ~]# openstack role add --project service --user placement admin

[root@localhost ~]# openstack service create --name placement \
--description "Placement API" placement

[root@localhost ~]# openstack endpoint create --region RegionOne placement public \
http://192.168.4.145:8778

[root@localhost ~]# openstack endpoint create --region RegionOne placement internal \
http://192.168.4.145:8778

[root@localhost ~]# openstack endpoint create --region RegionOne placement admin \
http://192.168.4.145:8778

10.4 Install the Nova packages

Install the Nova components and edit the configuration file.

[root@localhost ~]# yum install openstack-nova-api openstack-nova-conductor \
openstack-nova-console openstack-nova-novncproxy \
openstack-nova-scheduler openstack-nova-placement-api -y

[root@localhost ~]# vi /etc/nova/nova.conf

[DEFAULT]

my_ip=192.168.4.145

use_neutron=true

firewall_driver=nova.virt.firewall.NoopFirewallDriver

enabled_apis=osapi_compute,metadata

transport_url=rabbit://openstack:tera123@192.168.4.145

[api]

auth_strategy=keystone

[api_database]

connection=mysql+pymysql://nova:tera123@192.168.4.145/nova_api

[database]

connection= mysql+pymysql://nova:tera123@192.168.4.145/nova

[glance]

api_servers= http://192.168.4.145:9292

[keystone_authtoken]

www_authenticate_uri = http://192.168.4.145:5000

auth_url=http://192.168.4.145:5000/v3

memcached_servers= 192.168.4.145:11211

auth_type=password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = 123456

[placement]

region_name = RegionOne

user_domain_name = Default

auth_type=password

auth_url=http://192.168.4.145:5000/v3

project_name=service

project_domain_name=Default

username=placement

password=123456

[placement_database]

connection= mysql+pymysql://placement:tera123@192.168.4.145/placement

[scheduler]

discover_hosts_in_cells_interval= 300

[vnc]

enabled=true

server_listen=$my_ip

server_proxyclient_address=$my_ip

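10.5 Configure the Placement API

Enable access to the Placement API by adding the following configuration to /etc/httpd/conf.d/00-nova-placement-api.conf. The <Directory /usr/bin> block grants Apache access to the nova-placement-api WSGI script.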

[root@localhost ~]# vi /etc/httpd/conf.d/00-nova-placement-api.conf

Listen 8778

<VirtualHost *:8778>

<Directory /usr/bin>

<IfVersion >= 2.4>

Require all granted

</IfVersion>

<IfVersion < 2.4>

Order allow,deny

Allow from all

</IfVersion>

</Directory>

WSGIProcessGroup nova-placement-api

WSGIApplicationGroup %{GLOBAL}

WSGIPassAuthorization On

WSGIDaemonProcess nova-placement-api processes=3 threads=1 user=nova group=nova

WSGIScriptAlias / /usr/bin/nova-placement-api

<IfVersion >= 2.4>

ErrorLogFormat "%M"

</IfVersion>

ErrorLog /var/log/nova/nova-placement-api.log

#SSLEngine On

#SSLCertificateFile ...

#SSLCertificateKeyFile ...

</VirtualHost>

Alias /nova-placement-api /usr/bin/nova-placement-api

<Location /nova-placement-api>

SetHandler wsgi-script

Options +ExecCGI

WSGIProcessGroup nova-placement-api

WSGIApplicationGroup %{GLOBAL}

WSGIPassAuthorization On

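</Location>

Restart httpd so that the new configuration takes effect:

[root@localhost ~]# systemctl restart httpd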

10.6 Synchronize the databases

Populate the nova_api, nova, nova_cell0 and placement databases, and register the cell0 and cell1 cells.

[root@localhost ~]# su -s /bin/sh -c "nova-manage api_db sync" nova

[root@localhost ~]# su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

[root@localhost ~]# su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

[root@localhost ~]# su -s /bin/sh -c "nova-manage db sync" nova

10.7 Verify the Nova service

Verify that cell0 and cell1 are registered correctly, then enable and start the Nova services.

[root@openstack ~]# su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova

+-------+--------------------------------------+---------------------------------------+----------------------------------------------------+----------+

| Name | UUID | Transport URL | Database Connection | Disabled |

+-------+--------------------------------------+---------------------------------------+----------------------------------------------------+----------+

| cell0 | 00000000-0000-0000-0000-000000000000 | none:/ | mysql+pymysql://nova:****@192.168.4.145/nova_cell0 | False |

| cell1 | 510ecc1a-4112-4832-b5a5-4f6d4581c545 | rabbit://openstack:****@192.168.4.145 | mysql+pymysql://nova:****@192.168.4.145/nova | False |

+-------+--------------------------------------+---------------------------------------+----------------------------------------------------+----------+

[root@localhost ~]# systemctl enable openstack-nova-api.service \
openstack-nova-consoleauth openstack-nova-scheduler.service \
openstack-nova-conductor.service openstack-nova-novncproxy.service

[root@localhost ~]# systemctl start openstack-nova-api.service \
openstack-nova-consoleauth openstack-nova-scheduler.service \
openstack-nova-conductor.service openstack-nova-novncproxy.service
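systemctl start can return successfully even for a service that exits shortly after starting, so it is worth checking the units afterwards. The sketch below checks each unit with systemctl is-active. The helper name check_active is hypothetical. The SYSTEMCTL variable is also an assumption, used only to substitute a stub in tests.

```shell
#!/bin/sh
# Hypothetical helper: report which systemd units are not active.
# SYSTEMCTL is an assumption for testing; it defaults to the real systemctl.
# Returns non-zero if any unit is not active.
check_active() {
    failed=0
    for unit in "$@"; do
        # "is-active --quiet" prints nothing and signals the state via exit code
        if ${SYSTEMCTL:-systemctl} is-active --quiet "$unit"; then
            echo "ok:     $unit"
        else
            echo "FAILED: $unit"
            failed=1
        fi
    done
    return "$failed"
}
```

For example: check_active openstack-nova-api openstack-nova-consoleauth openstack-nova-scheduler openstack-nova-conductor openstack-nova-novncproxy. The same call works for the Neutron services started in 12.8 and 13.3.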

11 Nova (compute node)

11.1 Install the Nova packages

Install the Nova component and edit the configuration file.

[root@localhost ~]# yum install openstack-nova-compute -y

[root@localhost ~]# vi /etc/nova/nova.conf

[DEFAULT]

my_ip=192.168.4.144

use_neutron=true

firewall_driver=nova.virt.firewall.NoopFirewallDriver

enabled_apis=osapi_compute,metadata

transport_url=rabbit://openstack:tera123@192.168.4.145

[api]

auth_strategy=keystone

[glance]

api_servers=http://192.168.4.145:9292

[keystone_authtoken]

www_authenticate_uri = http://192.168.4.145:5000

auth_url=http://192.168.4.145:5000/v3

memcached_servers=192.168.4.145:11211

auth_type=password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = 123456

[placement]

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://192.168.4.145:5000/v3

username = placement

password = 123456

[vnc]

enabled=true

server_listen=0.0.0.0

server_proxyclient_address=$my_ip

novncproxy_base_url=http://192.168.4.145:6080/vnc_auto.html

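11.2 Check for hardware virtualization support

Count the CPU flags for hardware virtualization (vmx for Intel VT-x, svm for AMD-V). If the command returns 0, the CPU does not support hardware acceleration, and libvirt must be configured to use QEMU instead of KVM:

[root@localhost ~]# egrep -c '(vmx|svm)' /proc/cpuinfo

[root@localhost ~]# vi /etc/nova/nova.conf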

[libvirt]

virt_type = qemu
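The check above can be turned into a decision. The following sketch prints the virt_type value to use. The helper name pick_virt_type is hypothetical, and it assumes a CPUINFO variable, added only for testing, that defaults to /proc/cpuinfo.

```shell
#!/bin/sh
# Hypothetical helper: choose the [libvirt] virt_type from the CPU flags.
# vmx = Intel VT-x, svm = AMD-V; without either flag, fall back to qemu.
# CPUINFO is an assumption for testing; it defaults to /proc/cpuinfo.
pick_virt_type() {
    if grep -Eq '(vmx|svm)' "${CPUINFO:-/proc/cpuinfo}"; then
        echo kvm
    else
        echo qemu
    fi
}
```

If it prints qemu, set virt_type = qemu in [libvirt] as shown above. If it prints kvm, the default virt_type (kvm) can be kept.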

11.3 Start the Nova services

Enable and start libvirtd and the Nova compute service under systemd.

[root@localhost ~]# systemctl enable libvirtd openstack-nova-compute

[root@localhost ~]# systemctl start libvirtd openstack-nova-compute

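11.4 Discover the compute node (controller node)

On the controller node, confirm that the compute service is registered, then map the compute host into the cell.

[root@localhost ~]# openstack compute service list --service nova-compute

[root@localhost ~]# su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova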

Found 2 cell mappings.

Skipping cell0 since it does not contain hosts.

Getting computes from cell 'cell1': 54e6c270-7390-4390-8702-02b72874c5a7

Checking host mapping for compute host 'compute': 39d80423-6001-4036-a546-5287c1e93ec5

Creating host mapping for compute host 'compute': 39d80423-6001-4036-a546-5287c1e93ec5

Found 1 unmapped computes in cell: 54e6c270-7390-4390-8702-02b72874c5a7

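12 Neutron (controller node)

OpenStack Networking (neutron) lets you create interface devices managed by other OpenStack services and attach them to networks. Plug-ins can be implemented to accommodate different networking equipment and software, which makes the OpenStack architecture and deployment flexible. It includes the following components:

- neutron-server: accepts API requests and routes them to the appropriate OpenStack Networking plug-in for action

- OpenStack Networking plug-ins and agents: the common agents are the L3 agent, the DHCP agent and the plug-in agent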

12.1 Create the database and grant privileges in MySQL

Create the neutron database and grant the neutron user access to it.

[root@localhost ~]# mysql -uroot -p

MariaDB [(none)]> create database neutron;

MariaDB[(none)]>GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' identified by 'tera123';

MariaDB[(none)]>GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' identified by 'tera123';

MariaDB[(none)]>GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'openstack' identified by 'tera123';

12.2 Create the Neutron user and service

Create the neutron user and grant it the admin role in the service project. Then create the network service and its endpoints in Keystone. At the password prompt, enter the password used in the [keystone_authtoken] section of neutron.conf (123456 in this guide).

[root@localhost ~]# openstack user create --domain default --password-prompt neutron

[root@localhost ~]# openstack role add --project service --user neutron admin

[root@localhost ~]# openstack service create --name neutron \
--description "OpenStack Networking" network

[root@localhost ~]# openstack endpoint create --region RegionOne network public \
http://192.168.4.145:9696

[root@localhost ~]# openstack endpoint create --region RegionOne network internal \
http://192.168.4.145:9696

[root@localhost ~]# openstack endpoint create --region RegionOne network admin \
http://192.168.4.145:9696

12.3 Install the Neutron packages

Install the packages and edit the Neutron configuration files.

[root@localhost ~]# yum install openstack-neutron openstack-neutron-ml2 \
openstack-neutron-openvswitch ebtables

[root@localhost ~]# vi /etc/neutron/neutron.conf

[DEFAULT]

auth_strategy = keystone

core_plugin = ml2

service_plugins = router

allow_overlapping_ips = true

notify_nova_on_port_status_changes = true

notify_nova_on_port_data_changes = true

transport_url = rabbit://openstack:tera123@192.168.4.145

[database]

connection = mysql+pymysql://neutron:tera123@192.168.4.145/neutron

[keystone_authtoken]

www_authenticate_uri = http://192.168.4.145:5000

auth_url = http://192.168.4.145:5000

memcached_servers = 192.168.4.145:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = 123456

[nova]

auth_url = http://192.168.4.145:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = nova

password = 123456

[root@localhost ~]# vi /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2]

type_drivers = flat,vlan,vxlan

tenant_network_types = vxlan

mechanism_drivers = openvswitch,l2population

extension_drivers = port_security

[ml2_type_flat]

flat_networks = provider

[ml2_type_vxlan]

vni_ranges = 1:1000

[securitygroup]

firewall_driver = neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

enable_ipset = true

enable_security_group = true

[root@localhost ~]# vi /etc/neutron/plugins/ml2/openvswitch_agent.ini

[agent]

tunnel_types = vxlan

l2_population = true

arp_responder = true

[ovs]

bridge_mappings = provider:br-provider

datapath_type = system

local_ip = 192.168.4.145

[securitygroup]

enable_security_group = True

firewall_driver = neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

[root@localhost ~]# vi /etc/neutron/l3_agent.ini

[DEFAULT]

interface_driver = openvswitch

external_network_bridge =

[root@localhost ~]# vi /etc/neutron/dhcp_agent.ini

[DEFAULT]

interface_driver = openvswitch

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

enable_isolated_metadata = true

[root@localhost ~]# vi /etc/neutron/metadata_agent.ini

[DEFAULT]

nova_metadata_host = 192.168.4.145

metadata_proxy_shared_secret = tera123

[root@localhost ~]# vi /etc/nova/nova.conf

[neutron]

url=http://192.168.4.145:9696

auth_type=password

auth_url=http://192.168.4.145:5000

project_name=service

project_domain_name=default

username=neutron

user_domain_name=default

password=123456

region_name=RegionOne

service_metadata_proxy = true

metadata_proxy_shared_secret = tera123

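12.4 Attach the physical NIC to a bridge

Start the Open vSwitch service first, because ovs-vsctl cannot reach the OVS database otherwise. Then create the br-provider bridge and add the physical NIC eno2 to it.

[root@localhost ~]# systemctl enable openvswitch

[root@localhost ~]# systemctl start openvswitch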

[root@localhost ~]# ovs-vsctl add-br br-provider

[root@localhost ~]# ovs-vsctl add-port br-provider eno2

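12.5 Adjust kernel parameters

Make sure the Linux kernel supports network bridge filters: set the following parameters, load the br_netfilter module, and apply the settings.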

[root@localhost ~]# vi /etc/sysctl.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

[root@localhost ~]# modprobe br_netfilter

[root@localhost ~]# sysctl -p
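Appending with echo >> (as in 6.7) or editing by hand adds a duplicate line each time a step is repeated. The sketch below replaces an existing assignment in place, or appends it once if it is missing. The helper name set_sysctl is hypothetical, and it assumes GNU sed (for sed -i), as shipped with CentOS 7.

```shell
#!/bin/sh
# Hypothetical helper: set a sysctl key in a file idempotently, replacing an
# existing assignment instead of appending a duplicate line.
# Usage: set_sysctl <key> <value> [file]   (file defaults to /etc/sysctl.conf)
set_sysctl() {
    key=$1; value=$2; file=${3:-/etc/sysctl.conf}
    touch "$file" || return 1
    # escape the dots in the key so they match literally in the regex
    pattern=$(printf '%s' "$key" | sed 's/\./\\./g')
    if grep -Eq "^[[:space:]]*${pattern}[[:space:]]*=" "$file"; then
        sed -i "s|^[[:space:]]*${pattern}[[:space:]]*=.*|${key} = ${value}|" "$file"
    else
        echo "${key} = ${value}" >> "$file"
    fi
}
```

For this step, run set_sysctl net.bridge.bridge-nf-call-iptables 1 and set_sysctl net.bridge.bridge-nf-call-ip6tables 1, followed by sysctl -p. The bridge keys exist only once br_netfilter is loaded. One way to load the module at boot, before the sysctl settings are applied, is to list it in a file under /etc/modules-load.d/ (for example, echo br_netfilter > /etc/modules-load.d/br_netfilter.conf).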

12.6 Create the symbolic link

The Networking service initialization scripts expect a symbolic link /etc/neutron/plugin.ini that points to the ML2 plug-in configuration file.

[root@localhost ~]# ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

12.7 Synchronize the database

Populate the Neutron database.

[root@localhost ~]# su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf \
--config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

12.8 Start the Neutron services

Restart the Nova API service so that it picks up the [neutron] settings, then start the Neutron services and enable them under systemd.

[root@localhost ~]# systemctl restart openstack-nova-api

[root@localhost ~]# systemctl start neutron-server neutron-openvswitch-agent \
neutron-dhcp-agent neutron-metadata-agent neutron-l3-agent

[root@localhost ~]# systemctl enable neutron-server neutron-openvswitch-agent \
neutron-dhcp-agent neutron-metadata-agent neutron-l3-agent

13 Neutron (compute node)

13.1 Install the Neutron packages

Install the Neutron packages and edit the configuration files.

[root@localhost ~]# yum -y install openstack-neutron-openvswitch ebtables ipset

[root@localhost ~]# vi /etc/neutron/neutron.conf

[DEFAULT]

state_path = /var/lib/neutron

auth_strategy = keystone

transport_url = rabbit://openstack:tera123@192.168.4.145

[keystone_authtoken]

www_authenticate_uri = http://192.168.4.145:5000

auth_url = http://192.168.4.145:5000

memcached_servers = 192.168.4.145:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = 123456

[oslo_concurrency]

lock_path = $state_path/lock

[root@localhost ~]# vi /etc/neutron/plugins/ml2/openvswitch_agent.ini

[ovs]

local_ip = 192.168.4.144

[agent]

tunnel_types = vxlan

l2_population = True

prevent_arp_spoofing = True

[securitygroup]

enable_security_group = True

firewall_driver = neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

[root@localhost ~]# vi /etc/nova/nova.conf

[neutron]

url=http://192.168.4.145:9696

auth_type=password

auth_url=http://192.168.4.145:5000

project_name=service

project_domain_name=default

username=neutron

user_domain_name = default

password=123456

region_name=RegionOne

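13.2 Adjust kernel parameters

Make sure the Linux kernel supports network bridge filters: set the following parameters, load the br_netfilter module, and apply the settings.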

[root@localhost ~]# vi /etc/sysctl.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

[root@localhost ~]# modprobe br_netfilter

[root@localhost ~]# sysctl -p

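13.3 Start the Neutron service

Restart the Nova compute service so that it picks up the [neutron] settings, then start the Open vSwitch agent and enable it at boot.

[root@localhost ~]# systemctl restart openstack-nova-compute

[root@localhost ~]# systemctl start neutron-openvswitch-agent

[root@localhost ~]# systemctl enable neutron-openvswitch-agent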

13.4 Verify the Neutron services (controller node)

Check that all Neutron agents are alive and up.

[root@localhost ~]# openstack network agent list

+--------------------------------------+--------------------+------------+-------------------+-------+-------+---------------------------+

| ID | Agent Type | Host | Availability Zone | Alive | State | Binary |

+--------------------------------------+--------------------+------------+-------------------+-------+-------+---------------------------+

| 2df818b0-e911-4e5a-8c53-1ae85e387caa | Open vSwitch agent | controller | None | :-) | UP | neutron-openvswitch-agent |

| 8a1711ee-9772-4931-af61-eed89024ff04 | L3 agent | controller | nova | :-) | UP | neutron-l3-agent |

| cff39707-e34b-4cc7-9be0-95bbe281a5b9 | Metadata agent | controller | None | :-) | UP | neutron-metadata-agent |

| e4949796-9f35-40b4-aba0-781ee7ce9a31 | DHCP agent | controller | nova | :-) | UP | neutron-dhcp-agent |

| efd5fa9a-9b0e-447f-8ddc-13755efdd999 | Open vSwitch agent | compute | None | :-) | UP | neutron-openvswitch-agent |

+--------------------------------------+--------------------+------------+-------------------+-------+-------+---------------------------+

14 Horizon (controller node)

14.1 Install the Horizon package

Install the package and edit the configuration files.

[root@localhost ~]# yum install -y openstack-dashboard

[root@localhost ~]# vi /etc/openstack-dashboard/local_settings

OPENSTACK_HOST = "192.168.4.145"

ALLOWED_HOSTS = ['*', 'localhost']

SESSION_ENGINE = 'django.contrib.sessions.backends.cache'

CACHES = {

'default': {

'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',

'LOCATION': '192.168.4.145:11211',

},

}

OPENSTACK_KEYSTONE_URL = "http://%s:5000/v3" % OPENSTACK_HOST

OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True

OPENSTACK_API_VERSIONS = {

"identity": 3,

"image": 2,

"volume": 2,

}

OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = 'Default'

OPENSTACK_KEYSTONE_DEFAULT_ROLE = "user"

OPENSTACK_NEUTRON_NETWORK = {

'enable_router': True,

'enable_quotas': True,

'enable_ipv6': True,

'enable_distributed_router': False,

'enable_ha_router': False,

'enable_fip_topology_check': True,

}

[root@localhost ~]# vi /etc/httpd/conf.d/openstack-dashboard.conf

WSGIDaemonProcess dashboard

WSGIProcessGroup dashboard

WSGISocketPrefix run/wsgi

WSGIApplicationGroup %{GLOBAL}

WSGIScriptAlias /dashboard /usr/share/openstack-dashboard/openstack_dashboard/wsgi/django.wsgi

Alias /dashboard/static /usr/share/openstack-dashboard/static

<Directory /usr/share/openstack-dashboard/openstack_dashboard/wsgi>

Options All

AllowOverride All

Require all granted

</Directory>

<Directory /usr/share/openstack-dashboard/static>

Options All

AllowOverride All

Require all granted

</Directory>

14.2 Start Horizon

Restart httpd and memcached to load the dashboard.

[root@localhost ~]# systemctl restart httpd memcached

14.3 Verify Horizon

Log in at http://192.168.4.145/dashboard to verify the Horizon component.

15 References

OpenStack official website: www.openstack.org