Function Verification: Offloading OVS, vFW, and SSL Encryption/Decryption to the Helium SmartNIC

1 Solution overview

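This document describes a solution based on the Helium SmartNIC (hereafter "the SmartNIC") and verifies its ability to offload VNF functions. The verification covers the following three functions: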

- Offloading OVS to the SmartNIC

- Offloading a VM-based VNF (vFW) to the SmartNIC

- Offloading a container-based VNF (SSL encryption/decryption) to the SmartNIC

2 Hardware and software environment

The hardware and software used in the verification are listed in Table 2-1 and Table 2-2.

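| Name | Model | Hardware specifications | Quantity |
|---|---|---|---|
| SmartNIC | EC2004Y | See product brochure | 1 |
| Server | X86 | Must accommodate full-height NICs | 2 |
| Optical module | 25G | SFP28 | 2 |
| Optical fiber | Multimode | Suitable for 10G/25G | 1 |

Table 2-1: Hardware environment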

| Software | Version | Notes |
|---|---|---|
| Host operating system | CentOS 7.8.2003 | None |
| Installation package | helium-V1.0.zip | Download from support.asterfusion.com |

Table 2-2: Software environment

3 Verification approach and procedure

3.1 Offloading OVS to the SmartNIC

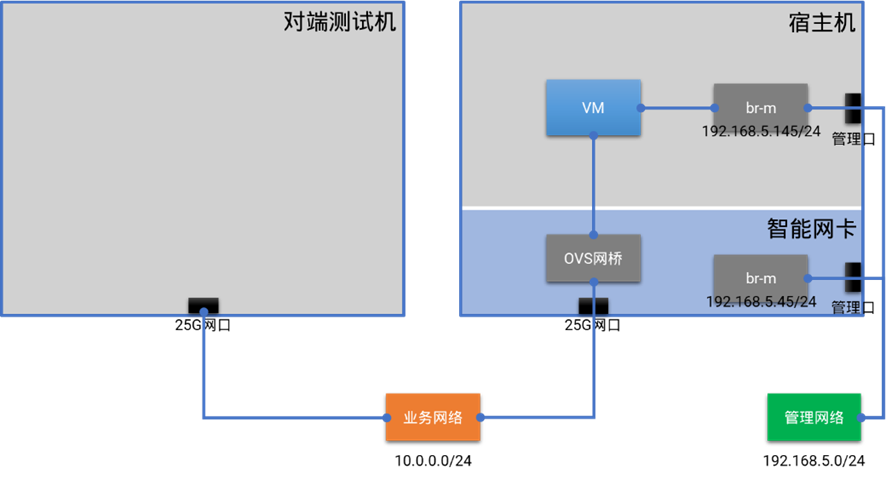

3.1.1 Verification approach

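The SmartNIC ships with an operating system preinstalled. Before testing, perform the basic configuration: set up the management port, and install and debug the drivers.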

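After this basic configuration, run OVS on the SmartNIC and create a test bridge, then start two VMs on the host and connect them to that bridge. Testing showed that the two VMs can communicate through the OVS bridge on the SmartNIC, which demonstrates that OVS still provides service normally after being offloaded to the SmartNIC.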

3.1.2 Verification procedure

3.1.2.1 Basic configuration of the host and the SmartNIC

# Modify the kernel boot parameters

# Edit /etc/default/grub and add the following to GRUB_CMDLINE_LINUX (as shown in the file below):

intel_iommu=on iommu=pt pci=assign-busses pcie_acs_override=downstream

[root@asterfusion ~]# cat /etc/default/grub

GRUB_TIMEOUT=5

GRUB_DISTRIBUTOR="$(sed 's, release .*$,,g' /etc/system-release)"

GRUB_DEFAULT=saved

GRUB_DISABLE_SUBMENU=true

GRUB_TERMINAL_OUTPUT="console"

GRUB_CMDLINE_LINUX="intel_iommu=on iommu=pt pci=assign-busses pcie_acs_override=downstream crashkernel=auto rd.lvm.lv=centos/root rd.lvm.lv=centos/swap rhgb quiet"

GRUB_DISABLE_RECOVERY="true"

# Finally, regenerate the GRUB configuration with the following command, then reboot the host

[root@asterfusion ~]#grub2-mkconfig -o /boot/grub2/grub.cfg
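After the reboot, the running kernel's command line can be checked against the four parameters above. A minimal bash sketch; `missing_kernel_params` is a hypothetical helper of our own, not part of the Helium tooling. It prints every required parameter that does not appear as a whole token:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print each IOMMU-related boot parameter used in this
# guide that is missing from the given kernel command line.
missing_kernel_params() {
    local cmdline=" $1 "   # pad with spaces so tokens at either end still match
    local required=(intel_iommu=on iommu=pt pci=assign-busses pcie_acs_override=downstream)
    local p
    for p in "${required[@]}"; do
        case "$cmdline" in
            *" $p "*) ;;     # present as a whole space-separated token
            *) echo "$p" ;;  # missing: report it
        esac
    done
}

# Usage after reboot (no output means all parameters are active):
#   missing_kernel_params "$(cat /proc/cmdline)"
```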

# On the SmartNIC, configure the upstream PCI bus address

root@OCTEONTX:~# ifconfig mvmgmt0 down 2>/dev/null

root@OCTEONTX:~# echo 0 > /sys/bus/pci/devices/0000\:05\:00.0/sriov_numvfs

root@OCTEONTX:~# rmmod mgmt_net 2>/dev/null

root@OCTEONTX:~# rmmod pcie_ep

root@OCTEONTX:~# rmmod dpi_dma

root@OCTEONTX:~# echo 1 > /sys/bus/pci/devices/0000\:05\:00.0/sriov_numvfs

root@OCTEONTX:~# modprobe dpi_dma

# Obtain the NIC's host-sid with lspci -tv | grep -C2 b200; on this machine the bus number is 03, so host_sid below is 0x30300

root@OCTEONTX:~# modprobe pcie_ep host_sid=0x30300 pem_num=0 epf_num=0
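The comment above derives host_sid 0x30300 from bus number 03, which is the only data point this guide gives. If, as that example suggests, the value is the bus number placed in both bits 16-23 and bits 8-15, it can be computed as below. This formula is an assumption inferred from that single example, not taken from Marvell or Asterfusion documentation; confirm it before using a different bus. `host_sid_from_bus` is a hypothetical name.

```bash
# Hypothetical helper. ASSUMPTION: host_sid = (bus << 16) | (bus << 8),
# inferred only from "bus 03 -> 0x30300" in this guide.
host_sid_from_bus() {
    local bus=$((16#$1))   # host PCI bus number, given in hex (e.g. "03")
    printf '0x%x\n' $(( (bus << 16) | (bus << 8) ))
}

# Example:
#   modprobe pcie_ep host_sid="$(host_sid_from_bus 03)" pem_num=0 epf_num=0
```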

# Configure the mvmgmt0 port

root@OCTEONTX:~#modprobe mgmt_net

root@OCTEONTX:~#ifconfig mvmgmt0 12.12.12.12

root@OCTEONTX:~#ifconfig mvmgmt0 up

# Load the NIC driver on the host

[root@asterfusion ~]#tar -xvf Helium-Driver-V1.0R1.tar.gz

[root@asterfusion ~]#cd Helium-ep-driver

[root@asterfusion ~]#make

[root@asterfusion ~]#insmod ./drivers/legacy/modules/driver/src/host/linux/kernel/drv/octeon_drv.ko num_vfs=4

[root@asterfusion~]#insmod ./drivers/mgmt_net/mgmt_net.ko

[root@asterfusion~]#ifconfig mvmgmt0 12.12.12.1

[root@asterfusion~]#ifconfig mvmgmt0 up

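# After the configuration above, check the virtual port information on both the SmartNIC and the host

# On the SmartNIC, confirm that loading has completed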

root@OCTEONTX:~#lspci -nn -d 177d:a0f7

# On the host, confirm that the driver has loaded

[root@asterfusion~]#lspci | grep b203

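# If loading fails on the host, or the virtual ports need to be shut down or changed, unload the drivers first and then reload them following the steps above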

[root@asterfusion~]#ifconfig mvmgmt0 down

[root@asterfusion~]#rmmod mgmt_net

[root@asterfusion~]#rmmod octeon_drv

# Note: always load the driver on the SmartNIC first, and only then on the host
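Because the host driver must only be loaded once the SmartNIC side is ready, and the lspci checks above are the readiness signal, a small bash sketch can count matching devices and poll until the expected number appears. `count_devices` and `wait_for_devices` are hypothetical names; an expected count of 4 on the host assumes the `num_vfs=4` passed to octeon_drv.ko above.

```bash
# Hypothetical helpers for the lspci checks above.

# Count lspci lines (read from stdin) that contain the device ID as a whole word.
count_devices() {
    grep -c -w -- "$1"
}

# Poll lspci until at least $2 functions with device ID $1 are present,
# giving up after $3 seconds (default 30).
wait_for_devices() {
    local id=$1 want=$2 timeout=${3:-30} n=0 i
    for ((i = 0; i < timeout; i++)); do
        n=$(lspci | count_devices "$id")
        [ "$n" -ge "$want" ] && return 0
        sleep 1
    done
    echo "found only $n device(s) with ID $id after ${timeout}s" >&2
    return 1
}

# Example on the host, after insmod octeon_drv.ko num_vfs=4:
#   wait_for_devices b203 4 && echo "VFs ready"
```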

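3.1.2.2 Install OVS and related services

Both the host and the SmartNIC need DPDK and OVS installed.

# Copy the helium-V1.0.zip package to a directory on the host and extract it.

[root@asterfusion ~]# unzip helium-V1.0.zip

# Install DPDK on the host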

[root@asterfusion~]# tar -zxvf helium-v1.0/Helium-DPDK19.11-V1.0R1.tar.gz -C helium-v1.0

[root@asterfusion~]# cd helium-v1.0/dpdk-19.11

[root@asterfusion~]# export RTE_SDK=$PWD

[root@asterfusion~]# export RTE_TARGET=build

[root@asterfusion~]# make config T=x86_64-native-linuxapp-gcc

[root@asterfusion~]# make

# Check the PCI addresses of the VF ports

[root@asterfusion~]#lspci | grep b203

# Load the vfio-pci driver on the host

[root@asterfusion~]#modprobe vfio-pci

[root@asterfusion~]#echo 1 > /sys/module/vfio/parameters/enable_unsafe_noiommu_mode

[root@asterfusion~]# helium-v1.0/dpdk-19.11/usertools/dpdk-devbind.py -b vfio-pci 0000:03:02.0 0000:03:02.1 0000:03:02.2 0000:03:02.3

# Install virtio-forwarder and its dependencies on the host

[root@asterfusion~]# yum install protobuf.x86_64 -y

[root@asterfusion~]# yum install protobuf-c.x86_64 -y

[root@asterfusion~]# yum install czmq.x86_64 -y

[root@asterfusion~]# helium-v1.0/Helium-VirtioForwarder-V1.0R1-intel-ivb.bin

# Reserve hugepages on the host

[root@asterfusion~]# sysctl vm.nr_hugepages=20480
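How many 2 MiB pages the guests need follows from their XML in Section 3.1.2.5: each VM declares 2194432 KiB of memory backed by 2048 KiB pages. A minimal bash sketch of the round-up arithmetic (`hugepages_needed` is a hypothetical name):

```bash
# Hypothetical helper: hugepages needed to back a guest, rounding up.
# Both arguments are in KiB, matching libvirt's <memory unit='KiB'> and
# <page size='2048' unit='KiB'>.
hugepages_needed() {
    local mem_kib=$1 page_kib=$2
    echo $(( (mem_kib + page_kib - 1) / page_kib ))
}

# Two VMs of 2194432 KiB each, backed by 2 MiB pages:
echo $(( 2 * $(hugepages_needed 2194432 2048) ))   # prints 2144
```

The 20480 pages reserved above (40 GiB, assuming the default 2 MiB page size on x86_64) therefore leave ample headroom beyond the two guests; `grep Huge /proc/meminfo` shows how many pages the kernel actually reserved.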

# Start the virtio-forwarder service

[root@asterfusion~]# systemctl start virtio-forwarder.service

# Add the vhost-user sockets and their VF ports

[root@asterfusion~]# /usr/local/lib/virtio-forwarder/virtioforwarder_port_control add_sock --vhost-path="/tmp/vhost1.sock" --pci-addr="03:02.0" --tso=on --mtu=9000

[root@asterfusion~]# /usr/local/lib/virtio-forwarder/virtioforwarder_port_control add_sock --vhost-path="/tmp/vhost2.sock" --pci-addr="03:02.1" --tso=on --mtu=9000

# Verify the added port configuration

[root@asterfusion~]# /usr/local/lib/virtio-forwarder/virtioforwarder_stats -d 0
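The two `add_sock` calls above follow a fixed pattern: `/tmp/vhostK.sock` is paired with VF function K-1 on PCI slot 03:02. A bash sketch that prints the commands for the first N VFs so they can be reviewed before running; `vf_port_cmds` is a hypothetical name, and the slot prefix 03:02 is specific to this test host.

```bash
# Hypothetical generator for the virtio-forwarder add_sock commands used above.
PORT_CTL=/usr/local/lib/virtio-forwarder/virtioforwarder_port_control

vf_port_cmds() {
    local slot=$1 count=$2 i   # slot: host PCI bus:device of the VFs, e.g. 03:02
    for ((i = 0; i < count; i++)); do
        printf '%s add_sock --vhost-path="/tmp/vhost%d.sock" --pci-addr="%s.%d" --tso=on --mtu=9000\n' \
            "$PORT_CTL" "$((i + 1))" "$slot" "$i"
    done
}

# Review the output, then execute it:
#   vf_port_cmds 03:02 2 | sh
```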

# Copy the helium-V1.0.zip package to the /data directory on the SmartNIC and extract it.

root@OCTEONTX:/data# unzip helium-V1.0.zip

# Install DPDK on the SmartNIC

root@OCTEONTX:/data# tar -zxvf helium-v1.0/Helium-DPDK19.11-V1.0R1.tar.gz -C helium-v1.0

root@OCTEONTX:/data# cd helium-v1.0/dpdk-19.11

root@OCTEONTX:/data/helium-v1.0/dpdk-19.11# export RTE_SDK=$PWD

root@OCTEONTX:/data/helium-v1.0/dpdk-19.11# export RTE_TARGET=build

root@OCTEONTX:/data/helium-v1.0/dpdk-19.11# make config T=arm64-octeontx2-linux-gcc

root@OCTEONTX:/data/helium-v1.0/dpdk-19.11# make -j8

# Reserve hugepages on the SmartNIC

root@OCTEONTX:/data# sysctl vm.nr_hugepages=32

# Bind the physical port and the two VFs to vfio-pci

root@OCTEONTX:/data# /data/helium-v1.0/dpdk-19.11/usertools/dpdk-devbind.py -b vfio-pci 0002:02:00.0 0002:0f:00.2 0002:0f:00.3

# Install OVS on the SmartNIC

root@OCTEONTX:/data# chmod +x helium-v1.0/Helium-OvS-V1.0R1.bin

root@OCTEONTX:/data# ./helium-v1.0/Helium-OvS-V1.0R1.bin

3.1.2.3 Verify OVS

# Start OVS on the SmartNIC

root@OCTEONTX:/data# cd ovs_install

root@OCTEONTX:/data/ovs_install# chmod +x ovs_start.sh

root@OCTEONTX:/data/ovs_install# ./ovs_start.sh

# Verify that DPDK is initialized and check the OVS and DPDK versions

root@OCTEONTX:/data/ovs_install# ovs-vsctl get Open_vSwitch . dpdk_initialized

true

root@OCTEONTX:/data/ovs_install# ovs-vsctl get Open_vSwitch . dpdk_version

"DPDK 19.11.0"

root@OCTEONTX:/data/ovs_install# ovs-vswitchd --version

ovs-vswitchd (Open vSwitch) 2.11.1

DPDK 19.11.0

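3.1.2.4 Configure the management and service network bridges on the SmartNIC side

# Create and configure the management bridge, and move the SmartNIC's management IP onto it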

root@OCTEONTX:~# ovs-vsctl add-br br-m -- set bridge br-m datapath_type=netdev

root@OCTEONTX:~# ip add del dev eth4 192.168.5.45/24

root@OCTEONTX:~# ovs-vsctl add-port br-m eth4

root@OCTEONTX:~# ip link set dev br-m up

root@OCTEONTX:~# ip add add dev br-m 192.168.5.45/24

root@OCTEONTX:~# ip route add default via 192.168.5.1 dev br-m

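# Create and configure the service bridge, and attach the SmartNIC's physical port eth0 to it

# Check the PCI addresses of the SmartNIC's physical ports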

root@OCTEONTX:/data/helium-v1.0# lspci|grep a063

0002:02:00.0 Ethernet controller: Cavium, Inc. Device a063 (rev 09)

0002:03:00.0 Ethernet controller: Cavium, Inc. Device a063 (rev 09)

0002:04:00.0 Ethernet controller: Cavium, Inc. Device a063 (rev 09)

0002:05:00.0 Ethernet controller: Cavium, Inc. Device a063 (rev 09)

root@OCTEONTX:~# ovs-vsctl add-br br-net -- set bridge br-net datapath_type=netdev

root@OCTEONTX:~# ovs-vsctl add-port br-net eth0 -- set Interface eth0 type=dpdk options:dpdk-devargs=0002:02:00.0 mtu_request=9000

root@OCTEONTX:~# ip link set dev br-net up

3.1.2.5 Create two VMs on the host side and connect them to the service bridge on the SmartNIC side

# Edit each VM's XML configuration file to add a vhost-user virtual NIC.

# centos-00:

<domain type='kvm' id='16'>

<name>centos-00</name>

<uuid>549a2cc5-0b8b-4b7a-acd5-6171d0e85000</uuid>

<memory unit='KiB'>2194432</memory>

<currentMemory unit='KiB'>2194304</currentMemory>

<memoryBacking>

<hugepages>

<page size='2048' unit='KiB' nodeset='0'/>

</hugepages>

</memoryBacking>

<vcpu placement='static'>4</vcpu>

<resource>

<partition>/machine</partition>

</resource>

<os>

<type arch='x86_64' machine='pc-i440fx-rhel7.6.0'>hvm</type>

<boot dev='hd'/>

</os>

<features>

<acpi/>

<apic/>

<vmport state='off'/>

</features>

<cpu mode='custom' match='exact' check='full'>

<model fallback='forbid'>Haswell-noTSX-IBRS</model>

<vendor>Intel</vendor>

<feature policy='require' name='vme'/>

<feature policy='require' name='ss'/>

<feature policy='require' name='f16c'/>

<feature policy='require' name='rdrand'/>

<feature policy='require' name='hypervisor'/>

<feature policy='require' name='arat'/>

<feature policy='require' name='tsc_adjust'/>

<feature policy='require' name='md-clear'/>

<feature policy='require' name='stibp'/>

<feature policy='require' name='ssbd'/>

<feature policy='require' name='xsaveopt'/>

<feature policy='require' name='pdpe1gb'/>

<feature policy='require' name='abm'/>

<feature policy='require' name='ibpb'/>

<numa>

<cell id='0' cpus='0-3' memory='2194432' unit='KiB' memAccess='shared'/>

</numa>

</cpu>

<clock offset='utc'>

<timer name='rtc' tickpolicy='catchup'/>

<timer name='pit' tickpolicy='delay'/>

<timer name='hpet' present='no'/>

</clock>

<on_poweroff>destroy</on_poweroff>

<on_reboot>restart</on_reboot>

<on_crash>destroy</on_crash>

<pm>

<suspend-to-mem enabled='no'/>

<suspend-to-disk enabled='no'/>

</pm>

<devices>

<emulator>/usr/libexec/qemu-kvm</emulator>

<disk type='file' device='disk'>

<driver name='qemu' type='qcow2'/>

<source file='/home/CentOS-7-x86_64-GenericCloud-01.qcow2'/>

<backingStore/>

<target dev='hda' bus='ide'/>

<alias name='ide0-0-0'/>

<address type='drive' controller='0' bus='0' target='0' unit='0'/>

</disk>

<controller type='usb' index='0' model='ich9-ehci1'>

<alias name='usb'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x05' function='0x7'/>

</controller>

<controller type='usb' index='0' model='ich9-uhci1'>

<alias name='usb'/>

<master startport='0'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x05' function='0x0' multifunction='on'/>

</controller>

<controller type='usb' index='0' model='ich9-uhci2'>

<alias name='usb'/>

<master startport='2'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x05' function='0x1'/>

</controller>

<controller type='usb' index='0' model='ich9-uhci3'>

<alias name='usb'/>

<master startport='4'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x05' function='0x2'/>

</controller>

<controller type='pci' index='0' model='pci-root'>

<alias name='pci.0'/>

</controller>

<controller type='pci' index='1' model='pci-bridge'>

<model name='pci-bridge'/>

<target chassisNr='1'/>

<alias name='pci.1'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x08' function='0x0'/>

</controller>

<controller type='pci' index='2' model='pci-bridge'>

<model name='pci-bridge'/>

<target chassisNr='2'/>

<alias name='pci.2'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x09' function='0x0'/>

</controller>

<controller type='pci' index='3' model='pci-bridge'>

<model name='pci-bridge'/>

<target chassisNr='3'/>

<alias name='pci.3'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x0a' function='0x0'/>

</controller>

<controller type='ide' index='0'>

<alias name='ide'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x1'/>

</controller>

<controller type='virtio-serial' index='0'>

<alias name='virtio-serial0'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x06' function='0x0'/>

</controller>

<interface type='vhostuser'>

<source type='unix' path='/tmp/vhost1.sock' mode='server'/>

<model type='virtio'/>

<mtu size='9000'/>

</interface>

<serial type='pty'>

<source path='/dev/pts/4'/>

<target type='isa-serial' port='0'>

<model name='isa-serial'/>

</target>

<alias name='serial0'/>

</serial>

<console type='pty' tty='/dev/pts/4'>

<source path='/dev/pts/4'/>

<target type='serial' port='0'/>

<alias name='serial0'/>

</console>

<channel type='spicevmc'>

<target type='virtio' name='com.redhat.spice.0' state='disconnected'/>

<alias name='channel0'/>

<address type='virtio-serial' controller='0' bus='0' port='1'/>

</channel>

<input type='mouse' bus='ps2'>

<alias name='input0'/>

</input>

<input type='keyboard' bus='ps2'>

<alias name='input1'/>

</input>

<graphics type='spice' port='5900' autoport='yes' listen='127.0.0.1'>

<listen type='address' address='127.0.0.1'/>

<image compression='off'/>

</graphics>

<sound model='ich6'>

<alias name='sound0'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x04' function='0x0'/>

</sound>

<video>

<model type='qxl' ram='65536' vram='65536' vgamem='16384' heads='1' primary='yes'/>

<alias name='video0'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x0'/>

</video>

<redirdev bus='usb' type='spicevmc'>

<alias name='redir0'/>

<address type='usb' bus='0' port='1'/>

</redirdev>

<redirdev bus='usb' type='spicevmc'>

<alias name='redir1'/>

<address type='usb' bus='0' port='2'/>

</redirdev>

<memballoon model='virtio'>

<alias name='balloon0'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x0'/>

</memballoon>

</devices>

<seclabel type='dynamic' model='dac' relabel='yes'>

<label>+107:+107</label>

<imagelabel>+107:+107</imagelabel>

</seclabel>

</domain>

# centos-01:

<domain type='kvm' id='15'>

<name>centos-01</name>

<uuid>549a2cc5-0b8b-4b7a-acd5-6171d0e85001</uuid>

<memory unit='KiB'>2194432</memory>

<currentMemory unit='KiB'>2194304</currentMemory>

<memoryBacking>

<hugepages>

<page size='2048' unit='KiB' nodeset='0'/>

</hugepages>

</memoryBacking>

<vcpu placement='static'>4</vcpu>

<resource>

<partition>/machine</partition>

</resource>

<os>

<type arch='x86_64' machine='pc-i440fx-rhel7.6.0'>hvm</type>

<boot dev='hd'/>

</os>

<features>

<acpi/>

<apic/>

<vmport state='off'/>

</features>

<cpu mode='custom' match='exact' check='full'>

<model fallback='forbid'>Haswell-noTSX-IBRS</model>

<vendor>Intel</vendor>

<feature policy='require' name='vme'/>

<feature policy='require' name='ss'/>

<feature policy='require' name='f16c'/>

<feature policy='require' name='rdrand'/>

<feature policy='require' name='hypervisor'/>

<feature policy='require' name='arat'/>

<feature policy='require' name='tsc_adjust'/>

<feature policy='require' name='md-clear'/>

<feature policy='require' name='stibp'/>

<feature policy='require' name='ssbd'/>

<feature policy='require' name='xsaveopt'/>

<feature policy='require' name='pdpe1gb'/>

<feature policy='require' name='abm'/>

<feature policy='require' name='ibpb'/>

<numa>

<cell id='0' cpus='0-3' memory='2194432' unit='KiB' memAccess='shared'/>

</numa>

</cpu>

<clock offset='utc'>

<timer name='rtc' tickpolicy='catchup'/>

<timer name='pit' tickpolicy='delay'/>

<timer name='hpet' present='no'/>

</clock>

<on_poweroff>destroy</on_poweroff>

<on_reboot>restart</on_reboot>

<on_crash>destroy</on_crash>

<pm>

<suspend-to-mem enabled='no'/>

<suspend-to-disk enabled='no'/>

</pm>

<devices>

<emulator>/usr/libexec/qemu-kvm</emulator>

<disk type='file' device='disk'>

<driver name='qemu' type='qcow2'/>

<source file='/home/CentOS-7-x86_64-GenericCloud-02.qcow2'/>

<backingStore/>

<target dev='hda' bus='ide'/>

<alias name='ide0-0-0'/>

<address type='drive' controller='0' bus='0' target='0' unit='0'/>

</disk>

<controller type='usb' index='0' model='ich9-ehci1'>

<alias name='usb'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x05' function='0x7'/>

</controller>

<controller type='usb' index='0' model='ich9-uhci1'>

<alias name='usb'/>

<master startport='0'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x05' function='0x0' multifunction='on'/>

</controller>

<controller type='usb' index='0' model='ich9-uhci2'>

<alias name='usb'/>

<master startport='2'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x05' function='0x1'/>

</controller>

<controller type='usb' index='0' model='ich9-uhci3'>

<alias name='usb'/>

<master startport='4'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x05' function='0x2'/>

</controller>

<controller type='pci' index='0' model='pci-root'>

<alias name='pci.0'/>

</controller>

<controller type='ide' index='0'>

<alias name='ide'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x1'/>

</controller>

<controller type='virtio-serial' index='0'>

<alias name='virtio-serial0'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x06' function='0x0'/>

</controller>

<interface type='vhostuser'>

<source type='unix' path='/tmp/vhost2.sock' mode='server'/>

<model type='virtio'/>

<mtu size='9000'/>

</interface>

<serial type='pty'>

<source path='/dev/pts/5'/>

<target type='isa-serial' port='0'>

<model name='isa-serial'/>

</target>

<alias name='serial0'/>

</serial>

<console type='pty' tty='/dev/pts/5'>

<source path='/dev/pts/5'/>

<target type='serial' port='0'/>

<alias name='serial0'/>

</console>

<channel type='spicevmc'>

<target type='virtio' name='com.redhat.spice.0' state='disconnected'/>

<alias name='channel0'/>

<address type='virtio-serial' controller='0' bus='0' port='1'/>

</channel>

<input type='mouse' bus='ps2'>

<alias name='input0'/>

</input>

<input type='keyboard' bus='ps2'>

<alias name='input1'/>

</input>

<graphics type='spice' port='5901' autoport='yes' listen='127.0.0.1'>

<listen type='address' address='127.0.0.1'/>

<image compression='off'/>

</graphics>

<sound model='ich6'>

<alias name='sound0'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x04' function='0x0'/>

</sound>

<video>

<model type='qxl' ram='65536' vram='65536' vgamem='16384' heads='1' primary='yes'/>

<alias name='video0'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x0'/>

</video>

<redirdev bus='usb' type='spicevmc'>

<alias name='redir0'/>

<address type='usb' bus='0' port='1'/>

</redirdev>

<redirdev bus='usb' type='spicevmc'>

<alias name='redir1'/>

<address type='usb' bus='0' port='2'/>

</redirdev>

<memballoon model='virtio'>

<alias name='balloon0'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x0'/>

</memballoon>

</devices>

<seclabel type='dynamic' model='dac' relabel='yes'>

<label>+107:+107</label>

<imagelabel>+107:+107</imagelabel>

</seclabel>

</domain>

# Download the CentOS-7-x86_64-GenericCloud-XXXX.qcow2 images separately.

# Define and start the two CentOS 7 VMs.

[root@asterfusion ~]# virsh define centos-00.xml

[root@asterfusion ~]# virsh define centos-01.xml

[root@asterfusion ~]# virsh start centos-00

[root@asterfusion ~]# virsh start centos-01

[root@asterfusion ~]# virsh list --all

Id Name State

----------------------------------------------------

13 centos-00 running

14 centos-01 running

# Connect the VMs to the management bridge on the host side.

[root@asterfusion ~]# ip link add centos-00-m type veth peer name centos-00-m-s

[root@asterfusion ~]# ip link add centos-01-m type veth peer name centos-01-m-s

[root@asterfusion ~]# ovs-vsctl add-br br-m

[root@asterfusion ~]# ip link set dev br-m up

[root@asterfusion ~]# ip address add dev br-m 192.168.5.145/24

[root@asterfusion ~]# ovs-vsctl add-port br-m eno2

[root@asterfusion ~]# ip link set dev eno2 up

[root@asterfusion ~]# ovs-vsctl add-port br-m centos-00-m-s

[root@asterfusion ~]# ovs-vsctl add-port br-m centos-01-m-s

[root@asterfusion ~]# virsh attach-interface centos-00 --type direct --source centos-00-m --config

[root@asterfusion ~]# virsh attach-interface centos-00 --type direct --source centos-00-m --live

[root@asterfusion ~]# virsh attach-interface centos-01 --type direct --source centos-01-m --config

[root@asterfusion ~]# virsh attach-interface centos-01 --type direct --source centos-01-m --live

[root@asterfusion ~]# ip link set dev centos-00-m up

[root@asterfusion ~]# ip link set dev centos-01-m up

[root@asterfusion ~]# ip link set dev centos-00-m-s up

[root@asterfusion ~]# ip link set dev centos-01-m-s up
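The management wiring above repeats one pattern per VM (a veth pair, one end added to an OVS bridge, the other end attached to the VM as a macvtap interface, both ends brought up), and Section 3.2 repeats it for firewall-00. A bash sketch that wraps it; `attach_vm_port` is a hypothetical name, and setting `RUN=echo` turns it into a dry run that only prints the commands. The peer suffix defaults to `-s` as used on the host; Section 3.2 uses `-sw`.

```bash
# Hypothetical wrapper for the veth + OVS + virsh steps.
# Usage: attach_vm_port VM IFNAME BRIDGE [PEER_SUFFIX]
# Set RUN=echo to print the commands instead of running them.
attach_vm_port() {
    local vm=$1 ifname=$2 bridge=$3 suffix=${4:-"-s"}
    local peer=${ifname}${suffix}   # switch-side end of the veth pair
    ${RUN:-} ip link add "$ifname" type veth peer name "$peer"
    ${RUN:-} ip link set dev "$ifname" up
    ${RUN:-} ip link set dev "$peer" up
    ${RUN:-} ovs-vsctl add-port "$bridge" "$peer"
    # "direct" = macvtap on the VM side; --config persists it, --live hot-plugs it
    ${RUN:-} virsh attach-interface "$vm" --type direct --source "$ifname" --config
    ${RUN:-} virsh attach-interface "$vm" --type direct --source "$ifname" --live
}

# Equivalent to the host-side steps above:
#   attach_vm_port centos-00 centos-00-m br-m
#   attach_vm_port centos-01 centos-01-m br-m
```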

# Configure a service IP on each VM.

# centos-00:

[root@centos-00 ~]# ip link set dev eth0 up

[root@centos-00 ~]# ip add add dev eth0 172.0.0.100/24

# centos-01:

[root@centos-01 ~]# ip link set dev eth0 up

[root@centos-01 ~]# ip add add dev eth0 172.0.0.200/24

# Configure a management IP on each VM.

# centos-00:

[root@centos-00 ~]# ip link set dev eth1 up

[root@centos-00 ~]# ip add add dev eth1 192.168.5.155/24

[root@centos-00 ~]# ip route add default via 192.168.5.1 dev eth1

# centos-01:

[root@centos-01 ~]# ip link set dev eth1 up

[root@centos-01 ~]# ip add add dev eth1 192.168.5.165/24

[root@centos-01 ~]# ip route add default via 192.168.5.1 dev eth1

# List the VF PCI addresses on the SmartNIC side. Starting from the second entry, the listed VFs map one-to-one to the host-side VFs.

root@OCTEONTX:/data/helium-v1.0# lspci -nn -d 177d:a0f7

0002:0f:00.1 System peripheral [0880]: Cavium, Inc. Device [177d:a0f7]

0002:0f:00.2 System peripheral [0880]: Cavium, Inc. Device [177d:a0f7]

0002:0f:00.3 System peripheral [0880]: Cavium, Inc. Device [177d:a0f7]

0002:0f:00.4 System peripheral [0880]: Cavium, Inc. Device [177d:a0f7]

0002:0f:00.5 System peripheral [0880]: Cavium, Inc. Device [177d:a0f7]

# On the SmartNIC side, attach the two VFs used by the VMs to the service bridge br-net.

root@OCTEONTX:~# ovs-vsctl add-port br-net sdp1 -- set Interface sdp1 type=dpdk options:dpdk-devargs=0002:0f:00.2 mtu_request=9000

root@OCTEONTX:~# ovs-vsctl add-port br-net sdp2 -- set Interface sdp2 type=dpdk options:dpdk-devargs=0002:0f:00.3 mtu_request=9000
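The VF correspondence described above (skip the first a0f7 function, then pair the remaining ones in order with host VFs 03:02.0, 03:02.1, ...) can be made explicit with a bash sketch. Both function names are hypothetical; the `sdpN` port names and the MTU simply follow the commands above.

```bash
# Hypothetical helpers. Input: output of "lspci -nn -d 177d:a0f7" on stdin.

# Print "<host VF index> <SmartNIC PCI address>", skipping the first function.
nic_vf_map() {
    awk 'NR > 1 { printf "%d %s\n", NR - 2, $1 }'
}

# Print the ovs-vsctl commands that attach each mapped VF to a bridge as sdpN.
sdp_port_cmds() {
    local bridge=$1 idx addr
    nic_vf_map | while read -r idx addr; do
        printf 'ovs-vsctl add-port %s sdp%d -- set Interface sdp%d type=dpdk options:dpdk-devargs=%s mtu_request=9000\n' \
            "$bridge" "$((idx + 1))" "$((idx + 1))" "$addr"
    done
}

# Example (first two VFs only, as in this guide):
#   lspci -nn -d 177d:a0f7 | sdp_port_cmds br-net | head -n 2
```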

3.1.3 Verify the offload results

# The two VMs communicate normally through bridge br-net on the SmartNIC side.

# centos-00:

[root@centos-00 ~]# ping 172.0.0.200 -c 4

PING 172.0.0.200 (172.0.0.200) 56(84) bytes of data.

64 bytes from 172.0.0.200: icmp_seq=1 ttl=64 time=0.220 ms

64 bytes from 172.0.0.200: icmp_seq=2 ttl=64 time=0.164 ms

64 bytes from 172.0.0.200: icmp_seq=3 ttl=64 time=0.140 ms

64 bytes from 172.0.0.200: icmp_seq=4 ttl=64 time=0.132 ms

--- 172.0.0.200 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 3000ms

rtt min/avg/max/mdev = 0.132/0.164/0.220/0.034 ms

[root@centos-00 ~]#

# centos-01:

[root@centos-01 ~]# ping 172.0.0.100 -c 4

PING 172.0.0.100 (172.0.0.100) 56(84) bytes of data.

64 bytes from 172.0.0.100: icmp_seq=1 ttl=64 time=0.159 ms

64 bytes from 172.0.0.100: icmp_seq=2 ttl=64 time=0.163 ms

64 bytes from 172.0.0.100: icmp_seq=3 ttl=64 time=0.179 ms

64 bytes from 172.0.0.100: icmp_seq=4 ttl=64 time=0.180 ms

--- 172.0.0.100 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 2999ms

rtt min/avg/max/mdev = 0.159/0.170/0.180/0.013 ms
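When these checks are scripted, the pass/fail decision can be read from ping's summary line. A bash sketch with hypothetical function names; 0% loss is the expected result here, while the blocked case in Section 3.2.3 expects 100%.

```bash
# Hypothetical helpers that read ping output on stdin.

# Print the packet-loss percentage from the "... % packet loss ..." summary line.
packet_loss() {
    sed -n 's/.* \([0-9.]*\)% packet loss.*/\1/p'
}

# Succeed only if the measured loss equals the expected percentage.
expect_loss() {
    [ "$(packet_loss)" = "$1" ]
}

# Example:
#   ping -c 4 172.0.0.200 | expect_loss 0 && echo PASS
```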

3.2 Offloading a VM-based vFW

3.2.1 Verification approach

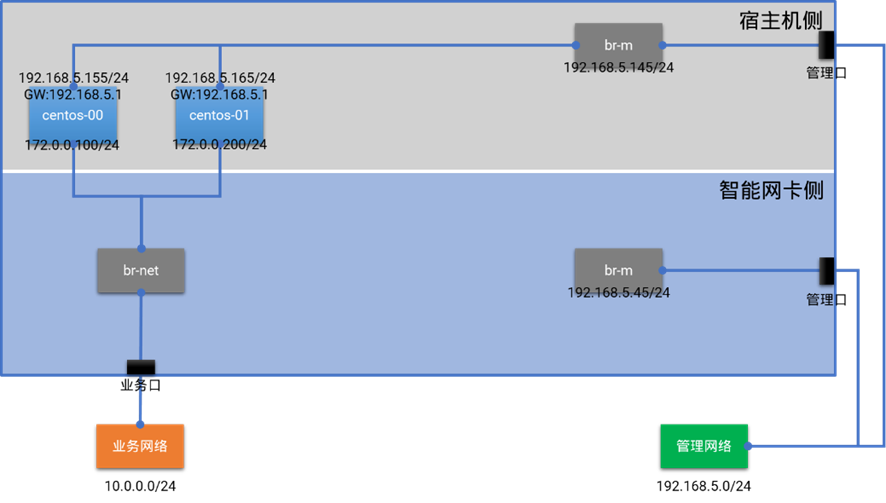

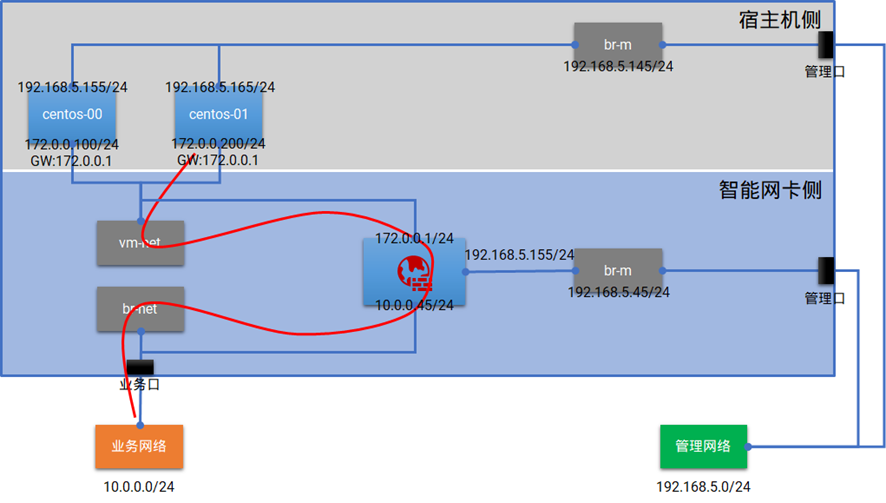

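To verify the SmartNIC's ability to offload VM-based VNFs, this solution starts two VMs on the host side as tenant service instances and runs a CentOS VM on the SmartNIC side, configured with iptables rules to act as the VPC gateway and firewall.

Testing confirmed that the firewall function was successfully offloaded to the SmartNIC. As the figures above show, the tenant VPC subnet is 172.0.0.0/24 and the instances use the VPC gateway 172.0.0.1 as their gateway. When the firewall running on the SmartNIC receives traffic from a service instance destined for another subnet, it filters and forwards that traffic according to preset rules, and applies SNAT before forwarding it to the service network, so that instances in the tenant VPC can reach the service network.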

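3.2.2 Verification procedure

All operations in this subsection build on the environment configured in Section 3.1, so the basic steps are not repeated.

3.2.2.1 Configure bridge vm-net on the SmartNIC side and connect the two host-side VMs to it

# Create bridge vm-net on the SmartNIC side.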

root@OCTEONTX:~# ovs-vsctl add-br vm-net -- set bridge vm-net datapath_type=netdev

# Remove the VFs from bridge br-net.

root@OCTEONTX:~# ovs-vsctl del-port br-net sdp1

root@OCTEONTX:~# ovs-vsctl del-port br-net sdp2

# Attach the VFs to bridge vm-net.

root@OCTEONTX:~# ovs-vsctl add-port vm-net sdp1 -- set Interface sdp1 type=dpdk options:dpdk-devargs=0002:0f:00.2 mtu_request=9000

root@OCTEONTX:~# ovs-vsctl add-port vm-net sdp2 -- set Interface sdp2 type=dpdk options:dpdk-devargs=0002:0f:00.3 mtu_request=9000

3.2.2.2 Create a VM on the SmartNIC side and connect it to bridges vm-net and br-net

# Install the virtualization packages on the SmartNIC side.

root@OCTEONTX:~# apt install -y qemu qemu-utils qemu-efi-arm qemu-efi-aarch64 qemu-system-arm qemu-system-common qemu-system-data qemu-system-gui

# Prepare the VM image and XML files; the resulting layout is:

root@OCTEONTX:/data# mkdir libvirt && cd libvirt

root@OCTEONTX:/data/libvirt# tree

.

|-- images

| |-- CentOS-7-aarch64-GenericCloud-2009.qcow2

| `-- QEMU_EFI.fd

`-- xml

|-- firewall-00.xml

`-- default-net.xml

2 directories, 4 files

root@OCTEONTX:/data/libvirt# cat xml/firewall-00.xml

<domain type='qemu'>

<name>firewall-00</name>

<uuid>dc042799-4e06-466f-8fce-71ac2105f786</uuid>

<metadata>

<libosinfo:libosinfo xmlns:libosinfo="http://libosinfo.org/xmlns/libvirt/domain/1.0">

<libosinfo:os id="http://centos.org/centos/7.0"/>

</libosinfo:libosinfo>

</metadata>

<memory unit='KiB'>2097152</memory>

<currentMemory unit='KiB'>2097152</currentMemory>

<vcpu placement='static'>2</vcpu>

<os>

<type arch='aarch64' machine='virt-4.2'>hvm</type>

<loader readonly='yes' type='pflash'>/usr/share/AAVMF/AAVMF_CODE.fd</loader>

<nvram>/var/lib/libvirt/qemu/nvram/centos-00_VARS.fd</nvram>

<boot dev='hd'/>

</os>

<features>

<acpi/>

<gic version='2'/>

</features>

<cpu mode='custom' match='exact' check='none'>

<model fallback='allow'>cortex-a57</model>

</cpu>

<clock offset='utc'/>

<on_poweroff>destroy</on_poweroff>

<on_reboot>restart</on_reboot>

<on_crash>destroy</on_crash>

<devices>

<emulator>/usr/bin/qemu-system-aarch64</emulator>

<disk type='file' device='disk'>

<driver name='qemu' type='qcow2'/>

<source file='/data/libvirt/images/CentOS-7-aarch64-GenericCloud-2009.qcow2'/>

<target dev='vda' bus='virtio'/>

<address type='pci' domain='0x0000' bus='0x04' slot='0x00' function='0x0'/>

</disk>

<controller type='pci' index='0' model='pcie-root'/>

<controller type='pci' index='1' model='pcie-root-port'>

<model name='pcie-root-port'/>

<target chassis='1' port='0x8'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x0' multifunction='on'/>

</controller>

<controller type='pci' index='2' model='pcie-root-port'>

<model name='pcie-root-port'/>

<target chassis='2' port='0x9'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x1'/>

</controller>

<controller type='pci' index='3' model='pcie-root-port'>

<model name='pcie-root-port'/>

<target chassis='3' port='0xa'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x2'/>

</controller>

<controller type='pci' index='4' model='pcie-root-port'>

<model name='pcie-root-port'/>

<target chassis='4' port='0xb'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x3'/>

</controller>

<controller type='pci' index='5' model='pcie-root-port'>

<model name='pcie-root-port'/>

<target chassis='5' port='0xc'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x4'/>

</controller>

<controller type='pci' index='6' model='pcie-root-port'>

<model name='pcie-root-port'/>

<target chassis='6' port='0xd'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x5'/>

</controller>

<controller type='pci' index='7' model='pcie-root-port'>

<model name='pcie-root-port'/>

<target chassis='7' port='0xe'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x6'/>

</controller>

<controller type='virtio-serial' index='0'>

<address type='pci' domain='0x0000' bus='0x03' slot='0x00' function='0x0'/>

</controller>

<serial type='pty'>

<target type='system-serial' port='0'>

<model name='pl011'/>

</target>

</serial>

<console type='pty'>

<target type='serial' port='0'/>

</console>

<channel type='unix'>

<target type='virtio' name='org.qemu.guest_agent.0'/>

<address type='virtio-serial' controller='0' bus='0' port='1'/>

</channel>

<memballoon model='virtio'>

<address type='pci' domain='0x0000' bus='0x05' slot='0x00' function='0x0'/>

</memballoon>

<rng model='virtio'>

<backend model='random'>/dev/urandom</backend>

<address type='pci' domain='0x0000' bus='0x06' slot='0x00' function='0x0'/>

</rng>

</devices>

</domain>

# Download the CentOS-7-aarch64-GenericCloud-XXXX.qcow2 image separately.

# Define and start the VM.

root@OCTEONTX:/data/libvirt# virsh define xml/firewall-00.xml

root@OCTEONTX:/data/libvirt# virsh start firewall-00

root@OCTEONTX:/data/libvirt# virsh list --all

Id Name State

---------------------------

30 firewall-00 running

# Connect the VM to bridges vm-net, br-net, and br-m.

root@OCTEONTX:/data/libvirt# ip link add fw-if-in type veth peer name fw-if-in-sw

root@OCTEONTX:/data/libvirt# ip link add fw-if-ou type veth peer name fw-if-ou-sw

root@OCTEONTX:/data/libvirt# ip link add fw-m type veth peer name fw-m-sw

root@OCTEONTX:/data/libvirt# ip link set dev fw-m up

root@OCTEONTX:/data/libvirt# ip link set dev fw-m-sw up

root@OCTEONTX:/data/libvirt# ip link set dev fw-if-in up

root@OCTEONTX:/data/libvirt# ip link set dev fw-if-in-sw up

root@OCTEONTX:/data/libvirt# ip link set dev fw-if-ou up

root@OCTEONTX:/data/libvirt# ip link set dev fw-if-ou-sw up

root@OCTEONTX:/data/libvirt# ovs-vsctl add-port vm-net fw-if-in-sw

root@OCTEONTX:/data/libvirt# ovs-vsctl add-port br-net fw-if-ou-sw

root@OCTEONTX:/data/libvirt# ovs-vsctl add-port br-m fw-m-sw

root@OCTEONTX:/data/libvirt# virsh attach-interface firewall-00 --type direct --source fw-if-in --config

root@OCTEONTX:/data/libvirt# virsh attach-interface firewall-00 --type direct --source fw-if-in --live

root@OCTEONTX:/data/libvirt# virsh attach-interface firewall-00 --type direct --source fw-if-ou --config

root@OCTEONTX:/data/libvirt# virsh attach-interface firewall-00 --type direct --source fw-if-ou --live

root@OCTEONTX:/data/libvirt# virsh attach-interface firewall-00 --type direct --source fw-m --config

root@OCTEONTX:/data/libvirt# virsh attach-interface firewall-00 --type direct --source fw-m --live

# Configure the gateway IP on br-net

root@OCTEONTX:/data/libvirt# ip address add dev br-net 10.0.0.1/24

3.2.2.3 Configure firewall rules on the VM on the SmartNIC side

# Configure the IP address of each VM interface.

root@OCTEONTX:~# virsh console firewall-00

Connected to domain firewall-00

Escape character is ^]

[root@firewall ~]# ip link set dev eth0 up

[root@firewall ~]# ip link set dev eth1 up

[root@firewall ~]# ip link set dev eth2 up

[root@firewall ~]# ip add add dev eth0 172.0.0.1/24

[root@firewall ~]# ip add add dev eth1 10.0.0.45/24

[root@firewall ~]# ip add add dev eth2 192.168.5.155/24

[root@firewall ~]# ip route add default via 10.0.0.1 dev eth1

# Enable packet forwarding on the VM.

[root@firewall ~]# echo '1' > /proc/sys/net/ipv4/ip_forward

# Add the firewall test rule: drop all packets from instance 172.0.0.100 (that is, traffic sent by the first VM on the host).

[root@firewall ~]# iptables -I FORWARD -s 172.0.0.100 -j DROP

[root@firewall ~]# iptables -nvL

Chain INPUT (policy ACCEPT 332K packets, 135M bytes)

pkts bytes target prot opt in out source destination

Chain FORWARD (policy ACCEPT 7158 packets, 545K bytes)

pkts bytes target prot opt in out source destination

7305 544K DROP all -- * * 172.0.0.100 0.0.0.0/0

Chain OUTPUT (policy ACCEPT 20823 packets, 1740K bytes)

pkts bytes target prot opt in out source destination

# Add the firewall SNAT rule.

[root@firewall ~]# iptables -t nat -A POSTROUTING -o eth1 -s 172.0.0.0/24 -j SNAT --to-source 10.0.0.45

[root@firewall ~]#iptables -nvL -t nat

Chain PREROUTING (policy ACCEPT 11048 packets, 828K bytes)

pkts bytes target prot opt in out source destination

Chain INPUT (policy ACCEPT 16 packets, 784 bytes)

pkts bytes target prot opt in out source destination

Chain OUTPUT (policy ACCEPT 9639 packets, 725K bytes)

pkts bytes target prot opt in out source destination

Chain POSTROUTING (policy ACCEPT 9639 packets, 725K bytes)

pkts bytes target prot opt in out source destination

6188 470K SNAT all -- * eth1 172.0.0.0/24 0.0.0.0/0 to:10.0.0.45
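Running the two `iptables` commands a second time would add duplicate rules. A bash sketch that applies the same two rules idempotently by first asking `iptables -C` whether an identical rule already exists; the function names are hypothetical, and the `IPTABLES` variable exists only so the command can be swapped for a stub or dry run.

```bash
# Hypothetical helpers; run inside firewall-00.
IPTABLES=${IPTABLES:-iptables}   # command to invoke; overridable for dry runs

# ensure_rule TABLE ACTION CHAIN RULE...   (ACTION is -I or -A)
# Adds the rule only if "iptables -C" reports that it is not already present.
ensure_rule() {
    local table=$1 action=$2 chain=$3
    shift 3
    if ! "$IPTABLES" -t "$table" -C "$chain" "$@" 2>/dev/null; then
        "$IPTABLES" -t "$table" "$action" "$chain" "$@"
    fi
}

apply_vfw_rules() {
    # Test rule: drop everything forwarded from instance 172.0.0.100
    ensure_rule filter -I FORWARD -s 172.0.0.100 -j DROP
    # SNAT tenant VPC traffic leaving through the service-network interface eth1
    ensure_rule nat -A POSTROUTING -o eth1 -s 172.0.0.0/24 -j SNAT --to-source 10.0.0.45
}
```

Rules added this way, like the `ip_forward` setting written to /proc above, do not survive a reboot unless saved separately (for example with `iptables-save`).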

3.2.3 Verify the offload results

# From VM centos-00 on the host, ping the "external gateway" 10.0.0.1 on the service network: communication fails.

[root@centos-00 ~]# ip route del default via 192.168.5.1 dev eth1

[root@centos-00 ~]# ip route add default via 172.0.0.1 dev eth0

[root@centos-00 ~]# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 172.0.0.1 0.0.0.0 UG 0 0 0 eth0

172.0.0.0 0.0.0.0 255.255.255.0 U 0 0 0 eth0

192.168.5.0 0.0.0.0 255.255.255.0 U 0 0 0 eth1

[root@centos-00 ~]# ping 10.0.0.1 -c 4

PING 10.0.0.1 (10.0.0.1) 56(84) bytes of data.

--- 10.0.0.1 ping statistics ---

4 packets transmitted, 0 received, 100% packet loss, time 2999ms

[root@centos-00 ~]#

# From VM centos-01 on the host, ping the "external gateway" 10.0.0.1 on the service network: communication succeeds.

[root@centos-01 ~]# ip route del default via 192.168.5.1 dev eth1

[root@centos-01 ~]# ip route add default via 172.0.0.1 dev eth0

[root@centos-01 ~]# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 172.0.0.1 0.0.0.0 UG 0 0 0 eth0

172.0.0.0 0.0.0.0 255.255.255.0 U 0 0 0 eth0

192.168.5.0 0.0.0.0 255.255.255.0 U 0 0 0 eth1

[root@centos-01 ~]# ping 10.0.0.1 -c 4

PING 10.0.0.1 (10.0.0.1) 56(84) bytes of data.

64 bytes from 10.0.0.1: icmp_seq=1 ttl=63 time=1.07 ms

64 bytes from 10.0.0.1: icmp_seq=2 ttl=63 time=1.04 ms

64 bytes from 10.0.0.1: icmp_seq=3 ttl=63 time=1.04 ms

64 bytes from 10.0.0.1: icmp_seq=4 ttl=63 time=1.04 ms

--- 10.0.0.1 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 3003ms

rtt min/avg/max/mdev = 1.042/1.052/1.075/0.041 ms

3.3 Offloading container-based SSL encryption/decryption

3.3.1 Verification approach

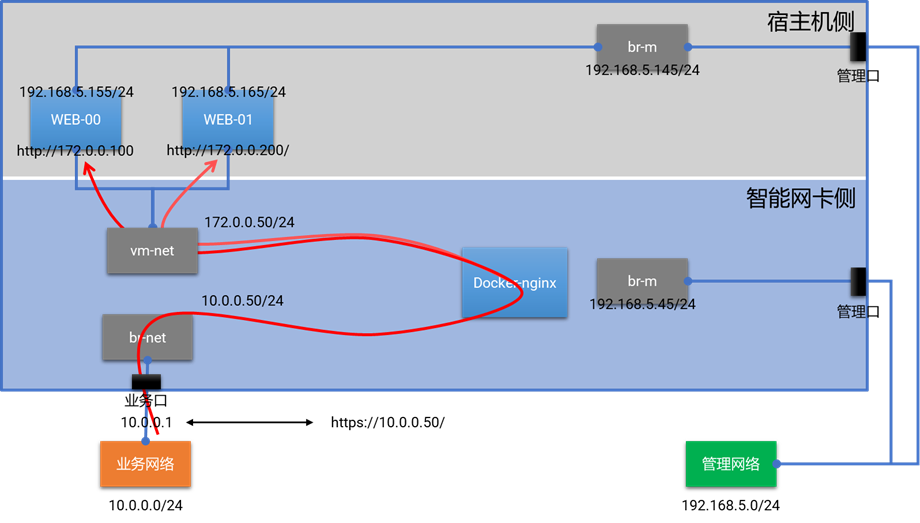

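To verify the SmartNIC's ability to offload container-based VNFs, this solution starts two VMs on the host side as web back ends and runs an nginx container on the SmartNIC side as the SSL encryption/decryption front end and load balancer, serving HTTPS at https://10.0.0.50/ to other users on the service network.

Testing confirmed that SSL encryption/decryption was successfully offloaded to the SmartNIC. When the nginx container receives HTTPS traffic for https://10.0.0.50/ from client 10.0.0.1 on the SmartNIC's 25G service port, it converts the traffic to HTTP and forwards it to the web nodes on the host. Back-end web nodes are selected round-robin, so repeated requests from client 10.0.0.1 alternately receive pages served by WEB-00 and WEB-01.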
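The front end described above is a TLS-terminating reverse proxy. A bash sketch that writes a minimal nginx configuration of that kind; the certificate paths, the output path, and the upstream name `web_backends` are hypothetical, and the container image and networking used in this guide are not covered. nginx balances requests across an `upstream` group round-robin by default, which matches the alternating WEB-00/WEB-01 responses described above.

```bash
# Hypothetical generator for a minimal nginx.conf for the SSL front end.
# Usage: write_nginx_conf /path/to/nginx.conf
write_nginx_conf() {
    cat > "$1" <<'EOF'
events {}

http {
    # Back-end WEB VMs on the host; they receive plain HTTP
    upstream web_backends {
        server 172.0.0.100:80;
        server 172.0.0.200:80;
    }

    server {
        listen 443 ssl;
        server_name 10.0.0.50;

        ssl_certificate     /etc/nginx/certs/server.crt;   # hypothetical path
        ssl_certificate_key /etc/nginx/certs/server.key;   # hypothetical path

        location / {
            proxy_pass http://web_backends;
            proxy_set_header Host $host;
            proxy_set_header X-Forwarded-Proto https;
        }
    }
}
EOF
}
```

`nginx -t -c /path/to/nginx.conf` validates the result. For a lab test, a self-signed certificate can be created with `openssl req -x509 -newkey rsa:2048 -nodes -keyout server.key -out server.crt -days 365 -subj "/CN=10.0.0.50"`.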

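3.3.2 Procedure

All operations in this subsection build on the environment configured in Section 3.2, so the basic steps are not repeated here.

3.3.2.1 Start two VMs on the host and connect each one to both the management network and the service network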

# Shut down the VMs created in Section 3.1 and rename them for use as web backends (virsh domrename only works on a domain that is shut off).

[root@asterfusion ~]# virsh shutdown centos-00

[root@asterfusion ~]# virsh shutdown centos-01

[root@asterfusion ~]# virsh domrename centos-00 WEB-00

[root@asterfusion ~]# virsh domrename centos-01 WEB-01

[root@asterfusion ~]# virsh start WEB-00

[root@asterfusion ~]# virsh start WEB-01

[root@asterfusion ~]# virsh list --all

Id Name State

----------------------------------------------------

13 WEB-00 running

14 WEB-01 running
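`virsh shutdown` only sends a shutdown request to the guest. `virsh domrename` fails while the domain is still running. The following is a minimal sketch that waits for the "shut off" state before renaming. The helper name and the 60-second default timeout are assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical helper: request a shutdown, poll `virsh domstate` until the
# domain reports "shut off" (or the timeout expires), then rename it.
# Usage: rename_domain <old-name> <new-name> [timeout-seconds]
rename_domain() {
  local old=$1 new=$2 tries=${3:-60}
  # Ignore errors here: the domain may already be shut off.
  virsh shutdown "$old" >/dev/null 2>&1
  while [ "$(virsh domstate "$old" 2>/dev/null)" != "shut off" ]; do
    (( tries-- > 0 )) || { echo "timeout waiting for $old" >&2; return 1; }
    sleep 1
  done
  virsh domrename "$old" "$new"
}
```

For example, run `rename_domain centos-00 WEB-00 && virsh start WEB-00`, then repeat for centos-01.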

# Reconfigure the management (eth1) and service (eth0) IP addresses on both VMs.

# WEB-00:

[root@WEB-00 ~]# ip link set dev eth1 up

[root@WEB-00 ~]# ip add add dev eth1 192.168.5.155/24

[root@WEB-00 ~]# ip link set dev eth0 up

[root@WEB-00 ~]# ip add add dev eth0 172.0.0.100/24

[root@WEB-00 ~]# ip route add default via 172.0.0.1 dev eth0
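Addresses added with `ip add add` exist only in the running kernel and are lost when the VM reboots. The following is a minimal sketch that prints a CentOS 7 `network-scripts` file to persist them. It assumes the guests use the legacy network service. The helper name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print an ifcfg file for a static address.
# Usage: make_ifcfg <dev> <address> <prefix-length> [gateway]
# Omit the gateway for the management interface (eth1).
make_ifcfg() {
  local dev=$1 addr=$2 prefix=$3 gw=${4:-}
  printf 'DEVICE=%s\nBOOTPROTO=none\nONBOOT=yes\nIPADDR=%s\nPREFIX=%s\n' \
    "$dev" "$addr" "$prefix"
  if [ -n "$gw" ]; then
    printf 'GATEWAY=%s\n' "$gw"
  fi
}
```

On WEB-00, for example, run `make_ifcfg eth0 172.0.0.100 24 172.0.0.1 > /etc/sysconfig/network-scripts/ifcfg-eth0` and `make_ifcfg eth1 192.168.5.155 24 > /etc/sysconfig/network-scripts/ifcfg-eth1`, then `systemctl restart network`.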

# WEB-01:

[root@WEB-01 ~]# ip link set dev eth1 up

[root@WEB-01 ~]# ip add add dev eth1 192.168.5.165/24

[root@WEB-01 ~]# ip link set dev eth0 up

[root@WEB-01 ~]# ip add add dev eth0 172.0.0.200/24

[root@WEB-01 ~]# ip route add default via 172.0.0.1 dev eth0

3.3.2.2 Configure the two VMs on the host as web backends

# Install httpd on each VM and create an index page.

# WEB-00:

[root@WEB-00 ~]# setenforce 0

[root@WEB-00 ~]# yum update && yum install -y httpd

[root@WEB-00 ~]# cd /var/www/html/

[root@WEB-00 html]# echo "I'm end server: 172.0.0.100" > index.html

[root@WEB-00 html]# systemctl restart httpd

[root@WEB-00 html]# systemctl enable httpd

# WEB-01:

[root@WEB-01 ~]# getenforce

Disabled

[root@WEB-01 ~]# yum update && yum install -y httpd

[root@WEB-01 ~]# cd /var/www/html/

[root@WEB-01 html]# echo "I'm end server: 172.0.0.200" > index.html

[root@WEB-01 html]# systemctl restart httpd

[root@WEB-01 html]# systemctl enable httpd
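Before nginx is placed in front of the backends, it helps to confirm that each backend serves its own page over plain HTTP. Otherwise a backend fault and a proxy fault look the same. The following is a minimal sketch. It assumes it is run from a machine on 172.0.0.0/24, such as the other web VM. The helper name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: fetch http://<ip>/ and compare the body with the
# index.html written above ("I'm end server: <ip>").
check_backend() {
  local ip=$1 expected="I'm end server: $1" body
  body=$(curl -s --max-time 5 "http://$ip/") || { echo "$ip: unreachable"; return 1; }
  if [ "$body" = "$expected" ]; then
    echo "$ip: ok"
  else
    echo "$ip: unexpected body: $body"
    return 1
  fi
}
```

For example: `for ip in 172.0.0.100 172.0.0.200; do check_backend "$ip"; done`.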

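3.3.2.3 On the SmartNIC, assign front-end and back-end IP addresses to two bridges

# Remove the ports and veth pairs from Section 3.2 that are no longer needed, then assign IP addresses to the bridges.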

root@OCTEONTX:~# ovs-vsctl del-port vm-net fw-if-in-sw

root@OCTEONTX:~# ovs-vsctl del-port br-net fw-if-ou-sw

root@OCTEONTX:~# ovs-vsctl del-port br-m fw-m-sw

root@OCTEONTX:~# ip link delete fw-if-in type veth peer name fw-if-in-sw

root@OCTEONTX:~# ip link delete fw-if-ou type veth peer name fw-if-ou-sw

root@OCTEONTX:~# ip link delete fw-m type veth peer name fw-m-sw

root@OCTEONTX:~# ifconfig vm-net 172.0.0.50/24

root@OCTEONTX:~# ifconfig br-net 10.0.0.50/24
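`ifconfig` belongs to the net-tools package, which many distributions no longer install by default. iproute2's `ip` is the usual replacement. The following is a minimal sketch of the same step with `ip`. `ip addr replace` is used so the step can be re-run without an "address already assigned" error. The helper name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: bring an OVS bridge interface up and give it an
# address; "addr replace" is idempotent, unlike "addr add".
set_bridge_ip() {
  local dev=$1 cidr=$2
  ip link set dev "$dev" up && ip addr replace "$cidr" dev "$dev"
}
```

For example: `set_bridge_ip vm-net 172.0.0.50/24` and `set_bridge_ip br-net 10.0.0.50/24`.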

3.3.2.4 Offload container-based SSL encryption/decryption on the SmartNIC

# Prepare the nginx directories and configuration files.

root@OCTEONTX:~# cd /data/

root@OCTEONTX:/data# mkdir nginx && cd nginx

root@OCTEONTX:/data/nginx# mkdir config data logs ssl

root@OCTEONTX:/data/nginx# ll

total 20K

drwxr-xr-x 3 root root 4.0K Sep 18 01:54 config

drwxr-xr-x 2 root root 4.0K Sep 17 08:06 data

drwxr-xr-x 2 root root 4.0K Sep 18 02:15 logs

drwxr-xr-x 2 root root 4.0K Sep 18 02:02 ssl

# Create a self-signed certificate.

root@OCTEONTX:/data/nginx# cd ssl/

root@OCTEONTX:/data/nginx/ssl# openssl genrsa -des3 -out server.key 2048

root@OCTEONTX:/data/nginx/ssl# openssl req -new -key server.key -out server.csr

root@OCTEONTX:/data/nginx/ssl# openssl rsa -in server.key -out server_nopwd.key

root@OCTEONTX:/data/nginx/ssl# openssl x509 -req -days 365 -in server.csr -signkey
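The commands above are interactive: they prompt for a pass phrase and for the certificate subject. As an alternative, the following sketch creates an unencrypted key and a self-signed certificate in a single `openssl req -x509` call. It writes the two file names that `default.conf` expects. The helper name and the subject CN of 10.0.0.50 are assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical helper: generate a 2048-bit RSA key without a pass phrase
# (-nodes) and a self-signed certificate valid for 365 days, with no prompts.
# Usage: make_self_signed [output-dir] [common-name]
make_self_signed() {
  local dir=${1:-/data/nginx/ssl} cn=${2:-10.0.0.50}
  openssl req -x509 -nodes -newkey rsa:2048 -days 365 \
    -keyout "$dir/server_nopwd.key" -out "$dir/server.crt" \
    -subj "/CN=$cn"
}
```

Because the certificate is self-signed, clients still need `curl --insecure` or must import `server.crt` as a trusted certificate.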

# The finished nginx directory and its configuration.

root@OCTEONTX:/data/nginx# tree

.

|-- config

| |-- conf.d

| | `-- default.conf

| `-- nginx.conf

|-- data

| `-- index.html

|-- logs

| |-- access.log

| `-- error.log

|-- ssl

| |-- server.crt

| |-- server.csr

| |-- server.key

| `-- server_nopwd.key

`-- start-n.sh

5 directories, 10 files

root@OCTEONTX:/data/nginx# cat data/index.html

I'm SSL Proxer

root@OCTEONTX:/data/nginx# cat config/conf.d/default.conf

upstream end_server {

server 172.0.0.100:80 weight=1 max_fails=3 fail_timeout=15s;

server 172.0.0.200:80 weight=1 max_fails=3 fail_timeout=15s;

}

server {

listen 443 ssl;

server_name localhost;

ssl_certificate /ssl/server.crt;

ssl_certificate_key /ssl/server_nopwd.key;

ssl_session_cache shared:SSL:1m;

ssl_session_timeout 5m;

ssl_protocols TLSv1.2;

ssl_ciphers HIGH:!aNULL:!MD5;

ssl_prefer_server_ciphers on;

location / {

root /usr/share/nginx/html;

index index.html index.htm;

proxy_pass http://end_server/;

proxy_set_header Host $host:$server_port;

}

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root /usr/share/nginx/html;

}

proxy_ignore_client_abort on;

}

# Run the nginx container on the SmartNIC's operating system.

root@OCTEONTX:/data/nginx# docker run -d --network host --name nginx-00 -v /data/nginx/data:/usr/share/nginx/html:rw -v /data/nginx/config/nginx.conf:/etc/nginx/nginx.conf:rw -v /data/nginx/config/conf.d/default.conf:/etc/nginx/conf.d/default.conf:rw -v /data/nginx/logs:/var/log/nginx/:rw -v /data/nginx/ssl:/ssl/:rw nginx
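When the files under `/data/nginx/config` change later, a syntax error can take the HTTPS front end down. The following is a minimal sketch that checks the configuration inside the running container and reloads nginx only if the check passes. It uses the standard `nginx -t` and `nginx -s reload` commands through `docker exec`. The helper name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: validate, then reload, nginx in a running container.
# Usage: reload_nginx [container-name]
reload_nginx() {
  local name=${1:-nginx-00}
  docker exec "$name" nginx -t && docker exec "$name" nginx -s reload
}
```

Note that single-file bind mounts such as `default.conf` follow the original file's inode. An editor that saves by writing a new file and renaming it may leave the container seeing the old content. If `nginx -t` does not reflect an edit, restart the container.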

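3.3.3 Verifying the Offload Result

# From a server on the service network, access https://10.0.0.50/. If the offload works, the page served by one of the backend web VMs is returned.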

[root@compute-01 ~]# ip a | grep -i "enp2s0f0"

8: enp2s0f0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

inet 10.0.0.1/24 scope global enp2s0f0

[root@compute-01 ~]# curl --insecure https://10.0.0.50/

I'm end server: 172.0.0.100

[root@compute-01 ~]# curl --insecure https://10.0.0.50/

I'm end server: 172.0.0.200
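Two requests show the alternation. A larger sample gives a clearer picture of how requests are distributed across backends. The following is a minimal sketch that sends N requests from the client and counts responses per backend. The helper name and the default of 10 requests are assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical helper: send N HTTPS requests and count identical response
# bodies. --insecure is needed because the certificate is self-signed.
# Usage: tally_backends <url> [requests]
tally_backends() {
  local url=$1 n=${2:-10} i
  for (( i = 0; i < n; i++ )); do
    curl -s --insecure --max-time 5 "$url"
    echo   # terminate bodies that lack a trailing newline
  done | grep -v '^$' | sort | uniq -c
}
```

On compute-01, `tally_backends https://10.0.0.50/ 10` should show a roughly even split between 172.0.0.100 and 172.0.0.200 when both backends are healthy.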

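4 Running VNFs on a Server vs. on the SmartNIC

VNFs are usually deployed as virtual machines or containers. In terms of installation and deployment, there is essentially no difference between a server and the Helium SmartNIC. As long as a build for the platform is available, the VNF can be installed and run, and the configuration methods and command lines are essentially the same.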

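As for software availability, x86 servers dominate the market, so operating systems, container runtimes, hypervisors, images, and applications are all offered in x86 builds. The Helium SmartNIC is an arm-based platform, and most popular operating systems, container runtimes, and hypervisors also ship arm builds. However, only a minority of application software and container images support arm. If a customer's VNF software that previously ran on x86 has no arm build, developers must first port and test it.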
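Before planning a port, it is worth checking whether a container image already publishes an arm64 variant. The following is a minimal sketch that inspects the image's manifest list with `docker manifest inspect`. On older Docker CLI versions this command may require experimental features to be enabled. A single-architecture image has no platform list, so the sketch reports it as lacking an arm64 variant even if it was built for arm64. The helper name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report whether an image's manifest list contains a
# linux/arm64 entry. Exit status: 0 = found, 1 = not listed, 2 = lookup failed.
has_arm64() {
  local manifest
  manifest=$(docker manifest inspect "$1" 2>/dev/null) || { echo "$1: not found"; return 2; }
  if printf '%s' "$manifest" | grep -q '"architecture": *"arm64"'; then
    echo "$1: arm64 available"
  else
    echo "$1: no arm64 variant listed"
    return 1
  fi
}
```

For example, `has_arm64 nginx` checks the image used in Section 3.3.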

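Porting happens at the source-code level, so it must be done by developers. Porting code from x86 to arm raises two kinds of issues. First, the two CPUs behave differently when handling certain kinds of overflow. Second, they use different instruction sets (CISC versus RISC), so their machine instructions do not map one-to-one. If a project embeds assembly for acceleration, porting becomes considerably harder.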
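The embedded-assembly case can be located mechanically before porting starts. The following is a rough heuristic sketch. It lists C/C++ lines that contain GCC-style inline assembly or include common x86 SIMD intrinsic headers. It cannot find behavioral differences such as overflow handling, which still require testing on the arm target. The helper name and the header list are assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical helper: grep a source tree for x86-specific constructs:
# asm/__asm__ statements and x86 intrinsic headers. Heuristic only; it may
# also match occurrences inside comments.
# Usage: find_x86_specific [source-dir]
find_x86_specific() {
  grep -rnE --include='*.c' --include='*.h' --include='*.cc' --include='*.cpp' \
    '(^|[^A-Za-z0-9_])(__asm__|asm)[[:space:]]*(volatile[[:space:]]*|__volatile__[[:space:]]*)?\(|#include[[:space:]]*<(x86intrin|immintrin|emmintrin|xmmintrin)\.h>' \
    "${1:-.}"
}
```

Each reported line marks a spot that needs an arm equivalent or a portable C fallback.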

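5 Summary

The tests described above show that the Helium SmartNIC can offload OVS, a VM-based VNF (vFW), and a container-based VNF (SSL encryption/decryption). In the future, combined with the coprocessors on the Helium SmartNIC's SoC, it should be able not only to offload VNFs but also to further improve their processing performance.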