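Functional Verification: Offloading the DPVS Layer-4 Load Balancer to the DPU on X-T Series Hardware Platforms

1 Solution Overview

This document describes how to verify the service offload capability of the DPU card in Asterfusion (星融元) X-T series switches, and reports the load performance measured after offload. Both the functional verification and the performance test use DPVS as the example workload. DPVS is an open-source, DPDK-based, high-performance Layer-4 load balancer.

Before reproducing the verification scenario described here, we recommend reading the X-T Programmable Bare Metal user guide (《X-T Programmable Bare Metal用户指导手册》) to become familiar with the concepts behind Asterfusion X-T series switches and the DPU card.

2 Hardware and Software Environment

The hardware and software used during verification are listed in Table 2-1 and Table 2-2.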

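| Name | Model | Hardware specification | Quantity |
|---|---|---|---|
| Switch | X312P-48Y-T | Equipped with one DPU card | 1 |
| Server | General-purpose x86 server | Equipped with 10G optical ports | 4 |
| Optical module | 10G | SFP+ | 8 |
| Optical fiber | Multimode | Suitable for 10G | 4 |

Table 2-1: Hardware environment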

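| Software | Version | Notes |
|---|---|---|
| Server operating system | CentOS Linux release 7.8.2003 (Core) | Open-source release |
| Switch operating system | AsterNOS v3.1 | Contact technical support for the matching software package |
| DPU card operating system | Debian 10.3 (Kernel 4.14.76-17.0.1) | Contact technical support for the matching software package |
| DPDK | 19.11.0 | Contact technical support for the matching software package |
| DPVS | 1.8-8 | Open-source release |

Table 2-2: Software environment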

3 Verification Approach and Procedure

3.1 Verification Approach

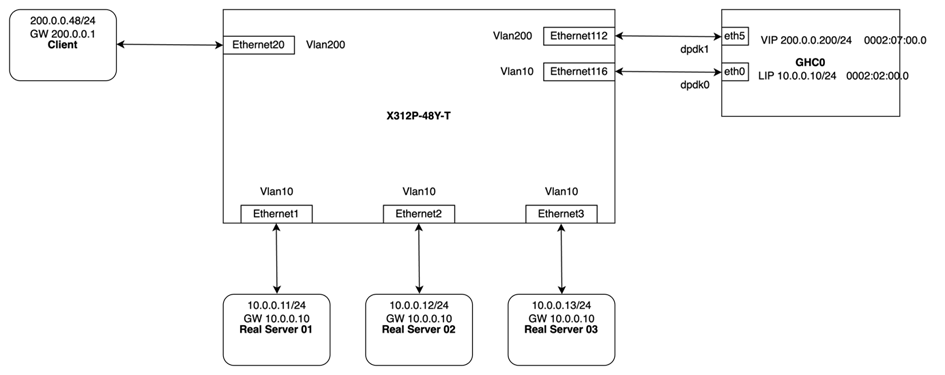

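To verify that the DPU card of Asterfusion X-T series switches can offload DPVS, this verification uses four servers directly connected to the switch. One server acts as the Client and issues HTTP requests; the other three act as Real Servers that provide a web service and answer those requests. DPDK and DPVS are compiled and installed on the DPU card, and Layer-4 load balancing is configured and tested in two-arm Full-NAT mode. The device connection topology is shown in Figure 3-1.

In DPVS two-arm mode, two VLANs must be configured on the switch. One VLAN forwards packets between the Client and the dpdk1 port on the DPU card; the other forwards packets between the three back-end Real Servers and the dpdk0 port on the DPU card. The VLAN assignment, port allocation, port roles, and IP configuration are shown in Figure 3-2.

3.2 Verification Procedure

3.2.1 Configure VLANs on the Switch

# Configure VLANs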

admin@sonic:~$ sudo config vlan add 10

admin@sonic:~$ sudo config vlan add 200

admin@sonic:~$ sudo config vlan member add 10 Ethernet1 -u

admin@sonic:~$ sudo config vlan member add 10 Ethernet2 -u

admin@sonic:~$ sudo config vlan member add 10 Ethernet3 -u

admin@sonic:~$ sudo config vlan member add 10 Ethernet116 -u

admin@sonic:~$ sudo config vlan member add 200 Ethernet20 -u

admin@sonic:~$ sudo config vlan member add 200 Ethernet112 -u
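The VLAN commands above follow a regular pattern, so they can be generated from a small membership table. The following Python sketch is only an illustration: the VLAN IDs and port names are copied from the commands above, while the helper name `vlan_commands` is made up for this example and is not part of AsterNOS.

```python
# VLAN membership copied from the commands above.
VLAN_MEMBERS = {
    10: ["Ethernet1", "Ethernet2", "Ethernet3", "Ethernet116"],
    200: ["Ethernet20", "Ethernet112"],
}


def vlan_commands(members):
    """Return CLI lines that create each VLAN, then add its untagged members."""
    lines = [f"sudo config vlan add {vlan_id}" for vlan_id in members]
    for vlan_id, ports in members.items():
        for port in ports:
            # -u adds the port as an untagged member, as in the commands above.
            lines.append(f"sudo config vlan member add {vlan_id} {port} -u")
    return lines


def ports_in_multiple_vlans(members):
    """Return ports listed under more than one VLAN (should be empty here)."""
    seen, duplicates = set(), set()
    for ports in members.values():
        for port in ports:
            (duplicates if port in seen else seen).add(port)
    return duplicates


commands = vlan_commands(VLAN_MEMBERS)
for line in commands:
    print(line)
```

Checking `ports_in_multiple_vlans` before applying the commands is a cheap way to catch a port that was accidentally assigned as untagged to both arms.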

3.2.2 Compile and Install DPDK and DPVS on the DPU Card

# Set up the build environment

root@OCTEONTX:~# apt-get install libpopt0 libpopt-dev libnl-3-200 libnl-3-dev libnl-genl-3-dev libpcap-dev

root@OCTEONTX:~# tar xvf linux-custom.tgz

root@OCTEONTX:~# ln -s `pwd`/linux-custom /lib/modules/`uname -r`/build

# Build DPDK

root@OCTEONTX:~# cd /var/dpvs/

root@OCTEONTX:/var/dpvs# tar xvf dpdk-19.11.0_raw.tar.bz2

root@OCTEONTX:/var/dpvs# cd dpdk-19.11.0

root@OCTEONTX:/var/dpvs/dpdk-19.11.0# export TARGET="arm64-octeontx2-linux-gcc"

root@OCTEONTX:/var/dpvs/dpdk-19.11.0# export RTE_SDK=`pwd`

root@OCTEONTX:/var/dpvs/dpdk-19.11.0# export RTE_TARGET="build"

root@OCTEONTX:/var/dpvs/dpdk-19.11.0# export PATH="${PATH}:$RTE_SDK/usertools"

root@OCTEONTX:/var/dpvs/dpdk-19.11.0# make config T=arm64-octeontx2-linux-gcc

root@OCTEONTX:/var/dpvs/dpdk-19.11.0# sed -i 's/CONFIG_RTE_LIBRTE_PMD_PCAP=n/CONFIG_RTE_LIBRTE_PMD_PCAP=y/g' $RTE_SDK/build/.config

root@OCTEONTX:/var/dpvs/dpdk-19.11.0# make -j

# Build DPVS

root@OCTEONTX:~# cd /var/dpvs/

root@OCTEONTX:/var/dpvs# tar xvf dpvs.tar

root@OCTEONTX:/var/dpvs# cd dpvs/

root@OCTEONTX:/var/dpvs/dpvs# patch -p1 < dpvs_5346e4c645c_with_dpdk.patch

root@OCTEONTX:/var/dpvs/dpvs# make -j

root@OCTEONTX:/var/dpvs/dpvs# make install

# Load the kernel module, set up hugepages, and bind the DPDK driver to the selected ports

root@OCTEONTX:~# cd /var/dpvs

root@OCTEONTX:/var/dpvs# insmod /var/dpvs/dpdk-19.11.0/build/build/kernel/linux/kni/rte_kni.ko carrier=on

root@OCTEONTX:/var/dpvs# echo 128 > /sys/kernel/mm/hugepages/hugepages-2048kB/nr_hugepages

root@OCTEONTX:/var/dpvs# mount -t hugetlbfs nodev /mnt/huge -o pagesize=2M

root@OCTEONTX:/var/dpvs# dpdk-devbind.py -b vfio-pci 0002:02:00.0

root@OCTEONTX:/var/dpvs# dpdk-devbind.py -b vfio-pci 0002:07:00.0

root@OCTEONTX:/var/dpvs# dpdk-devbind.py -s

Network devices using DPDK-compatible driver

============================================

0002:02:00.0 'Device a063' drv=vfio-pci unused=

0002:07:00.0 'Device a063' drv=vfio-pci unused=

Network devices using kernel driver

===================================

0000:01:10.0 'Device a059' if= drv=octeontx2-cgx unused=vfio-pci

0000:01:10.1 'Device a059' if= drv=octeontx2-cgx unused=vfio-pci

0000:01:10.2 'Device a059' if= drv=octeontx2-cgx unused=vfio-pci

......

root@OCTEONTX:/var/dpvs#
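As a quick sanity check on the hugepage step above, the amount of memory it reserves can be computed from the two values that appear in the command: the page size in the sysfs path and the page count written to nr_hugepages. The sketch below is plain arithmetic; the function name is illustrative only.

```python
HUGEPAGE_SIZE_KB = 2048  # from the hugepages-2048kB sysfs directory
NR_HUGEPAGES = 128       # value written to nr_hugepages


def reserved_mib(pages, page_kb):
    """Total hugepage memory in MiB for a given page count and page size."""
    return pages * page_kb // 1024


total_mib = reserved_mib(NR_HUGEPAGES, HUGEPAGE_SIZE_KB)
print(f"{NR_HUGEPAGES} pages x {HUGEPAGE_SIZE_KB} kB = {total_mib} MiB")
```

If DPVS fails to start because of memory allocation errors, this figure is the first thing to compare against the pool sizes in the configuration file.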

3.2.3 Configure the Load-Balancing Service on the DPU Card

root@OCTEONTX:/var/dpvs# ./dpvs/bin/dpvs -- -w 0002:02:00.0 -w 0002:07:00.0

root@OCTEONTX:/var/dpvs#

root@OCTEONTX:/var/dpvs# ./dpvs/bin/dpip link set dpdk0 link up

root@OCTEONTX:/var/dpvs# ./dpvs/bin/dpip link set dpdk1 link up

root@OCTEONTX:/var/dpvs# ./dpvs/bin/dpip addr add 10.0.0.10/32 dev dpdk0 sapool

root@OCTEONTX:/var/dpvs# ./dpvs/bin/dpip addr add 200.0.0.200/32 dev dpdk1

root@OCTEONTX:/var/dpvs# ./dpvs/bin/dpip route add 10.0.0.0/24 dev dpdk0

root@OCTEONTX:/var/dpvs# ./dpvs/bin/dpip route add 200.0.0.0/24 dev dpdk1

root@OCTEONTX:/var/dpvs#

root@OCTEONTX:/var/dpvs# ./dpvs/bin/ipvsadm -A -t 200.0.0.200:80 -s rr

root@OCTEONTX:/var/dpvs# ./dpvs/bin/ipvsadm -a -t 200.0.0.200:80 -r 10.0.0.11 -b

root@OCTEONTX:/var/dpvs# ./dpvs/bin/ipvsadm -a -t 200.0.0.200:80 -r 10.0.0.12 -b

root@OCTEONTX:/var/dpvs# ./dpvs/bin/ipvsadm -a -t 200.0.0.200:80 -r 10.0.0.13 -b

root@OCTEONTX:/var/dpvs# ./dpvs/bin/ipvsadm --add-laddr -z 10.0.0.10 -t 200.0.0.200:80 -F dpdk0

root@OCTEONTX:/var/dpvs#

root@OCTEONTX:/var/dpvs# ./dpvs/bin/ipvsadm -G

VIP:VPORT TOTAL SNAT_IP CONFLICTS CONNS

200.0.0.200:80 1

10.0.0.10 0 0

root@OCTEONTX:/var/dpvs# ./dpvs/bin/ipvsadm -ln

IP Virtual Server version 0.0.0 (size=0)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 200.0.0.200:80 rr

-> 10.0.0.11:80 FullNat 1 0 0

-> 10.0.0.12:80 FullNat 1 0 0

-> 10.0.0.13:80 FullNat 1 0 0

root@OCTEONTX:/var/dpvs#

3.2.4 Configure the Network and Web Service on the Three Real Servers

# Real Server 01

[root@node-01 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether b8:59:9f:42:36:69 brd ff:ff:ff:ff:ff:ff

inet 10.0.0.11/24 brd 10.0.0.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::ba59:9fff:fe42:3669/64 scope link

valid_lft forever preferred_lft forever

[root@node-01 ~]# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 10.0.0.10 0.0.0.0 UG 0 0 0 eth0

10.0.0.0 0.0.0.0 255.255.255.0 U 0 0 0 eth0

[root@node-01 ~]# cat index.html

Real Server 01

[root@node-01 ~]# python -m SimpleHTTPServer 80

Serving HTTP on 0.0.0.0 port 80 ...

10.0.0.10 - - [23/Dec/2022 02:57:18] "GET / HTTP/1.1" 200 -

# Real Server 02

[root@node-02 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 68:91:d0:64:02:f1 brd ff:ff:ff:ff:ff:ff

inet 10.0.0.12/24 brd 10.0.0.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::6a91:d0ff:fe64:2f1/64 scope link

valid_lft forever preferred_lft forever

[root@node-02 ~]# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 10.0.0.10 0.0.0.0 UG 0 0 0 eth0

10.0.0.0 0.0.0.0 255.255.255.0 U 0 0 0 eth0

[root@node-02 ~]# python -m SimpleHTTPServer 80

Serving HTTP on 0.0.0.0 port 80 ...

10.0.0.10 - - [23/Dec/2022 08:16:40] "GET / HTTP/1.1" 200 -

# Real Server 03

[root@node-03 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master ac state UP group default qlen 1000

link/ether b8:59:9f:c7:73:cb brd ff:ff:ff:ff:ff:ff

inet6 fe80::ba59:9fff:fec7:73cb/64 scope link

valid_lft forever preferred_lft forever

[root@node-03 ~]# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 10.0.0.10 0.0.0.0 UG 0 0 0 eth1

10.0.0.0 0.0.0.0 255.255.255.0 U 0 0 0 eth1

[root@node-03 ~]# python -m SimpleHTTPServer 80

Serving HTTP on 0.0.0.0 port 80 ...

10.0.0.10 - - [23/Dec/2022 08:16:39] "GET / HTTP/1.1" 200 -

3.2.5 Configure the Client Network

[root@node-00 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether b8:59:9f:42:36:68 brd ff:ff:ff:ff:ff:ff

inet 200.0.0.48/24 brd 200.0.0.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::ba59:9fff:fe42:3668/64 scope link

valid_lft forever preferred_lft forever

[root@node-00 ~]# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 200.0.0.1 0.0.0.0 UG 0 0 0 eth0

200.0.0.0 0.0.0.0 255.255.255.0 U 0 0 0 eth0

[root@node-00 ~]#

3.3 Verifying the Offload Result

# On the Client, use curl to access http://<VIP> and verify DPVS load balancing

[root@node-00 ~]# curl http://200.0.0.200

Real Server 01

[root@node-00 ~]# curl http://200.0.0.200

Real Server 02

[root@node-00 ~]# curl http://200.0.0.200

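Real Server 03

The verification shows that DPVS, a high-performance Layer-4 load balancer, was successfully offloaded to the DPU card of the Asterfusion X-T series switch. The Client results show that when the Client accesses http://200.0.0.200, packets are first forwarded by the switch to the DPU card, and DPVS then distributes them to the three back-end web servers in round-robin order according to the configured rules.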
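The behaviour observed above (successive requests landing on Real Server 01, 02, 03, and the Real Servers logging 10.0.0.10 as the peer address) can be modelled with a few lines of Python. This is a simplified sketch, not DPVS code: the addresses come from the configuration in this document, while the client source ports and the local-port allocation scheme (`first_local_port`) are assumptions made only for illustration.

```python
from itertools import cycle

VIP = ("200.0.0.200", 80)          # virtual service from the ipvsadm -A command
LOCAL_IP = "10.0.0.10"             # local address added with --add-laddr
REAL_SERVERS = ["10.0.0.11", "10.0.0.12", "10.0.0.13"]


def full_nat_rr(clients, first_local_port=1025):
    """Map each new client connection to a back end in round-robin order.

    Returns a list of (client_side, server_side) pairs, where each side is
    a (source, destination) tuple of (ip, port) addresses. In Full-NAT both
    the source and the destination are rewritten on the server side.
    """
    backends = cycle(REAL_SERVERS)
    connections = []
    for offset, (client_ip, client_port) in enumerate(clients):
        real_server = next(backends)
        client_side = ((client_ip, client_port), VIP)
        # Hypothetical local port choice; the real allocator is not modelled.
        server_side = ((LOCAL_IP, first_local_port + offset), (real_server, 80))
        connections.append((client_side, server_side))
    return connections


# Four connections from the Client (source ports are made-up examples).
clients = [("200.0.0.48", 40000 + i) for i in range(4)]
connections = full_nat_rr(clients)
for client_side, server_side in connections:
    print(client_side, "->", server_side)
```

Because the server-side source is always the local address, every Real Server sees 10.0.0.10 as its peer, which matches the SimpleHTTPServer access logs shown earlier.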

4 Performance Test

4.1 Test Environment

4.1.1 Test Topology

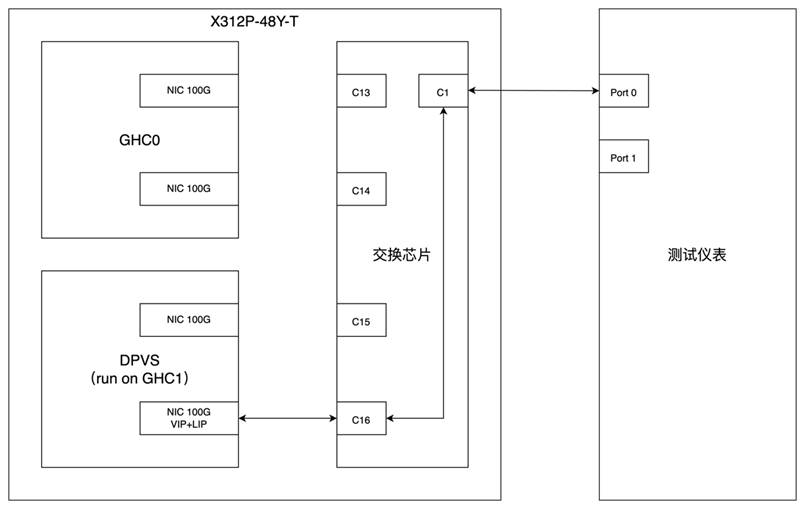

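The test topology is shown in Figure 4-1. A 100G port on the tester is connected to a 100G port on the switch front panel. Rules configured in AsterNOS steer traffic from 100G port C1 to C16, where C16 is a 100G channel that interconnects with the DPU card. After DPVS processes the traffic, it returns along the same path.

4.1.2 Tester Traffic Configuration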

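- The source MAC is fixed; the destination MAC is fixed to the MAC of the NIC used by DPVS;

- The source IP increments; the destination IP is fixed to the VIP configured in DPVS;

- The TCP source port is fixed, the destination port is 80, the SYN flag is set to 1, and all other flags are set to 0.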
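The traffic profile in the list above can be expressed as a simple flow generator. The sketch below uses only the Python standard library and produces flow descriptors rather than real packets. The starting source address and the fixed source port are placeholders, since the document does not state the values used on the tester.

```python
import ipaddress


def syn_flows(first_src_ip, count, vip, src_port=1024, dst_port=80):
    """Build flow descriptors: incrementing source IP, fixed VIP, SYN only.

    first_src_ip and src_port are placeholder values for illustration.
    """
    base = ipaddress.IPv4Address(first_src_ip)
    return [
        {
            "src_ip": str(base + i),   # source IP increments per flow
            "dst_ip": vip,             # destination fixed to the DPVS VIP
            "src_port": src_port,      # TCP source port stays constant
            "dst_port": dst_port,      # destination port 80
            "tcp_flags": "SYN",        # only SYN set, all other flags clear
        }
        for i in range(count)
    ]


flows = syn_flows("192.168.0.1", 3, "200.0.0.200")
for flow in flows:
    print(flow)
```

Since every packet is a SYN from a new source address, each one asks DPVS to create a new connection entry, which is why this profile is also suited to the connection-setup test in section 4.2.4.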

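4.2 Test Results

4.2.1 Maximum Single-Stream Forwarding Performance by Core Count in Full-NAT Mode

The tester sends traffic with 64-byte packets at a rate of 100 Gbps. The maximum DPVS forwarding performance measured for each core-count configuration is shown in the table below.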

| Core count | 1 | 4 | 8 | 16 | 23 |
|---|---|---|---|---|---|
| DPVS forwarding bandwidth (Gbps) | 0.77 | 2.66 | 5.16 | 10.01 | 14.16 |

Table 4-1: Maximum single-stream forwarding performance by core count in Full-NAT mode
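To see how well forwarding scales with core count, the figures in Table 4-1 can be normalised to per-core throughput and compared with the single-core result. The sketch below does only that arithmetic on the published numbers; the function name is illustrative.

```python
CORES = [1, 4, 8, 16, 23]
GBPS = [0.77, 2.66, 5.16, 10.01, 14.16]  # values from Table 4-1


def scaling_rows(cores, gbps):
    """Return (cores, Gbps per core, efficiency vs. the first entry)."""
    baseline = gbps[0] / cores[0]
    rows = []
    for n, bandwidth in zip(cores, gbps):
        per_core = bandwidth / n
        rows.append((n, per_core, per_core / baseline))
    return rows


rows = scaling_rows(CORES, GBPS)
for n, per_core, efficiency in rows:
    print(f"{n:>2} cores: {per_core:.3f} Gbps/core, {efficiency:.1%} of single-core")
```

With these numbers, per-core throughput at 23 cores is about 80% of the single-core figure, so scaling is close to linear across the tested range.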

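4.2.2 Stable Forwarding Performance with 16 Cores in Full-NAT Mode

The tester sends traffic continuously for 5 minutes at each packet length. The table below shows the stable, zero-loss forwarding performance of DPVS with 16 cores at different packet lengths.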

| Packet length (bytes) | 78 | 128 | 256 | 512 |
|---|---|---|---|---|
| DPVS forwarding bandwidth (Gbps) | 9.6 | 13.6 | 21.6 | 31.2 |

Table 4-2: Stable forwarding performance with 16 cores in Full-NAT mode
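Bandwidth figures such as those in Table 4-2 can be converted to packet rates, which are often more telling for a load balancer. The sketch below assumes that the reported bandwidth counts only the bytes of each frame, without the Ethernet preamble and inter-frame gap. The document does not state how the tester accounts for that overhead, so the `overhead_bytes` parameter is provided to adjust the assumption.

```python
PACKET_LEN_BYTES = [78, 128, 256, 512]
GBPS_16_CORES = [9.6, 13.6, 21.6, 31.2]  # values from Table 4-2


def mpps(gbps, frame_bytes, overhead_bytes=0):
    """Convert bandwidth in Gbps to millions of packets per second.

    overhead_bytes=0 assumes the bandwidth counts frame bytes only;
    pass 20 if preamble and inter-frame gap are included in the figure.
    """
    bits_per_packet = (frame_bytes + overhead_bytes) * 8
    return gbps * 1e9 / bits_per_packet / 1e6


rates = {
    length: mpps(bandwidth, length)
    for length, bandwidth in zip(PACKET_LEN_BYTES, GBPS_16_CORES)
}
for length, rate in rates.items():
    print(f"{length:>3} bytes: {rate:.2f} Mpps")
```

Under this assumption the packet rate falls as the packet length grows, which suggests that per-packet processing is not the only limit at larger sizes.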

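4.2.3 Stable Forwarding Performance with 23 Cores in Full-NAT Mode

The tester sends traffic continuously for 5 minutes at each packet length. The table below shows the stable, zero-loss forwarding performance of DPVS with 23 cores at different packet lengths.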

| Packet length (bytes) | 78 | 128 | 256 | 512 |
|---|---|---|---|---|
| DPVS forwarding bandwidth (Gbps) | 12.2 | 17.7 | 27.9 | 42.8 |

Table 4-3: Stable forwarding performance with 23 cores in Full-NAT mode
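Comparing Table 4-2 with Table 4-3 shows how much of the extra core count turns into extra bandwidth. The following sketch computes the gain at each packet length and sets it against the increase in cores (23/16). It uses only the published figures.

```python
PACKET_LEN_BYTES = [78, 128, 256, 512]
GBPS_16 = [9.6, 13.6, 21.6, 31.2]    # Table 4-2
GBPS_23 = [12.2, 17.7, 27.9, 42.8]   # Table 4-3

core_ratio = 23 / 16

# Bandwidth gain when moving from 16 to 23 cores, per packet length.
gains = {
    length: bw23 / bw16
    for length, bw16, bw23 in zip(PACKET_LEN_BYTES, GBPS_16, GBPS_23)
}

print(f"core ratio: {core_ratio:.3f}")
for length, gain in gains.items():
    print(f"{length:>3} bytes: x{gain:.3f} bandwidth")
```

At every packet length the bandwidth gain stays below the core ratio, with 512-byte packets coming closest.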

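4.2.4 Multi-Core Connection Setup Performance in Full-NAT Mode

The tester sends traffic with 64-byte packets at a rate of 100 Gbps. The table below shows the maximum number of new connections per second that DPVS sustains for each core-count configuration.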

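| Core count | 1 | 4 | 8 | 16 | 23 |
|---|---|---|---|---|---|
| Maximum new connections per second | 220,000 | 550,000 | 940,000 | 1,630,000 | 2,030,000 |

Table 4-4: Multi-core connection setup performance in Full-NAT mode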
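The connection setup figures in Table 4-4 can also be normalised per core. The source table gives the values in units of 10,000 (万), so the sketch below multiplies them out before dividing by the core count.

```python
CORES = [1, 4, 8, 16, 23]
CPS_IN_TEN_THOUSANDS = [22, 55, 94, 163, 203]  # values from Table 4-4

# Expand to connections per second, then divide by the number of cores.
cps = [value * 10_000 for value in CPS_IN_TEN_THOUSANDS]
cps_per_core = [total / n for total, n in zip(cps, CORES)]

for n, total, per_core in zip(CORES, cps, cps_per_core):
    print(f"{n:>2} cores: {total:>9,} conn/s total, {per_core:>10,.0f} conn/s per core")
```

Total connection rate rises with every step in core count, while the per-core rate decreases, so connection setup scales less linearly than the forwarding bandwidth in Table 4-1.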

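5 Summary

The functional verification and performance test results above show that the DPU card of Asterfusion X-T series switches can run DPVS to carry load-balancing services just like a general-purpose x86 or Arm server. A single DPU card lets DPVS reach 42.8 Gbps of forwarding bandwidth in Full-NAT mode, and an X312P-48Y-T equipped with two DPU cards can reach roughly 85 Gbps. For production deployments, a higher-performance DPU card can be selected according to business needs to handle larger traffic volumes.

Beyond Layer-4 load-balancing gateways, the DPU card supports a wider range of commercial scenarios, such as intelligent gateways (for example rate-limiting gateways, dedicated-line gateways, peering gateways, protocol gateways, east-west gateways, public service gateways…), DC Spine/Leaf fabrics, and traffic aggregation and distribution. Once a service is offloaded to the DPU card, it can also use the hardware acceleration units on the card to further improve performance.

6 Appendix 1: DPVS Configuration File Used During Testing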

root@OCTEONTX:/var/dpvs# cat /etc/dpvs.conf

!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!

! This is dpvs default configuration file.

!

! The attribute "<init>" denotes the configuration item at initialization stage. Item of

! this type is configured oneshoot and not reloadable. If invalid value configured in the

! file, dpvs would use its default value.

!

! Note that dpvs configuration file supports the following comment type:

! * line comment: using '#" or '!'

! * inline range comment: using '<' and '>', put comment in between

!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!

! global config

global_defs {

log_level INFO

log_file /var/log/dpvs.log

! log_async_mode on

}

! netif config

netif_defs {

<init> pktpool_size 65535

<init> pktpool_cache 32

<init> device dpdk0 {

rx {

queue_number 1

descriptor_number 1024

rss all

}

tx {

queue_number 1

descriptor_number 1024

}

fdir {

mode perfect

pballoc 64k

status matched

}

promisc_mode

kni_name dpdk0.kni

}

<init> device dpdk1 {

rx {

queue_number 1

descriptor_number 1024

rss all

}

tx {

queue_number 1

descriptor_number 1024

}

fdir {

mode perfect

pballoc 64k

status matched

}

promisc_mode

kni_name dpdk1.kni

}

! <init> bonding bond0 {

! mode 0

! slave dpdk0

! slave dpdk1

! primary dpdk0

! kni_name bond0.kni

!}

}

! worker config (lcores)

worker_defs {

<init> worker cpu0 {

type master

cpu_id 0

}

<init> worker cpu1 {

type slave

cpu_id 1

port dpdk0 {

rx_queue_ids 0

tx_queue_ids 0

! isol_rx_cpu_ids 9

! isol_rxq_ring_sz 1048576

}

port dpdk1 {

rx_queue_ids 0

tx_queue_ids 0

! isol_rx_cpu_ids 9

! isol_rxq_ring_sz 1048576

}

}

}

! timer config

timer_defs {

# cpu job loops to schedule dpdk timer management

schedule_interval 500

}

! dpvs neighbor config

neigh_defs {

<init> unres_queue_length 128

timeout 60

}

! dpvs ipv4 config

ipv4_defs {

forwarding on

<init> default_ttl 64

fragment {

<init> bucket_number 4096

<init> bucket_entries 16

<init> max_entries 4096

<init> ttl 1

}

}

! dpvs ipv6 config

ipv6_defs {

disable off

forwarding off

route6 {

<init> method hlist

recycle_time 10

}

}

! control plane config

ctrl_defs {

lcore_msg {

<init> ring_size 4096

sync_msg_timeout_us 20000

priority_level low

}

ipc_msg {

<init> unix_domain /var/run/dpvs_ctrl

}

}

! ipvs config

ipvs_defs {

conn {

<init> conn_pool_size 2097152

<init> conn_pool_cache 256

conn_init_timeout 3

! expire_quiescent_template

! fast_xmit_close

! <init> redirect off

}

udp {

! defence_udp_drop

uoa_mode opp

uoa_max_trail 3

timeout {

normal 300

last 3

}

}

tcp {

! defence_tcp_drop

timeout {

none 2

established 90

syn_sent 3

syn_recv 30

fin_wait 7

time_wait 7

close 3

close_wait 7

last_ack 7

listen 120

synack 30

last 2

}

synproxy {

synack_options {

mss 1452

ttl 63

sack

! wscale

! timestamp

}

! defer_rs_syn

rs_syn_max_retry 3

ack_storm_thresh 10

max_ack_saved 3

conn_reuse_state {

close

time_wait

! fin_wait

! close_wait

! last_ack

}

}

}

}

! sa_pool config

sa_pool {

pool_hash_size 16

}

root@OCTEONTX:/var/dpvs#
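The listing above defines a single forwarding worker (cpu1), whereas the performance tests use up to 23 cores. The document does not include the multi-core configuration, so the following Python sketch is only one plausible way to render additional worker blocks in the same syntax as the listing. It assumes one RX/TX queue per worker with the queue ID equal to the worker index, and CPU IDs assigned sequentially after the master. The `queue_number` values under `netif_defs` would presumably need to be raised to match. None of this has been validated against the tested setup.

```python
def render_workers(n_workers, ports=("dpdk0", "dpdk1")):
    """Render a worker_defs block with one master and n_workers slaves.

    Assumption: worker i (0-based) runs on cpu_id i + 1 and owns
    RX/TX queue i on every port.
    """
    lines = [
        "worker_defs {",
        "    <init> worker cpu0 {",
        "        type master",
        "        cpu_id 0",
        "    }",
    ]
    for i in range(n_workers):
        cpu = i + 1
        lines += [
            f"    <init> worker cpu{cpu} {{",
            "        type slave",
            f"        cpu_id {cpu}",
        ]
        for port in ports:
            lines += [
                f"        port {port} {{",
                f"            rx_queue_ids {i}",
                f"            tx_queue_ids {i}",
                "        }",
            ]
        lines.append("    }")
    lines.append("}")
    return "\n".join(lines)


single = render_workers(1)   # same structure as the listing above
double = render_workers(2)
print(double)
```

Calling `render_workers(1)` reproduces the structure of the `worker_defs` block in the listing (without its commented-out lines), which gives a simple way to check the generator before producing larger configurations.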