Configuration Guide: Installing, Deploying, and Performance-Testing the BeeGFS Parallel File System

1 Objective

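This document briefly introduces parallel file systems and BeeGFS, an open-source parallel file system. It then builds a 3-node test environment networked through an Asterfusion CX-N series ultra-low-latency cloud switch, and walks through its deployment, configuration, and performance testing.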

2 Overview

2.1 About Parallel File Systems

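A high-performance computing (HPC) system pools many compute resources to solve large computational problems quickly. To help the compute nodes in a cluster work together, an HPC system usually includes a parallel file system that all compute nodes share.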

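A parallel file system is a high-performance file system optimized for HPC workloads. Large deployments can deliver millisecond-level access latency, TB/s-class aggregate bandwidth, and millions of IOPS.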

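Parallel file systems suit fields that process large volumes of data and run highly parallel computations, for example:

- Scientific computing: weather forecasting, climate modeling, seismic simulation, fluid dynamics, biomedicine, physics, and other fields that process large amounts of experimental data and run complex computations;

- Industrial manufacturing: automotive design, aerospace, shipbuilding, complex machinery manufacturing, and other fields that need large-scale computation and simulation;

- Finance: securities trading, risk management, financial modeling, and other fields that process large volumes of transaction data and run complex computations;

- Animation production: film, television, games, and other fields that need large-scale rendering and image processing;

- Internet applications: large-scale data mining, search engines, social networks, e-commerce, and other fields that process large amounts of data and compute in real time.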

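HPC has shifted from traditional compute-intensive work, such as large-scale simulation, to data-driven, data-centric computing, such as producing, processing, and analyzing large datasets. This shift keeps pushing back-end storage toward higher performance and scalability.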

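In a parallel file system, files are split into pieces that are spread across multiple storage devices. The file system's placement algorithm decides where each piece goes, and all data is accessed through a global namespace. A client can read and write to several storage devices at once over multiple I/O paths.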
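Plain round-robin striping illustrates the placement idea. It is similar to the RAID0 stripe pattern that BeeGFS uses by default. The minimal sketch below assumes a fixed chunk size and a fixed, ordered list of targets. The helper name `stripe_locate` is hypothetical, and real file systems may choose targets differently.

```sh
#!/bin/sh
# stripe_locate OFFSET CHUNK_SIZE NUM_TARGETS
# Hypothetical helper: for a file striped round-robin (RAID-0 style) over
# NUM_TARGETS storage targets in chunks of CHUNK_SIZE bytes, print
#   <target index> <chunk index on that target> <byte offset inside the chunk>
# for the byte at OFFSET. It does not query any real file system.
stripe_locate() {
    offset=$1
    chunk_size=$2
    num_targets=$3
    file_chunk=$(( offset / chunk_size ))        # n-th chunk of the whole file
    target=$(( file_chunk % num_targets ))       # chunks are dealt out in turn
    target_chunk=$(( file_chunk / num_targets )) # position among that target's chunks
    inner=$(( offset % chunk_size ))             # byte position inside the chunk
    echo "$target $target_chunk $inner"
}
```

For example, `stripe_locate 1572900 524288 2` prints `1 1 36`. With 512 KiB chunks over two targets, that byte is in the file's fourth chunk, which is the second chunk stored on target 1. Consecutive chunks land on different devices, which is what lets a single client stream a file over several I/O paths in parallel.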

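Commonly used parallel file systems include:

- Lustre: an open-source parallel distributed file system. It was originally developed by Cluster File Systems, Inc., which Sun Microsystems later acquired, and is now maintained by the OpenSFS and European Open File Systems (EOFS) communities. It is widely used for HPC and large-scale data storage and is known for high performance, reliability, and scalability;

- BeeGFS: a high-performance, scalable parallel file system developed by the Fraunhofer Institute for Industrial Mathematics (ITWM). Clients access it through a POSIX-compliant client, and its mounts can be re-exported over NFS and SMB. It is widely used for HPC and large-scale data storage;

- IBM Spectrum Scale (formerly GPFS): a high-performance, scalable parallel file system developed by IBM for large-scale data storage and analytics. It supports several access protocols, including POSIX, NFS, SMB, and HDFS;

- Ceph: an open-source distributed storage system that provides block, object, and file interfaces, with highly reliable and scalable data storage and access;

- PVFS (Parallel Virtual File System): an open-source parallel file system developed jointly by Clemson University and Argonne National Laboratory. It is widely used in scientific and high-performance computing and is known for high performance and scalability.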

All of these systems offer high performance, reliability, and scalability, and they are widely used for HPC and for large-scale data storage and analysis.

2.2 About BeeGFS

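BeeGFS, originally named FhGFS, was designed and developed by the Fraunhofer Institute for industrial-mathematics computing. It performed well on small and medium-sized HPC systems in Europe and the US. In 2014 it was renamed and registered as BeeGFS, and it is now widely used in both research and commercial settings.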

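BeeGFS is both a network file system and a parallel file system. Clients communicate with the storage servers over the network. They can use TCP/IP, or any RDMA-capable interconnect such as InfiniBand, RoCE, or Omni-Path through the native verbs interface.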

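BeeGFS separates file contents from metadata. File contents are the data users want to store. Metadata is "data about data", such as access permissions, file size, and location. The most important piece of metadata says where a file's data lives across the storage servers. Once a client has the metadata for a file or directory, it talks directly to the Storage servers that hold the file to retrieve its contents.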

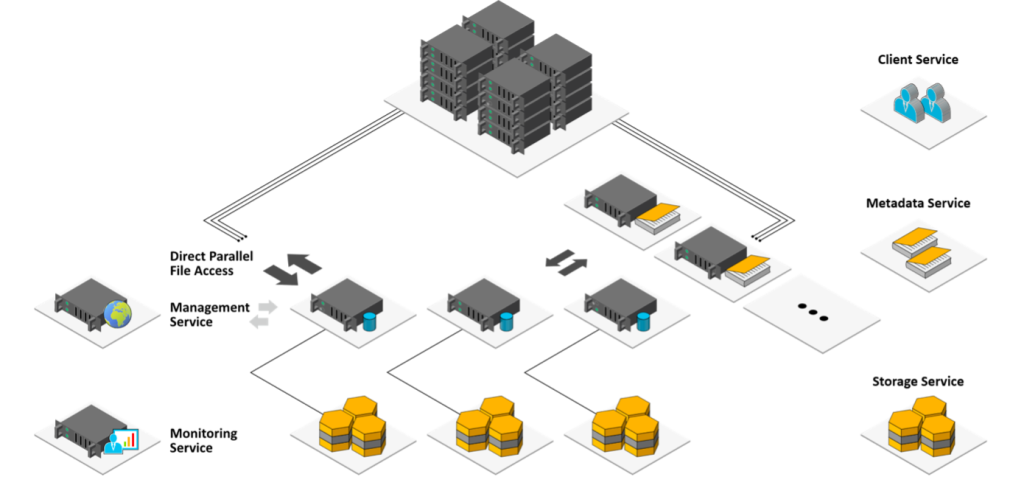

BeeGFS的Storage Servers和MetaData Servers的数量可以弹性伸缩。因此,可通过扩展到适当数量的服务器来满足不同性能要求,软件整体架构如下图所示:

图1:BeeGFS整体架构图

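| Component | Package(s) | Description |
| --- | --- | --- |
| Management service | beegfs-mgmtd | Monitors the state of all registered services and stores no user data. Note: the metadata, storage, and client services must all be configured to point at the management node's IP address. In a cluster it is normally the first service deployed, and there is exactly one. |
| Metadata service | beegfs-meta | Stores file metadata such as the directory structure, permissions, and the placement of each file's data chunks. Each file has one corresponding metadata file. When a client opens a file, the metadata service tells it which nodes hold the data, and the client then reads and writes directly against the storage services. The service scales out, so adding metadata services improves metadata performance. |
| Storage service | beegfs-storage | Stores the data chunk files produced by striping. |
| Client service | beegfs-client, beegfs-helperd | Mounts the cluster's storage. When the service starts, it automatically mounts the file system at a local path. That path can then be exported over NFS/Samba to Linux/Windows clients. Note: the mount path is set in /etc/beegfs/beegfs-mounts.conf. beegfs-helperd mainly handles log writing and needs no extra configuration. |
| Command-line tools | beegfs-utils, beegfs-common | Provide command-line tools such as beegfs-ctl and beegfs-df. |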
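Every other service is configured to point at the single management service. A consistency check across config files can therefore catch a node that was set up with the wrong management host. The minimal sketch below uses hypothetical helper names. It assumes each BeeGFS config file sets the management host on a `sysMgmtdHost = <host>` line, as beegfs-client.conf does in section 5.1.3.2.

```sh
#!/bin/sh
# mgmtd_host FILE: print the value of the first "sysMgmtdHost = <host>" line.
mgmtd_host() {
    awk -F'=' '$1 ~ /^[ \t]*sysMgmtdHost[ \t]*$/ {
        gsub(/[ \t]/, "", $2)   # drop the spaces around the value
        print $2
        exit
    }' "$1"
}

# check_mgmtd_consistent FILE...: print the shared management host if every
# file names the same non-empty one; otherwise report the problem and fail.
check_mgmtd_consistent() {
    expected=""
    for f in "$@"; do
        host=$(mgmtd_host "$f")
        if [ -z "$host" ]; then
            echo "$f: sysMgmtdHost is not set" >&2
            return 1
        fi
        if [ -z "$expected" ]; then
            expected=$host
        elif [ "$host" != "$expected" ]; then
            echo "$f: sysMgmtdHost=$host, expected $expected" >&2
            return 1
        fi
    done
    echo "$expected"
}
```

On Server4 in this setup, `check_mgmtd_consistent /etc/beegfs/beegfs-meta.conf /etc/beegfs/beegfs-storage.conf` should print `server5`, assuming the setup scripts record their `-m server5` argument as `sysMgmtdHost`.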

2.3 About the Asterfusion CX-N Series Ultra-Low-Latency Cloud Switches

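Asterfusion's in-house CX-N series ultra-low-latency cloud switches are designed for data-center networks. They provide high-performance networking for cloud data centers running HPC clusters, storage clusters, big-data analytics, high-frequency trading, converged Cloud OS deployments, and other multi-service scenarios.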

The test environment uses one CX532P-N. This 1U switch has 32 x 100GE QSFP28 optical ports and 2 x 10GE SFP+ optical ports, with a switching capacity of up to 6.4 Tbps.
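You can check the 6.4 Tbps figure against the port count. This assumes the usual vendor convention of counting both transmit and receive directions and including only the 100GE ports:

$$
32 \times 100\ \text{Gb/s} \times 2 = 6400\ \text{Gb/s} = 6.4\ \text{Tb/s}
$$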

3 Test Environment and Network Topology

3.1 Hardware and Materials

| Device type | Configuration | Quantity |
| --- | --- | --- |
| Switch | CX532P-N (1U, 32 x 100GE QSFP28, 2 x 10GE SFP+) | 1 |
| Modules and cables | 100G breakout 4x25G[10G] modules and cables | 1 |
| Server | CPU: Intel(R) Core(TM) i7-7700; Memory: 8GB; Disks: 1TB HDD + 1TB SSD | 1 |
| Server | CPU: Intel(R) Core(TM) i7-8700; Memory: 8GB; Disks: 1TB HDD + 1TB SSD | 1 |
| Server | CPU: Intel(R) Core(TM) i7-9700; Memory: 8GB; Disks: 1TB HDD + 1TB SSD | 1 |

3.2 System and Software Versions

| Device type | Hostname | Versions |
| --- | --- | --- |
| Switch | CX532P-N | AsterNOS Software, Version 3.1, R0314P06 |
| Server | server4 | OS: openEuler release 22.03 (LTS-SP1); Kernel: 5.10.0-136.35.0.111.oe2203sp1.x86_64; BeeGFS: 7.3.3; OFED driver: MLNX_OFED_LINUX-5.4-3.6.8.1-openeuler22.03-x86_64 |
| Server | server5 | OS: openEuler release 22.03 (LTS-SP1); Kernel: 5.10.0-136.35.0.111.oe2203sp1.x86_64; BeeGFS: 7.3.3; OFED driver: MLNX_OFED_LINUX-5.4-3.6.8.1-openeuler22.03-x86_64 |
| Server | server6 | OS: Rocky Linux release 8.8 (Green Obsidian); Kernel: 4.18.0-477.13.1.el8_8.x86_64; BeeGFS: 7.3.3; OFED driver: MLNX_OFED_LINUX-5.4-3.7.5.0-rhel8.8-x86_64 |

3.3 Storage System Plan

| Hostname | Node IPs | Node roles | Disk layout |
| --- | --- | --- | --- |
| server4 | Management: 10.230.1.54; Service-1: 172.16.8.54/24 | mgmtd, meta, storage | mgmtd: 50G NVMe; meta: 50G NVMe; storage: 500G NVMe |
| server5 | Management: 10.230.1.55; Service-1: 172.16.8.55/24 | mgmtd, meta, storage | mgmtd: 50G NVMe; meta: 50G NVMe; storage: 500G NVMe |
| server6 | Management: 10.230.1.56; Service-1: 172.16.8.56/24 | client, helperd | / |

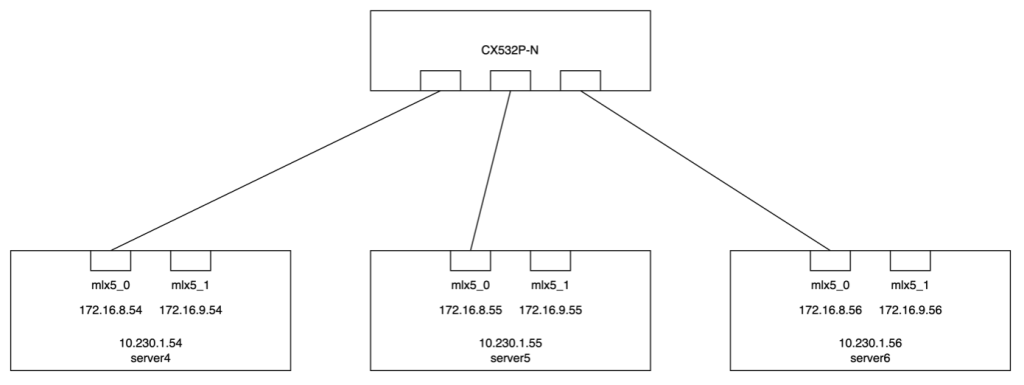

3.4 测试组网拓扑

图2:测试组网拓扑

4 Test Results

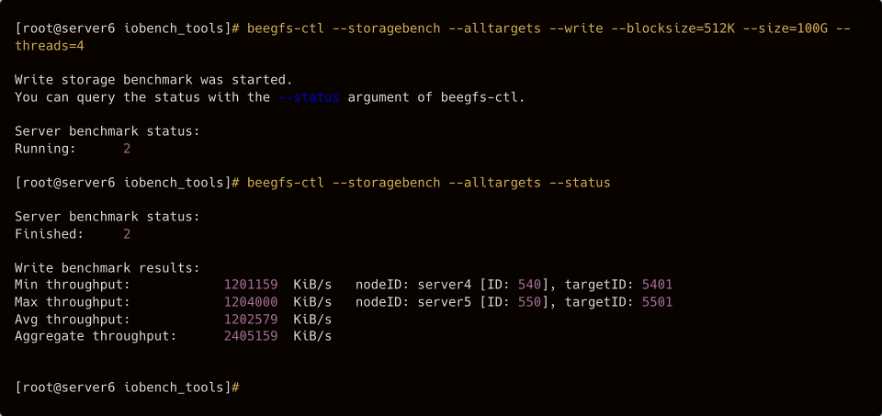

4.1 Run BeeGFS Bench

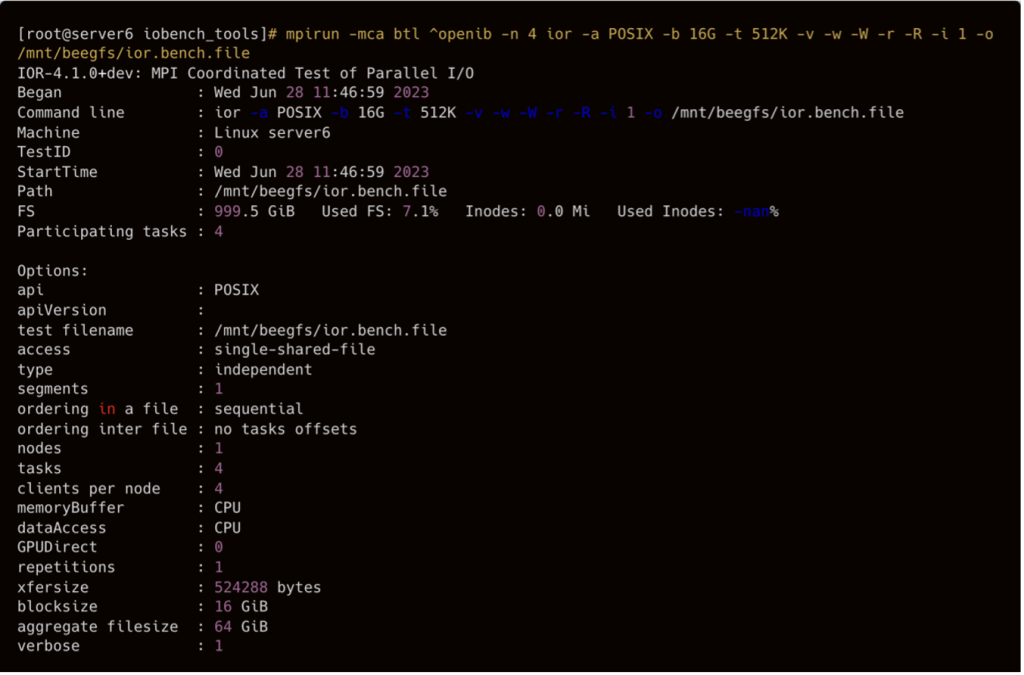

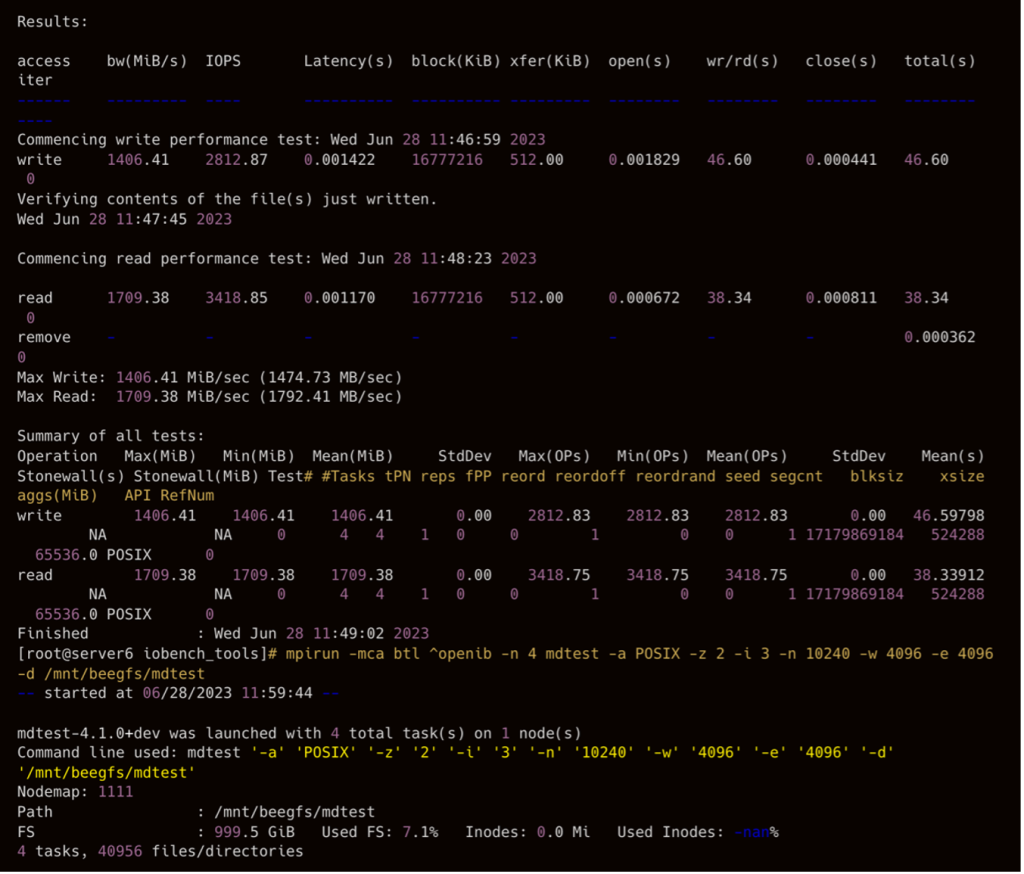

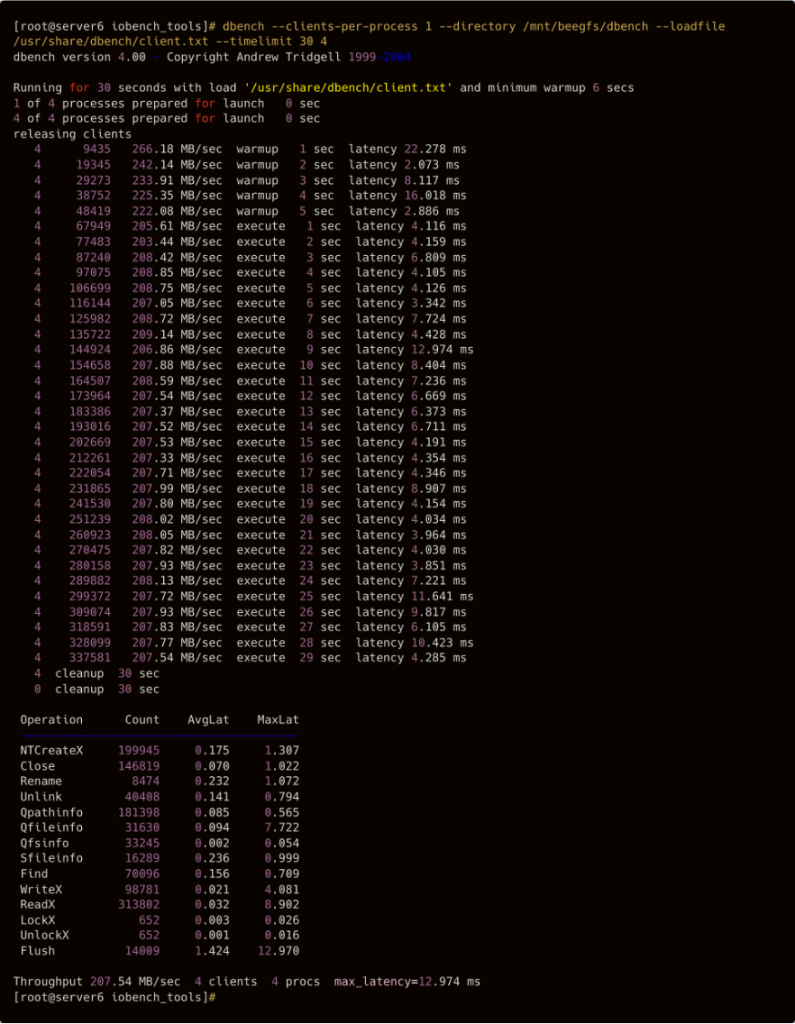

4.2 Run IOR and mdtest

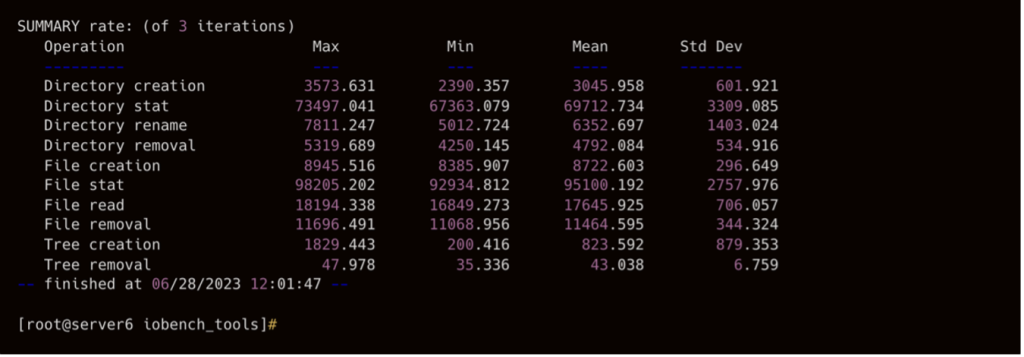

4.3 Run dbench

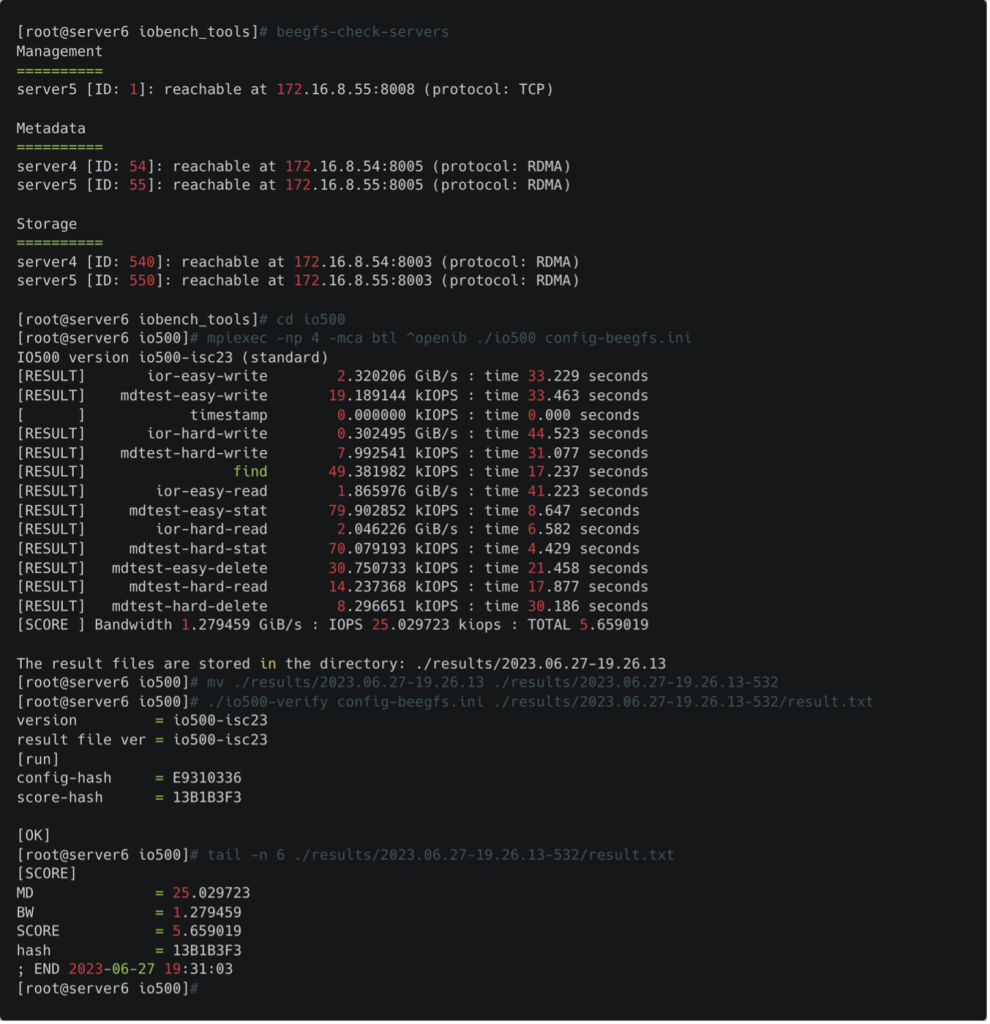

4.4 Run IO500

5 Configuration Reference

5.1 Servers

5.1.1 Install the Mellanox OFED Driver

Server4

```
# Download the driver package for this distribution
[root@server4 ~]# cat /etc/openEuler-release
openEuler release 22.03 (LTS-SP1)
[root@server4 ~]# wget https://content.mellanox.com/ofed/MLNX_OFED-5.4-3.6.8.1/MLNX_OFED_LINUX-5.4-3.6.8.1-openeuler22.03-x86_64.tgz
# Rebuild the driver package against the running kernel
[root@server4 ~]# tar xvf MLNX_OFED_LINUX-5.4-3.6.8.1-openeuler22.03-x86_64.tgz
[root@server4 ~]# cd MLNX_OFED_LINUX-5.4-3.6.8.1-openeuler22.03-x86_64
[root@server4 MLNX_OFED_LINUX-5.4-3.6.8.1-openeuler22.03-x86_64]# ./mlnx_add_kernel_support.sh -m /root/MLNX_OFED_LINUX-5.4-3.6.8.1-openeuler22.03-x86_64
[root@server4 MLNX_OFED_LINUX-5.4-3.6.8.1-openeuler22.03-x86_64]# cd ..
# Install the generated driver package
[root@server4 ~]# cp /tmp/MLNX_OFED_LINUX-5.4-3.6.8.1-openeuler22.03-x86_64-ext.tgz ./
[root@server4 ~]# tar xvf MLNX_OFED_LINUX-5.4-3.6.8.1-openeuler22.03-x86_64-ext.tgz
[root@server4 ~]# cd MLNX_OFED_LINUX-5.4-3.6.8.1-openeuler22.03-x86_64-ext
[root@server4 MLNX_OFED_LINUX-5.4-3.6.8.1-openeuler22.03-x86_64-ext]# ./mlnxofedinstall
# Regenerate the initramfs, then reboot so the new driver takes effect
[root@server4 MLNX_OFED_LINUX-5.4-3.6.8.1-openeuler22.03-x86_64-ext]# dracut -f
[root@server4 MLNX_OFED_LINUX-5.4-3.6.8.1-openeuler22.03-x86_64-ext]# reboot
# Start openibd and check the driver status
[root@server4 ~]# /etc/init.d/openibd restart
[root@server4 ~]# /etc/init.d/openibd status
HCA driver loaded
Configured Mellanox EN devices:
enp1s0f0
enp1s0f1
Currently active Mellanox devices:
enp1s0f0
enp1s0f1
The following OFED modules are loaded:
rdma_ucm
rdma_cm
ib_ipoib
mlx5_core
mlx5_ib
ib_uverbs
ib_umad
ib_cm
ib_core
mlxfw
```

Server5

```
# Download the driver package for this distribution
[root@server5 ~]# cat /etc/openEuler-release
openEuler release 22.03 (LTS-SP1)
[root@server5 ~]# wget https://content.mellanox.com/ofed/MLNX_OFED-5.4-3.6.8.1/MLNX_OFED_LINUX-5.4-3.6.8.1-openeuler22.03-x86_64.tgz
# Rebuild the driver package against the running kernel
[root@server5 ~]# tar xvf MLNX_OFED_LINUX-5.4-3.6.8.1-openeuler22.03-x86_64.tgz
[root@server5 ~]# cd MLNX_OFED_LINUX-5.4-3.6.8.1-openeuler22.03-x86_64
[root@server5 MLNX_OFED_LINUX-5.4-3.6.8.1-openeuler22.03-x86_64]# ./mlnx_add_kernel_support.sh -m /root/MLNX_OFED_LINUX-5.4-3.6.8.1-openeuler22.03-x86_64
[root@server5 MLNX_OFED_LINUX-5.4-3.6.8.1-openeuler22.03-x86_64]# cd ..
# Install the generated driver package
[root@server5 ~]# cp /tmp/MLNX_OFED_LINUX-5.4-3.6.8.1-openeuler22.03-x86_64-ext.tgz ./
[root@server5 ~]# tar xvf MLNX_OFED_LINUX-5.4-3.6.8.1-openeuler22.03-x86_64-ext.tgz
[root@server5 ~]# cd MLNX_OFED_LINUX-5.4-3.6.8.1-openeuler22.03-x86_64-ext
[root@server5 MLNX_OFED_LINUX-5.4-3.6.8.1-openeuler22.03-x86_64-ext]# ./mlnxofedinstall
# Regenerate the initramfs, then reboot so the new driver takes effect
[root@server5 MLNX_OFED_LINUX-5.4-3.6.8.1-openeuler22.03-x86_64-ext]# dracut -f
[root@server5 MLNX_OFED_LINUX-5.4-3.6.8.1-openeuler22.03-x86_64-ext]# reboot
# Start openibd and check the driver status
[root@server5 ~]# /etc/init.d/openibd restart
[root@server5 ~]# /etc/init.d/openibd status
HCA driver loaded
Configured Mellanox EN devices:
enp1s0f0
enp1s0f1
Currently active Mellanox devices:
enp1s0f0
enp1s0f1
The following OFED modules are loaded:
rdma_ucm
rdma_cm
ib_ipoib
mlx5_core
mlx5_ib
ib_uverbs
ib_umad
ib_cm
ib_core
mlxfw
```

Server6

```
# Download the driver package for this distribution
[root@server6 ~]# cat /etc/rocky-release
Rocky Linux release 8.8 (Green Obsidian)
[root@server6 ~]# wget https://content.mellanox.com/ofed/MLNX_OFED-5.4-3.7.5.0/MLNX_OFED_LINUX-5.4-3.7.5.0-rhel8.8-x86_64.tgz
# Rebuild the driver package against the running kernel
[root@server6 ~]# tar xvf MLNX_OFED_LINUX-5.4-3.7.5.0-rhel8.8-x86_64.tgz
[root@server6 ~]# cd MLNX_OFED_LINUX-5.4-3.7.5.0-rhel8.8-x86_64
[root@server6 MLNX_OFED_LINUX-5.4-3.7.5.0-rhel8.8-x86_64]# ./mlnx_add_kernel_support.sh -m /root/MLNX_OFED_LINUX-5.4-3.7.5.0-rhel8.8-x86_64
[root@server6 MLNX_OFED_LINUX-5.4-3.7.5.0-rhel8.8-x86_64]# cd ..
# Install the generated driver package
[root@server6 ~]# cp /tmp/MLNX_OFED_LINUX-5.4-3.7.5.0-rhel8.8-x86_64-ext.tgz ./
[root@server6 ~]# tar xvf MLNX_OFED_LINUX-5.4-3.7.5.0-rhel8.8-x86_64-ext.tgz
[root@server6 ~]# cd MLNX_OFED_LINUX-5.4-3.7.5.0-rhel8.8-x86_64-ext
[root@server6 MLNX_OFED_LINUX-5.4-3.7.5.0-rhel8.8-x86_64-ext]# ./mlnxofedinstall
# Regenerate the initramfs, then reboot so the new driver takes effect
[root@server6 MLNX_OFED_LINUX-5.4-3.7.5.0-rhel8.8-x86_64-ext]# dracut -f
[root@server6 MLNX_OFED_LINUX-5.4-3.7.5.0-rhel8.8-x86_64-ext]# reboot
# Start openibd and check the driver status
[root@server6 ~]# /etc/init.d/openibd restart
[root@server6 ~]# /etc/init.d/openibd status
HCA driver loaded
Configured Mellanox EN devices:
enp7s0
enp8s0
Currently active Mellanox devices:
enp7s0
enp8s0
The following OFED modules are loaded:
rdma_ucm
rdma_cm
ib_ipoib
mlx5_core
mlx5_ib
ib_uverbs
ib_umad
ib_cm
ib_core
mlxfw
```
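The openibd status output above is checked by eye. You can also script the same check against `lsmod` with the sketch below. The helper name is hypothetical, and the module names come from the status output above. Note that the BeeGFS client module later depends on `rdma_cm` and `ib_core`.

```sh
#!/bin/sh
# missing_modules MODULE...: read `lsmod` output on stdin and print
# "missing: <names>" for requested modules absent from the first column.
# Returns 0 when every requested module is loaded.
missing_modules() {
    loaded=$(awk 'NR > 1 { print $1 }')   # skip the "Module Size Used by" header
    missing=""
    for m in "$@"; do
        if ! printf '%s\n' "$loaded" | grep -qxF "$m"; then
            missing="$missing $m"
        fi
    done
    if [ -n "$missing" ]; then
        echo "missing:$missing"
        return 1
    fi
    return 0
}
```

Usage on any of the three servers: `lsmod | missing_modules mlx5_core mlx5_ib ib_core ib_uverbs rdma_cm` prints nothing and exits with status 0 when the OFED stack is loaded.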

5.1.2 Configure RoCEv2

Server4

```
[root@server4 ~]# ibdev2netdev
mlx5_0 port 1 ==> enp1s0f0 (Up)
mlx5_1 port 1 ==> enp1s0f1 (Up)
[root@server4 ~]# cat /etc/sysconfig/network-scripts/config-rocev2.sh
#enp1s0f0
mlnx_qos -i enp1s0f0 --trust dscp
mlnx_qos -i enp1s0f0 --pfc 0,0,0,0,1,0,0,0
cma_roce_mode -d mlx5_0 -p 1 -m 2
echo 128 > /sys/class/infiniband/mlx5_0/tc/1/traffic_class
cma_roce_tos -d mlx5_0 -t 128
echo 1 > /sys/class/net/enp1s0f0/ecn/roce_np/enable/1
echo 1 > /sys/class/net/enp1s0f0/ecn/roce_rp/enable/1
echo 40 > /sys/class/net/enp1s0f0/ecn/roce_np/cnp_dscp
sysctl -w net.ipv4.tcp_ecn=1
# enp1s0f1
mlnx_qos -i enp1s0f1 --trust dscp
mlnx_qos -i enp1s0f1 --pfc 0,0,0,0,1,0,0,0
cma_roce_mode -d mlx5_1 -p 1 -m 2
echo 128 > /sys/class/infiniband/mlx5_1/tc/1/traffic_class
cma_roce_tos -d mlx5_1 -t 128
echo 1 > /sys/class/net/enp1s0f1/ecn/roce_np/enable/1
echo 1 > /sys/class/net/enp1s0f1/ecn/roce_rp/enable/1
echo 40 > /sys/class/net/enp1s0f1/ecn/roce_np/cnp_dscp
[root@server4 ~]# mlnx_qos -i enp1s0f0
DCBX mode: OS controlled
Priority trust state: dscp
dscp2prio mapping:
prio:0 dscp:07,06,05,04,03,02,01,00,
prio:1 dscp:15,14,13,12,11,10,09,08,
prio:2 dscp:23,22,21,20,19,18,17,16,
prio:3 dscp:31,30,29,28,27,26,25,24,
prio:4 dscp:39,38,37,36,35,34,33,32,
prio:5 dscp:47,46,45,44,43,42,41,40,
prio:6 dscp:55,54,53,52,51,50,49,48,
prio:7 dscp:63,62,61,60,59,58,57,56,
default priority:
Receive buffer size (bytes): 130944,130944,0,0,0,0,0,0,
Cable len: 7
PFC configuration:
priority 0 1 2 3 4 5 6 7
enabled 0 0 0 0 1 0 0 0
buffer 0 0 0 0 1 0 0 0
tc: 0 ratelimit: unlimited, tsa: strict
priority: 0
priority: 1
priority: 2
priority: 3
priority: 4
priority: 5
priority: 6
priority: 7
[root@server4 ~]# mlnx_qos -i enp1s0f1
DCBX mode: OS controlled
Priority trust state: dscp
dscp2prio mapping:
prio:0 dscp:07,06,05,04,03,02,01,00,
prio:1 dscp:15,14,13,12,11,10,09,08,
prio:2 dscp:23,22,21,20,19,18,17,16,
prio:3 dscp:31,30,29,28,27,26,25,24,
prio:4 dscp:39,38,37,36,35,34,33,32,
prio:5 dscp:47,46,45,44,43,42,41,40,
prio:6 dscp:55,54,53,52,51,50,49,48,
prio:7 dscp:63,62,61,60,59,58,57,56,
default priority:
Receive buffer size (bytes): 130944,130944,0,0,0,0,0,0,
Cable len: 7
PFC configuration:
priority 0 1 2 3 4 5 6 7
enabled 0 0 0 0 1 0 0 0
buffer 0 0 0 0 1 0 0 0
tc: 0 ratelimit: unlimited, tsa: strict
priority: 0
priority: 1
priority: 2
priority: 3
priority: 4
priority: 5
priority: 6
priority: 7
[root@server4 ~]# cat /sys/class/net/*/ecn/roce_np/cnp_dscp
40
40
```
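This is how the values in config-rocev2.sh fit together:

- `cma_roce_tos -t 128` and the `traffic_class` setting both mark RoCE traffic with ToS 128, which is DSCP 32.
- With `--trust dscp` and the default dscp2prio table shown above, DSCP 32 maps to priority 4. That is the only priority on which `--pfc 0,0,0,0,1,0,0,0` enables PFC.
- CNPs are sent with DSCP 40, which maps to priority 5, where PFC is off.

The arithmetic sketch below uses illustrative function names and assumes the default mapping shown above:

```sh
#!/bin/sh
# dscp_to_prio DSCP: priority under the default dscp2prio table printed by
# mlnx_qos above, where each block of 8 DSCP values maps to one priority.
dscp_to_prio() {
    echo $(( $1 / 8 ))
}

# tos_to_prio TOS: take the DSCP from the upper 6 bits of an IP ToS byte
# (the lower 2 bits are ECN and do not affect the priority) and print
# "<dscp> <priority>".
tos_to_prio() {
    dscp=$(( $1 >> 2 ))
    echo "$dscp $(dscp_to_prio "$dscp")"
}
```

`tos_to_prio 128` prints `32 4` and `dscp_to_prio 40` prints `5`. If you change the ToS in the script, recheck that the resulting priority still matches the PFC-enabled priority on both the NICs and the switch.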

Server5

```
[root@server5 ~]# ibdev2netdev
mlx5_0 port 1 ==> enp1s0f0 (Up)
mlx5_1 port 1 ==> enp1s0f1 (Up)
[root@server5 ~]# cat /etc/sysconfig/network-scripts/config-rocev2.sh
#enp1s0f0
mlnx_qos -i enp1s0f0 --trust dscp
mlnx_qos -i enp1s0f0 --pfc 0,0,0,0,1,0,0,0
cma_roce_mode -d mlx5_0 -p 1 -m 2
echo 128 > /sys/class/infiniband/mlx5_0/tc/1/traffic_class
cma_roce_tos -d mlx5_0 -t 128
echo 1 > /sys/class/net/enp1s0f0/ecn/roce_np/enable/1
echo 1 > /sys/class/net/enp1s0f0/ecn/roce_rp/enable/1
echo 40 > /sys/class/net/enp1s0f0/ecn/roce_np/cnp_dscp
sysctl -w net.ipv4.tcp_ecn=1
# enp1s0f1
mlnx_qos -i enp1s0f1 --trust dscp
mlnx_qos -i enp1s0f1 --pfc 0,0,0,0,1,0,0,0
cma_roce_mode -d mlx5_1 -p 1 -m 2
echo 128 > /sys/class/infiniband/mlx5_1/tc/1/traffic_class
cma_roce_tos -d mlx5_1 -t 128
echo 1 > /sys/class/net/enp1s0f1/ecn/roce_np/enable/1
echo 1 > /sys/class/net/enp1s0f1/ecn/roce_rp/enable/1
echo 40 > /sys/class/net/enp1s0f1/ecn/roce_np/cnp_dscp
[root@server5 ~]# mlnx_qos -i enp1s0f0
DCBX mode: OS controlled
Priority trust state: dscp
dscp2prio mapping:
prio:0 dscp:07,06,05,04,03,02,01,00,
prio:1 dscp:15,14,13,12,11,10,09,08,
prio:2 dscp:23,22,21,20,19,18,17,16,
prio:3 dscp:31,30,29,28,27,26,25,24,
prio:4 dscp:39,38,37,36,35,34,33,32,
prio:5 dscp:47,46,45,44,43,42,41,40,
prio:6 dscp:55,54,53,52,51,50,49,48,
prio:7 dscp:63,62,61,60,59,58,57,56,
default priority:
Receive buffer size (bytes): 130944,130944,0,0,0,0,0,0,
Cable len: 7
PFC configuration:
priority 0 1 2 3 4 5 6 7
enabled 0 0 0 0 1 0 0 0
buffer 0 0 0 0 1 0 0 0
tc: 0 ratelimit: unlimited, tsa: strict
priority: 0
priority: 1
priority: 2
priority: 3
priority: 4
priority: 5
priority: 6
priority: 7
[root@server5 ~]# mlnx_qos -i enp1s0f1
DCBX mode: OS controlled
Priority trust state: dscp
dscp2prio mapping:
prio:0 dscp:07,06,05,04,03,02,01,00,
prio:1 dscp:15,14,13,12,11,10,09,08,
prio:2 dscp:23,22,21,20,19,18,17,16,
prio:3 dscp:31,30,29,28,27,26,25,24,
prio:4 dscp:39,38,37,36,35,34,33,32,
prio:5 dscp:47,46,45,44,43,42,41,40,
prio:6 dscp:55,54,53,52,51,50,49,48,
prio:7 dscp:63,62,61,60,59,58,57,56,
default priority:
Receive buffer size (bytes): 130944,130944,0,0,0,0,0,0,
Cable len: 7
PFC configuration:
priority 0 1 2 3 4 5 6 7
enabled 0 0 0 0 1 0 0 0
buffer 0 0 0 0 1 0 0 0
tc: 0 ratelimit: unlimited, tsa: strict
priority: 0
priority: 1
priority: 2
priority: 3
priority: 4
priority: 5
priority: 6
priority: 7
[root@server5 ~]# cat /sys/class/net/*/ecn/roce_np/cnp_dscp
40
40
```

Server6

```
[root@server6 ~]# ibdev2netdev
mlx5_0 port 1 ==> enp7s0 (Up)
mlx5_1 port 1 ==> enp8s0 (Up)
[root@server6 ~]# cat /etc/sysconfig/network-scripts/config-rocev2.sh
#enp7s0
mlnx_qos -i enp7s0 --trust dscp
mlnx_qos -i enp7s0 --pfc 0,0,0,0,1,0,0,0
cma_roce_mode -d mlx5_0 -p 1 -m 2
echo 128 > /sys/class/infiniband/mlx5_0/tc/1/traffic_class
cma_roce_tos -d mlx5_0 -t 128
echo 1 > /sys/class/net/enp7s0/ecn/roce_np/enable/1
echo 1 > /sys/class/net/enp7s0/ecn/roce_rp/enable/1
echo 40 > /sys/class/net/enp7s0/ecn/roce_np/cnp_dscp
sysctl -w net.ipv4.tcp_ecn=1
# enp8s0
mlnx_qos -i enp8s0 --trust dscp
mlnx_qos -i enp8s0 --pfc 0,0,0,0,1,0,0,0
cma_roce_mode -d mlx5_1 -p 1 -m 2
echo 128 > /sys/class/infiniband/mlx5_1/tc/1/traffic_class
cma_roce_tos -d mlx5_1 -t 128
echo 1 > /sys/class/net/enp8s0/ecn/roce_np/enable/1
echo 1 > /sys/class/net/enp8s0/ecn/roce_rp/enable/1
echo 40 > /sys/class/net/enp8s0/ecn/roce_np/cnp_dscp
[root@server6 ~]# mlnx_qos -i enp7s0
DCBX mode: OS controlled
Priority trust state: dscp
dscp2prio mapping:
prio:0 dscp:07,06,05,04,03,02,01,00,
prio:1 dscp:15,14,13,12,11,10,09,08,
prio:2 dscp:23,22,21,20,19,18,17,16,
prio:3 dscp:31,30,29,28,27,26,25,24,
prio:4 dscp:39,38,37,36,35,34,33,32,
prio:5 dscp:47,46,45,44,43,42,41,40,
prio:6 dscp:55,54,53,52,51,50,49,48,
prio:7 dscp:63,62,61,60,59,58,57,56,
default priority:
Receive buffer size (bytes): 130944,130944,0,0,0,0,0,0,max_buffer_size=262016
Cable len: 7
PFC configuration:
priority 0 1 2 3 4 5 6 7
enabled 0 0 0 0 1 0 0 0
buffer 0 0 0 0 1 0 0 0
tc: 0 ratelimit: unlimited, tsa: strict
priority: 0
priority: 1
priority: 2
priority: 3
priority: 4
priority: 5
priority: 6
priority: 7
[root@server6 ~]# mlnx_qos -i enp8s0
DCBX mode: OS controlled
Priority trust state: dscp
dscp2prio mapping:
prio:0 dscp:07,06,05,04,03,02,01,00,
prio:1 dscp:15,14,13,12,11,10,09,08,
prio:2 dscp:23,22,21,20,19,18,17,16,
prio:3 dscp:31,30,29,28,27,26,25,24,
prio:4 dscp:39,38,37,36,35,34,33,32,
prio:5 dscp:47,46,45,44,43,42,41,40,
prio:6 dscp:55,54,53,52,51,50,49,48,
prio:7 dscp:63,62,61,60,59,58,57,56,
default priority:
Receive buffer size (bytes): 130944,130944,0,0,0,0,0,0,max_buffer_size=262016
Cable len: 7
PFC configuration:
priority 0 1 2 3 4 5 6 7
enabled 0 0 0 0 1 0 0 0
buffer 0 0 0 0 1 0 0 0
tc: 0 ratelimit: unlimited, tsa: strict
priority: 0
priority: 1
priority: 2
priority: 3
priority: 4
priority: 5
priority: 6
priority: 7
[root@server6 ~]# cat /sys/class/net/*/ecn/roce_np/cnp_dscp
40
40
```
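All six ports should report PFC enabled on priority 4 only. The minimal sketch below (hypothetical helper name) extracts the enabled priorities from the `mlnx_qos -i <dev>` output format shown above. It reads the `enabled` row, whose eight flags correspond to priorities 0-7.

```sh
#!/bin/sh
# pfc_enabled_prios: read `mlnx_qos -i <dev>` output on stdin and print the
# priorities whose PFC "enabled" flag is 1, separated by spaces.
pfc_enabled_prios() {
    awk '$1 == "enabled" {
        sep = ""
        for (i = 2; i <= NF; i++)          # fields 2..9 are priorities 0..7
            if ($i == 1) { printf "%s%d", sep, i - 2; sep = " " }
        print ""
        exit
    }'
}
```

Usage on Server4, for example: `for dev in enp1s0f0 enp1s0f1; do [ "$(mlnx_qos -i "$dev" | pfc_enabled_prios)" = "4" ] || echo "$dev: unexpected PFC priorities"; done`.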

5.1.3 Deploy BeeGFS

5.1.3.1 Install the Packages for Each Service

Server4: meta, storage, mgmtd

```
[root@server4 ~]# cd /etc/yum.repos.d/
[root@server4 yum.repos.d]# wget https://www.beegfs.io/release/beegfs_7.3.3/dists/beegfs-rhel8.repo
[root@server4 yum.repos.d]# yum makecache
[root@server4 yum.repos.d]# cd
[root@server4 ~]# yum install beegfs-mgmtd
[root@server4 ~]# yum install beegfs-meta libbeegfs-ib
[root@server4 ~]# yum install beegfs-storage libbeegfs-ib
```

Server5: meta, storage, mgmtd

```
[root@server5 ~]# cd /etc/yum.repos.d/
[root@server5 yum.repos.d]# wget https://www.beegfs.io/release/beegfs_7.3.3/dists/beegfs-rhel8.repo
[root@server5 yum.repos.d]# yum makecache
[root@server5 yum.repos.d]# cd
[root@server5 ~]# yum install beegfs-mgmtd
[root@server5 ~]# yum install beegfs-meta libbeegfs-ib
[root@server5 ~]# yum install beegfs-storage libbeegfs-ib
```

Server6: client

```
[root@server6 ~]# cd /etc/yum.repos.d/
[root@server6 yum.repos.d]# wget https://www.beegfs.io/release/beegfs_7.3.3/dists/beegfs-rhel8.repo
[root@server6 yum.repos.d]# yum makecache
[root@server6 yum.repos.d]# cd
[root@server6 ~]# yum install beegfs-client beegfs-helperd beegfs-utils
```

5.1.3.2 Build the Client Kernel Module

```
[root@server6 ~]# cat /etc/beegfs/beegfs-client-autobuild.conf
# This is a config file for the automatic build process of BeeGFS client kernel
# modules.
# http://www.beegfs.com
#
# --- Section: [Notes] ---
#
# General Notes
# =============
# To force a rebuild of the client modules:
# $ /etc/init.d/beegfs-client rebuild
#
# To see a list of available build arguments:
# $ make help -C /opt/beegfs/src/client/client_module_${BEEGFS_MAJOR_VERSION}/build
#
# Help example for BeeGFS 2015.03 release:
# $ make help -C /opt/beegfs/src/client/client_module_2015.03/build
# RDMA Support Notes
# ==================
# If you installed InfiniBand kernel modules from OpenFabrics OFED, then also
# define the correspsonding header include path by adding
# "OFED_INCLUDE_PATH=<path>" to the "buildArgs", where <path> usually is
# "/usr/src/openib/include" or "/usr/src/ofa_kernel/default/include" for
# Mellanox OFED.
#
# OFED headers are automatically detected even if OFED_INCLUDE_PATH is not
# defined. To build the client without RDMA support, define BEEGFS_NO_RDMA=1.
#
# NVIDIA GPUDirect Storage Support Notes
# ==================
# If you want to build BeeGFS with NVIDIA GPUDirect Storage support, add
# "NVFS_INCLUDE_PATH=<path>" to the "buildArgs" below, where path is the directory
# that contains nvfs-dma.h. This is usually the nvidia-fs source directory:
# /usr/src/nvidia-fs-VERSION.
#
# If config-host.h is not present in NVFS_INCLUDE_PATH, execute the configure
# script. Example:
# $ cd /usr/src/nvidia-fs-2.13.5
# $ ./configure
#
# NVIDIA_INCLUDE_PATH must be defined and point to the NVIDIA driver source:
# /usr/src/nvidia-VERSION/nvidia
#
# OFED_INCLUDE_PATH must be defined and point to Mellanox OFED.
#
#
# --- Section: [Build Settings] ---
#
# Build Settings
# ==============
# These are the arguments for the client module "make" command.
#
# Note: Quotation marks and equal signs can be used without escape characters
# here.
#
# Example1:
# buildArgs=-j8
#
# Example2 (see "RDMA Support Notes" above):
# buildArgs=-j8 OFED_INCLUDE_PATH=/usr/src/openib/include
#
# Example3 (see "NVIDIA GPUDirect Storage Support Notes" above):
# buildArgs=-j8 OFED_INCLUDE_PATH=/usr/src/ofa_kernel/default/include \
# NVFS_INCLUDE_PATH=/usr/src/nvidia-fs-2.13.5 \
# NVIDIA_INCLUDE_PATH=/usr/src/nvidia-520.61.05/nvidia
#
# Default:
# buildArgs=-j8
buildArgs=-j8 OFED_INCLUDE_PATH=/usr/src/ofa_kernel/default/include
# Turn Autobuild on/off
# =====================
# Controls whether modules will be built on "/etc/init.d/beegfs-client start".
#
# Note that even if autobuild is enabled here, the modules will only be built
# if no beegfs kernel module for the current kernel version exists in
# "/lib/modules/<kernel_version>/updates/".
#
# Default:
# buildEnabled=true
buildEnabled=true
```
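With MLNX_OFED installed, `buildArgs` points the client-module build at the headers under /usr/src/ofa_kernel/default/include. The pre-flight sketch below reads that setting back and confirms the directory exists on this host before you run `/etc/init.d/beegfs-client rebuild`. Its helper names are hypothetical, and it assumes a single uncommented `buildArgs=` line like the one above.

```sh
#!/bin/sh
# ofed_include_path CONF: print the OFED_INCLUDE_PATH from the uncommented
# buildArgs line of beegfs-client-autobuild.conf (empty if it is not set).
ofed_include_path() {
    sed -n 's/^buildArgs=.*OFED_INCLUDE_PATH=\([^ ]*\).*/\1/p' "$1"
}

# check_ofed_include CONF: print the configured path if it exists as a
# directory; otherwise report the problem on stderr and fail.
check_ofed_include() {
    p=$(ofed_include_path "$1")
    if [ -z "$p" ]; then
        echo "OFED_INCLUDE_PATH not set in $1" >&2
        return 1
    fi
    if [ ! -d "$p" ]; then
        echo "missing directory: $p" >&2
        return 1
    fi
    echo "$p"
}
```

Usage: `check_ofed_include /etc/beegfs/beegfs-client-autobuild.conf && /etc/init.d/beegfs-client rebuild`.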

```
[root@server6 ~]# cat /etc/beegfs/beegfs-client.conf
# This is a config file for BeeGFS clients.
# http://www.beegfs.com
# --- [Table of Contents] ---
#
# 1) Settings
# 2) Mount Options
# 3) Basic Settings Documentation
# 4) Advanced Settings Documentation
#
# --- Section 1.1: [Basic Settings] ---
#
sysMgmtdHost = server5
#
# --- Section 1.2: [Advanced Settings] ---
#
connAuthFile =
connDisableAuthentication = true
connClientPortUDP = 8004
connHelperdPortTCP = 8006
connMgmtdPortTCP = 8008
connMgmtdPortUDP = 8008
connPortShift = 0
connCommRetrySecs = 600
connFallbackExpirationSecs = 900
connInterfacesFile = /etc/beegfs/interface.conf
connRDMAInterfacesFile = /etc/beegfs/interface.conf
connMaxInternodeNum = 12
connMaxConcurrentAttempts = 0
connNetFilterFile = /etc/beegfs/network.conf
connUseRDMA = true
connTCPFallbackEnabled = true
connTCPRcvBufSize = 0
connUDPRcvBufSize = 0
connRDMABufNum = 70
connRDMABufSize = 8192
connRDMATypeOfService = 0
connTcpOnlyFilterFile =
logClientID = false
logHelperdIP =
logLevel = 3
logType = helperd
quotaEnabled = false
sysCreateHardlinksAsSymlinks = false
sysMountSanityCheckMS = 11000
sysSessionCheckOnClose = false
sysSyncOnClose = false
sysTargetOfflineTimeoutSecs = 900
sysUpdateTargetStatesSecs = 30
sysXAttrsEnabled = false
tuneFileCacheType = buffered
tunePreferredMetaFile =
tunePreferredStorageFile =
tuneRemoteFSync = true
tuneUseGlobalAppendLocks = false
tuneUseGlobalFileLocks = false
#
# --- Section 1.3: [Enterprise Features] ---
#
# See end-user license agreement for definition and usage limitations of
# enterprise features.
#
sysACLsEnabled = false
```
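The client refers to the management host by name (`sysMgmtdHost = server5`), and the setup commands in 5.1.3.3 pass `-m server5`. Every node must therefore be able to resolve the server hostnames. The guide does not show how name resolution was configured. One possible /etc/hosts fragment follows, assuming the names should resolve to the 172.16.8.0/24 service addresses from the storage plan in section 3.3:

```text
# /etc/hosts on every node (assumed; not part of the original setup shown here)
172.16.8.54   server4
172.16.8.55   server5
172.16.8.56   server6
```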

```
[root@server6 ~]# mkdir /mnt/beegfs
[root@server6 ~]# cat /etc/beegfs/beegfs-mounts.conf
/mnt/beegfs /etc/beegfs/beegfs-client.conf
[root@server6 ~]# cat /etc/beegfs/interface.conf
enp7s0
[root@server6 ~]# cat /etc/beegfs/network.conf
172.16.8.0/24
```
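`connNetFilterFile` points at network.conf, which lists the subnets the client may use to reach servers. Here the only entry is 172.16.8.0/24, so BeeGFS traffic should stay on the RoCE service network rather than the 10.230.1.x management addresses. The minimal sketch below shows the subnet-membership test this filter implies. The helper names are hypothetical, and this is not BeeGFS's own implementation.

```sh
#!/bin/sh
# ip_to_int A.B.C.D: print the IPv4 address as an unsigned 32-bit integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1            # split the dotted quad into $1..$4
    IFS=$old_ifs
    echo $(( (($1 * 256 + $2) * 256 + $3) * 256 + $4 ))
}

# ip_in_cidr IP NET/LEN: succeed if IP lies inside the subnet NET/LEN.
ip_in_cidr() {
    net=${2%/*}
    len=${2#*/}
    block=$(( 1 << (32 - len) ))   # number of addresses in such a subnet
    [ $(( $(ip_to_int "$1") / block )) -eq $(( $(ip_to_int "$net") / block )) ]
}
```

For example, `ip_in_cidr 172.16.8.55 172.16.8.0/24` succeeds, while `ip_in_cidr 10.230.1.55 172.16.8.0/24` fails. That is why only the service-network addresses of server4 and server5 should appear in the client's connection lists.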

```
[root@server6 ~]# /etc/init.d/beegfs-client rebuild
[root@server6 ~]# systemctl restart beegfs-client
[root@server6 ~]# systemctl status beegfs-client
● beegfs-client.service - Start BeeGFS Client
Loaded: loaded (/usr/lib/systemd/system/beegfs-client.service; enabled; vendor preset: disabled)
Active: active (exited) since Tue 2023-06-27 19:25:17 CST; 18min ago
Process: 22301 ExecStop=/etc/init.d/beegfs-client stop (code=exited, status=0/SUCCESS)
Process: 22323 ExecStart=/etc/init.d/beegfs-client start (code=exited, status=0/SUCCESS)
Main PID: 22323 (code=exited, status=0/SUCCESS)
Jun 27 19:25:17 server6 systemd[1]: Starting Start BeeGFS Client...
Jun 27 19:25:17 server6 beegfs-client[22323]: Starting BeeGFS Client:
Jun 27 19:25:17 server6 beegfs-client[22323]: - Loading BeeGFS modules
Jun 27 19:25:17 server6 beegfs-client[22323]: - Mounting directories from /etc/beegfs/beegfs-mounts.conf
Jun 27 19:25:17 server6 systemd[1]: Started Start BeeGFS Client.
[root@server6 ~]# lsmod | grep beegfs
beegfs 540672 1
rdma_cm 118784 2 beegfs,rdma_ucm
ib_core 425984 9 beegfs,rdma_cm,ib_ipoib,iw_cm,ib_umad,rdma_ucm,ib_uverbs,mlx5_ib,ib_cm
mlx_compat 16384 12 beegfs,rdma_cm,ib_ipoib,mlxdevm,iw_cm,ib_umad,ib_core,rdma_ucm,ib_uverbs,mlx5_ib,ib_cm,mlx5_core
```

5.1.3.3 BeeGFS Configuration

Allocate storage space on Server4 and Server5.

```
[root@server4 ~]# mkdir -p /mnt/beegfs/{mgmtd,meta,storage}
[root@server4 ~]# fdisk -l /dev/nvme0n1
Disk /dev/nvme0n1: 953.87 GiB, 1024209543168 bytes, 2000409264 sectors
Disk model: ZHITAI TiPlus5000 1TB
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: gpt
Disk identifier: 090F6714-0F4E-E543-8293-10A0405490DE
Device Start End Sectors Size Type
/dev/nvme0n1p1 2048 104859647 104857600 50G Linux filesystem
/dev/nvme0n1p2 104859648 209717247 104857600 50G Linux filesystem
/dev/nvme0n1p3 209717248 1258293247 1048576000 500G Linux filesystem
[root@server4 ~]# mkfs.ext4 /dev/nvme0n1p1
[root@server4 ~]# mkfs.ext4 /dev/nvme0n1p2
[root@server4 ~]# mkfs.xfs /dev/nvme0n1p3
[root@server4 ~]# mount /dev/nvme0n1p1 /mnt/beegfs/mgmtd/
[root@server4 ~]# mount /dev/nvme0n1p2 /mnt/beegfs/meta/
[root@server4 ~]# mount /dev/nvme0n1p3 /mnt/beegfs/storage/
```
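The partition sizes follow from the sector counts. For example, nvme0n1p1 spans sectors 2048 to 104859647. That is 104859647 - 2048 + 1 = 104857600 sectors, and 104857600 x 512 bytes = 50 GiB. Likewise, nvme0n1p3 has 1048576000 sectors, or 500 GiB. The sketch below performs the same conversion over `fdisk -l` partition lines. It assumes the column layout shown above, with Sectors in the fourth column, and the helper name is hypothetical.

```sh
#!/bin/sh
# partition_sizes SECTOR_SIZE: read `fdisk -l` output on stdin and print
# "<device> <size in whole GiB, rounded down>" for each partition line.
partition_sizes() {
    awk -v ss="$1" '$1 ~ /^\/dev\// {
        printf "%s %d\n", $1, $4 * ss / 1073741824   # 1 GiB = 2^30 bytes
    }'
}
```

Usage: `fdisk -l /dev/nvme0n1 | partition_sizes 512` should list 50, 50, and 500 for the three partitions planned in section 3.3.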

```
[root@server5 ~]# mkdir -p /mnt/beegfs/{mgmtd,meta,storage}
[root@server5 ~]# fdisk -l /dev/nvme0n1
Disk /dev/nvme0n1: 953.87 GiB, 1024209543168 bytes, 2000409264 sectors
Disk model: ZHITAI TiPlus5000 1TB
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: gpt
Disk identifier: A64F55F2-0650-8A40-BE56-BC451387B729
Device Start End Sectors Size Type
/dev/nvme0n1p1 2048 104859647 104857600 50G Linux filesystem
/dev/nvme0n1p2 104859648 209717247 104857600 50G Linux filesystem
/dev/nvme0n1p3 209717248 1258293247 1048576000 500G Linux filesystem
[root@server5 ~]# mkfs.ext4 /dev/nvme0n1p1
[root@server5 ~]# mkfs.ext4 /dev/nvme0n1p2
[root@server5 ~]# mkfs.xfs /dev/nvme0n1p3
[root@server5 ~]# mount /dev/nvme0n1p1 /mnt/beegfs/mgmtd/
[root@server5 ~]# mount /dev/nvme0n1p2 /mnt/beegfs/meta/
[root@server5 ~]# mount /dev/nvme0n1p3 /mnt/beegfs/storage/
```

Management service (beegfs-mgmtd) configuration, on Server5 only.

```
[root@server5 ~]# /opt/beegfs/sbin/beegfs-setup-mgmtd -p /mnt/beegfs/mgmtd
[root@server5 ~]# systemctl restart beegfs-mgmtd
[root@server5 ~]# systemctl status beegfs-mgmtd
● beegfs-mgmtd.service - BeeGFS Management Server
Loaded: loaded (/usr/lib/systemd/system/beegfs-mgmtd.service; enabled; vendor preset: disabled)
Active: active (running) since Sun 2023-06-25 11:22:00 CST; 2 days ago
Docs: http://www.beegfs.com/content/documentation/
Main PID: 18739 (beegfs-mgmtd/Ma)
Tasks: 11 (limit: 45464)
Memory: 13.9M
CGroup: /system.slice/beegfs-mgmtd.service
└─ 18739 /opt/beegfs/sbin/beegfs-mgmtd cfgFile=/etc/beegfs/beegfs-mgmtd.conf runDaemonized=false
Jun 25 11:22:00 server5 systemd[1]: Started BeeGFS Management Server.
```

Metadata service configuration.

Server4

```
[root@server4 ~]# /opt/beegfs/sbin/beegfs-setup-meta -p /mnt/beegfs/meta -s 54 -m server5
[root@server4 ~]# systemctl restart beegfs-meta
[root@server4 ~]# systemctl status beegfs-meta
● beegfs-meta.service - BeeGFS Metadata Server
Loaded: loaded (/usr/lib/systemd/system/beegfs-meta.service; enabled; vendor preset: disabled)
Active: active (running) since Sun 2023-06-25 16:31:57 CST; 2 days ago
Docs: http://www.beegfs.com/content/documentation/
Main PID: 4444 (beegfs-meta/Mai)
Tasks: 63 (limit: 45901)
Memory: 2.2G
CGroup: /system.slice/beegfs-meta.service
└─ 4444 /opt/beegfs/sbin/beegfs-meta cfgFile=/etc/beegfs/beegfs-meta.conf runDaemonized=false
Jun 25 16:31:57 server4 systemd[1]: Started BeeGFS Metadata Server.
```

Server5

```
[root@server5 ~]# /opt/beegfs/sbin/beegfs-setup-meta -p /mnt/beegfs/meta -s 55 -m server5
[root@server5 ~]# systemctl restart beegfs-meta
[root@server5 ~]# systemctl status beegfs-meta
● beegfs-meta.service - BeeGFS Metadata Server
Loaded: loaded (/usr/lib/systemd/system/beegfs-meta.service; enabled; vendor preset: disabled)
Active: active (running) since Sun 2023-06-25 11:22:16 CST; 2 days ago
Docs: http://www.beegfs.com/content/documentation/
Main PID: 18763 (beegfs-meta/Mai)
Tasks: 87 (limit: 45464)
Memory: 1.7G
CGroup: /system.slice/beegfs-meta.service
└─ 18763 /opt/beegfs/sbin/beegfs-meta cfgFile=/etc/beegfs/beegfs-meta.conf runDaemonized=false
Jun 25 11:22:16 server5 systemd[1]: Started BeeGFS Metadata Server.
```

Storage service configuration.

Server4

```
[root@server4 ~]# /opt/beegfs/sbin/beegfs-setup-storage -p /mnt/beegfs/storage -s 540 -i 5401 -m server5 -f
[root@server4 ~]# systemctl restart beegfs-storage
[root@server4 ~]# systemctl status beegfs-storage
● beegfs-storage.service - BeeGFS Storage Server
Loaded: loaded (/usr/lib/systemd/system/beegfs-storage.service; enabled; vendor preset: disabled)
Active: active (running) since Sun 2023-06-25 15:46:33 CST; 2 days ago
Docs: http://www.beegfs.com/content/documentation/
Main PID: 4197 (beegfs-storage/)
Tasks: 21 (limit: 45901)
Memory: 118.4M
CGroup: /system.slice/beegfs-storage.service
└─ 4197 /opt/beegfs/sbin/beegfs-storage cfgFile=/etc/beegfs/beegfs-storage.conf runDaemonized=false
Jun 25 15:46:33 server4 systemd[1]: Started BeeGFS Storage Server.
```

Server5

```
[root@server5 ~]# /opt/beegfs/sbin/beegfs-setup-storage -p /mnt/beegfs/storage -s 550 -i 5501 -m server5 -f
[root@server5 ~]# systemctl restart beegfs-storage.service
[root@server5 ~]# systemctl status beegfs-storage.service
● beegfs-storage.service - BeeGFS Storage Server
Loaded: loaded (/usr/lib/systemd/system/beegfs-storage.service; enabled; vendor preset: disabled)
Active: active (running) since Sun 2023-06-25 11:29:58 CST; 2 days ago
Docs: http://www.beegfs.com/content/documentation/
Main PID: 18901 (beegfs-storage/)
Tasks: 21 (limit: 45464)
Memory: 124.8M
CGroup: /system.slice/beegfs-storage.service
└─ 18901 /opt/beegfs/sbin/beegfs-storage cfgFile=/etc/beegfs/beegfs-storage.conf runDaemonized=false
Jun 25 11:29:58 server5 systemd[1]: Started BeeGFS Storage Server.
```
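The numeric IDs in the setup commands above follow a pattern based on the last octet of each server's service IP:

- metadata service ID = octet
- storage service ID = octet x 10
- storage target ID = octet x 100 + 1

The guide does not state this convention; it is inferred from its examples. Since the service IPs are unique, the IDs are too. The minimal sketch below (hypothetical helper name) regenerates the commands for a given server, for example when adding a node.

```sh
#!/bin/sh
# print_setup_cmds SERVICE_IP MGMTD_HOST: print (not run) the beegfs-setup-meta
# and beegfs-setup-storage commands using the ID pattern inferred from this
# guide: meta ID = last octet, storage ID = octet*10, target ID = octet*100+1.
print_setup_cmds() {
    octet=${1##*.}
    echo "/opt/beegfs/sbin/beegfs-setup-meta -p /mnt/beegfs/meta -s $octet -m $2"
    echo "/opt/beegfs/sbin/beegfs-setup-storage -p /mnt/beegfs/storage -s $(( octet * 10 )) -i $(( octet * 100 + 1 )) -m $2 -f"
}
```

`print_setup_cmds 172.16.8.54 server5` reproduces exactly the two commands run on Server4 above.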

5.1.3.4 Status Check

[root@server6 ~]# beegfs-check-servers

Management

==========

server5 [ID: 1]: reachable at 172.16.8.55:8008 (protocol: TCP)

Metadata

==========

server4 [ID: 54]: reachable at 172.16.8.54:8005 (protocol: RDMA)

server5 [ID: 55]: reachable at 172.16.8.55:8005 (protocol: RDMA)

Storage

==========

server4 [ID: 540]: reachable at 172.16.8.54:8003 (protocol: RDMA)

server5 [ID: 550]: reachable at 172.16.8.55:8003 (protocol: RDMA)

[root@server6 ~]# beegfs-df

METADATA SERVERS:

TargetID Cap. Pool Total Free % ITotal IFree %

======== ========= ===== ==== = ====== ===== =

54 low 48.9GiB 48.7GiB 99% 3.3M 3.2M 98%

55 low 48.9GiB 48.7GiB 99% 3.3M 3.2M 98%

STORAGE TARGETS:

TargetID Cap. Pool Total Free % ITotal IFree %

======== ========= ===== ==== = ====== ===== =

5401 low 499.8GiB 464.2GiB 93% 262.1M 262.1M 100%

5501 low 499.8GiB 464.2GiB 93% 262.1M 262.1M 100%

[root@server6 ~]# beegfs-ctl --listnodes --nodetype=meta --nicdetails

server4 [ID: 54]

Ports: UDP: 8005; TCP: 8005

Interfaces:

+ enp1s0f0[ip addr: 172.16.8.54; type: RDMA]

+ enp1s0f0[ip addr: 172.16.8.54; type: TCP]

server5 [ID: 55]

Ports: UDP: 8005; TCP: 8005

Interfaces:

+ enp1s0f0[ip addr: 172.16.8.55; type: RDMA]

+ enp1s0f0[ip addr: 172.16.8.55; type: TCP]

Number of nodes: 2

Root: 55

[root@server6 ~]# beegfs-ctl --listnodes --nodetype=storage --nicdetails

server4 [ID: 540]

Ports: UDP: 8003; TCP: 8003

Interfaces:

+ enp1s0f0[ip addr: 172.16.8.54; type: RDMA]

+ enp1s0f0[ip addr: 172.16.8.54; type: TCP]

server5 [ID: 550]

Ports: UDP: 8003; TCP: 8003

Interfaces:

+ enp1s0f0[ip addr: 172.16.8.55; type: RDMA]

+ enp1s0f0[ip addr: 172.16.8.55; type: TCP]

Number of nodes: 2

[root@server6 ~]# beegfs-ctl --listnodes --nodetype=client --nicdetails

5751-649AC71D-server6 [ID: 8]

Ports: UDP: 8004; TCP: 0

Interfaces:

+ enp7s0[ip addr: 172.16.8.56; type: TCP]

+ enp7s0[ip addr: 172.16.8.56; type: RDMA]

Number of nodes: 1

[root@server6 ~]# beegfs-net

mgmt_nodes

=============

server5 [ID: 1]

Connections: TCP: 1 (172.16.8.55:8008);

meta_nodes

=============

server4 [ID: 54]

Connections: RDMA: 4 (172.16.8.54:8005);

server5 [ID: 55]

Connections: RDMA: 4 (172.16.8.55:8005);

storage_nodes

=============

server4 [ID: 540]

Connections: RDMA: 4 (172.16.8.54:8003);

server5 [ID: 550]

Connections: RDMA: 4 (172.16.8.55:8003);
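`beegfs-net` shows the connections the client has actually opened, so it is the place to spot a silent fallback from RDMA to TCP. In the output above, the management connection is TCP, while all metadata and storage connections use RDMA. The sketch below skips `mgmt_nodes` and reports any metadata or storage node that has a TCP connection. It is a hypothetical helper that assumes the section layout shown above.

```shell
# beegfs_tcp_fallback
# Reads `beegfs-net` output on stdin and prints "section node" for every
# metadata or storage node that has a TCP connection, i.e. a possible
# fallback from RDMA. The mgmt_nodes section is skipped because the
# management connection is TCP in the output above. Hypothetical helper.
beegfs_tcp_fallback() {
    awk '
        /^(mgmt|meta|storage)_nodes$/ { section = $1; next }
        /\[ID: [0-9]+\]/              { node = $1; next }
        /Connections:/ && section != "mgmt_nodes" && /TCP:/ { print section, node }
    '
}
```

`beegfs-net | beegfs_tcp_fallback` should print nothing for the output above.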

5.1.4 Mount Test

[root@server6 ~]# df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 1.8G 0 1.8G 0% /dev

tmpfs 1.8G 4.0K 1.8G 1% /dev/shm

tmpfs 1.8G 8.7M 1.8G 1% /run

tmpfs 1.8G 0 1.8G 0% /sys/fs/cgroup

/dev/mapper/rocky-root 9.0G 6.8G 2.2G 76% /

/dev/vda2 994M 431M 564M 44% /boot

/dev/vda1 99M 5.8M 94M 6% /boot/efi

tmpfs 367M 0 367M 0% /run/user/0

beegfs_nodev 1000G 72G 929G 8% /mnt/beegfs
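`df -h` shows the `beegfs_nodev` source mounted on `/mnt/beegfs`. For scripted checks before a benchmark run, the filesystem type in `/proc/mounts` is easier to test than the `df` columns. Below is a minimal sketch of such a check. It assumes the BeeGFS client registers the filesystem type `beegfs`, and the function name is hypothetical. For an interactive view, `findmnt -t beegfs` gives similar information.

```shell
# is_beegfs_mount MOUNTPOINT [MOUNTS_FILE]
# Succeeds if MOUNTPOINT is listed in MOUNTS_FILE (default: /proc/mounts)
# with filesystem type "beegfs"; fails otherwise. Hypothetical helper.
is_beegfs_mount() {
    # /proc/mounts fields: source, mount point, fs type, options, dump, pass
    awk -v mp="$1" '$2 == mp && $3 == "beegfs" { found = 1 }
                    END { exit (found ? 0 : 1) }' "${2:-/proc/mounts}"
}
```

`is_beegfs_mount /mnt/beegfs && echo mounted` fails when the client is not mounted. This guards against a benchmark silently writing its data into the plain `/mnt/beegfs` directory on the 9 GB local root filesystem.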

5.1.5 Install IO500 (IOR & mdtest)

Install OpenMPI.

[root@server6 ~]# mkdir iobench_tools

[root@server6 ~]# cd iobench_tools

# Download the source tarball

[root@server6 iobench_tools]# wget https://download.open-mpi.org/release/open-mpi/v4.1/openmpi-4.1.1.tar.gz

[root@server6 iobench_tools]# tar xvf openmpi-4.1.1.tar.gz

[root@server6 iobench_tools]# cd openmpi-4.1.1

# Build and install

[root@server6 openmpi-4.1.1]# yum install automake gcc gcc-c++ gcc-gfortran

[root@server6 openmpi-4.1.1]# mkdir /usr/local/openmpi

[root@server6 openmpi-4.1.1]# ./configure --prefix=/usr/local/openmpi/

[root@server6 openmpi-4.1.1]# make

[root@server6 openmpi-4.1.1]# make install

# Set environment variables

[root@server6 openmpi-4.1.1]# export PATH=$PATH:/usr/local/openmpi/bin

[root@server6 openmpi-4.1.1]# export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/usr/local/openmpi/lib

# Verify the installation

[root@server6 openmpi-4.1.1]# mpirun --version

mpirun (Open MPI) 4.1.1

Report bugs to http://www.open-mpi.org/community/help/
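Beyond `mpirun --version`, it can help to confirm in one step that every binary the benchmarks need resolves on PATH. Below is a minimal sketch of such a check. It relies only on the POSIX `command -v` builtin, and the helper name is hypothetical.

```shell
# require_cmds CMD...
# Prints "missing: CMD" for every command that cannot be found on PATH and
# returns non-zero if any is missing. Hypothetical helper.
require_cmds() {
    missing=0
    for cmd in "$@"; do
        if ! command -v "$cmd" >/dev/null 2>&1; then
            echo "missing: $cmd"
            missing=1
        fi
    done
    return "$missing"
}
```

Run `require_cmds mpirun mpicc` at this point. Once IOR has been installed and `~/.bashrc` reloaded, run `require_cmds mpirun mpicc ior mdtest`.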

# Run a test program

[root@server6 openmpi-4.1.1]# cd ..

[root@server6 iobench_tools]# echo '#include <mpi.h>
#include <stdio.h>

int main(int argc, char** argv) {
    MPI_Init(&argc, &argv);
    int world_rank;
    MPI_Comm_rank(MPI_COMM_WORLD, &world_rank);
    printf("Hello from process %d\n", world_rank);
    MPI_Finalize();
    return 0;
}' > mpi_hello.c

[root@server6 iobench_tools]# mpicc mpi_hello.c -o mpi_hello

[root@server6 iobench_tools]# mpirun --allow-run-as-root -mca btl ^openib -n 2 ./mpi_hello

Hello from process 0

Hello from process 1

[root@server6 iobench_tools]#
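The hello-world run above succeeds if each rank prints exactly one line. With larger process counts, for example in multi-node runs, checking the output by eye becomes error-prone. The sketch below succeeds only if every rank from 0 to N-1 appears exactly once. It is a hypothetical helper that assumes the exact `Hello from process <rank>` format printed by `mpi_hello.c`.

```shell
# check_mpi_ranks N
# Reads mpi_hello output on stdin and succeeds only if every rank 0..N-1
# printed exactly one "Hello from process <rank>" line. Hypothetical helper.
check_mpi_ranks() {
    awk -v n="$1" '
        /^Hello from process [0-9]+$/ { seen[$4]++ }
        END {
            for (r = 0; r < n; r++)
                if (seen[r] != 1) exit 1
            exit 0
        }'
}
```

Example: `mpirun --allow-run-as-root -mca btl ^openib -n 2 ./mpi_hello | check_mpi_ranks 2 && echo OK`.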

# Add the environment variables to the user's ~/.bashrc

[root@server6 iobench_tools]# tail ~/.bashrc

if [ -f /etc/bashrc ]; then

. /etc/bashrc

fi

export PATH=$PATH:/usr/local/openmpi/bin:/usr/local/ior/bin

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/usr/local/openmpi/lib:/usr/local/ior/lib

export MPI_CC=mpicc

export OMPI_ALLOW_RUN_AS_ROOT=1

export OMPI_ALLOW_RUN_AS_ROOT_CONFIRM=1
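The listing above shows the final state of `~/.bashrc`. These setup steps are often re-run, and appending the exports blindly each time leaves duplicate lines behind. Below is a minimal sketch of an idempotent append that uses `grep -qxF` to match the whole line literally. The helper name is hypothetical.

```shell
# append_once LINE FILE
# Appends LINE to FILE only if FILE does not already contain it as a whole
# line (creating FILE if needed). Hypothetical helper.
append_once() {
    # -q quiet, -x whole-line match, -F fixed string (no regex)
    grep -qxF -- "$1" "$2" 2>/dev/null || printf '%s\n' "$1" >> "$2"
}
```

For example, `append_once 'export OMPI_ALLOW_RUN_AS_ROOT=1' ~/.bashrc` adds the line only on the first call. Use single quotes so that `$PATH` and `$LD_LIBRARY_PATH` are stored unexpanded and are evaluated each time a shell starts.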

[root@server6 iobench_tools]# source ~/.bashrc

Install IOR (which also builds mdtest).

# Download the source code

[root@server6 iobench_tools]# yum install git

[root@server6 iobench_tools]# git clone https://github.com/hpc/ior.git

[root@server6 iobench_tools]# cd ior/

# Build and install

[root@server6 ior]# ./bootstrap

[root@server6 ior]# mkdir /usr/local/ior

[root@server6 ior]# ./configure --prefix=/usr/local/ior/

[root@server6 ior]# make

[root@server6 ior]# make install

# ~/.bashrc already contains /usr/local/ior/bin and /usr/local/ior/lib (added in the OpenMPI step), so just reload it

[root@server6 ior]# source ~/.bashrc

Install IO500.

# Download the source code (from the iobench_tools directory)

[root@server6 ior]# cd ..

[root@server6 iobench_tools]# git clone https://github.com/IO500/io500.git

[root@server6 iobench_tools]# cd io500

# Build and install

[root@server6 io500]# ./prepare.sh

# Dump all available configuration options

[root@server6 io500]# ./io500 --list > config-all.ini

# Custom test configuration

[root@server6 io500]# cat config-beegfs.ini

[global]

datadir = /mnt/beegfs/io500

timestamp-datadir = TRUE

resultdir = ./results

timestamp-resultdir = TRUE

api = POSIX

drop-caches = FALSE

drop-caches-cmd = sudo -n bash -c "echo 3 > /proc/sys/vm/drop_caches"

io-buffers-on-gpu = FALSE

verbosity = 1

scc = TRUE

dataPacketType = timestamp

[debug]

stonewall-time = 30

[ior-easy]

API =

transferSize = 1m

blockSize = 204800m

filePerProc = TRUE

uniqueDir = FALSE

run = TRUE

verbosity =

[ior-easy-write]

API =

run = TRUE

[mdtest-easy]

API =

n = 500000

run = TRUE

[mdtest-easy-write]

API =

run = TRUE

[find-easy]

external-script =

external-mpi-args =

external-extra-args =

nproc =

run = TRUE

pfind-queue-length = 10000

pfind-steal-next = FALSE

pfind-parallelize-single-dir-access-using-hashing = FALSE

[ior-hard]

API =

segmentCount = 500000

collective =

run = TRUE

verbosity =

[ior-hard-write]

API =

collective =

run = TRUE

[mdtest-hard]

API =

n = 500000

files-per-dir =

run = TRUE

[mdtest-hard-write]

API =

run = TRUE

[find]

external-script =

external-mpi-args =

external-extra-args =

nproc =

run = TRUE

pfind-queue-length = 10000

pfind-steal-next = FALSE

pfind-parallelize-single-dir-access-using-hashing = FALSE

[find-hard]

external-script =

external-mpi-args =

external-extra-args =

nproc =

run = FALSE

pfind-queue-length = 10000

pfind-steal-next = FALSE

pfind-parallelize-single-dir-access-using-hashing = FALSE

[mdworkbench-bench]

run = FALSE

[ior-easy-read]

API =

run = TRUE

[mdtest-easy-stat]

API =

run = TRUE

[ior-hard-read]

API =

collective =

run = TRUE

[mdtest-hard-stat]

API =

run = TRUE

[mdtest-easy-delete]

API =

run = TRUE

[mdtest-hard-read]

API =

run = TRUE

[mdtest-hard-delete]

API =

run = TRUE
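IO500 combines the phases configured above into two sub-scores. The bandwidth score is the geometric mean of the ior phases (GiB/s), and the metadata score is the geometric mean of the mdtest and find phases (kIOPS). The total score is the geometric mean of the two. The sketch below recomputes that combination from per-phase numbers, for example to see how much a single phase moves the total. It reads a simple `bw VALUE` / `md VALUE` line format invented for this sketch, not the IO500 result file format. Check which phases count toward the score in the IO500 release you use.

```shell
# io500_score
# Reads "bw <GiB/s>" and "md <kIOPS>" lines on stdin (a format assumed for
# this sketch, not the IO500 result file) and prints the bandwidth score,
# metadata score and total score, all computed as geometric means.
io500_score() {
    awk '
        # Geometric means are taken over logarithms, so values must be > 0.
        ($1 == "bw" || $1 == "md") && $2 <= 0 { bad = 1; next }
        $1 == "bw" { bw_log += log($2); bw_n++ }
        $1 == "md" { md_log += log($2); md_n++ }
        END {
            if (bad || bw_n == 0 || md_n == 0) exit 1
            bw = exp(bw_log / bw_n)       # geometric mean of the ior phases
            md = exp(md_log / md_n)       # geometric mean of mdtest/find phases
            printf "bw=%.3f md=%.3f total=%.3f\n", bw, md, sqrt(bw * md)
        }'
}
```

For example, `printf 'bw 2\nbw 8\nmd 1\nmd 9\n' | io500_score` prints `bw=4.000 md=3.000 total=3.464`.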

5.1.6 Install dbench

[root@server6 iobench_tools]# yum install dbench

5.2 Switch

5.2.1 CX532P-N Configuration Results

532# show running-config

!

class-map ecn_map

match cos 3 4

!

vlan 456

!

policy-map ecn

class ecn_map

wred default_ecn

!

interface ethernet 0/16

breakout 4x25G[10G]

service-policy ecn

switchport access vlan 456

exit

!

interface ethernet 0/17

service-policy ecn

switchport access vlan 456

exit

!

interface ethernet 0/18

service-policy ecn

switchport access vlan 456

exit

!

interface ethernet 0/19

service-policy ecn

switchport access vlan 456

exit

!

ip route 0.0.0.0/0 10.230.1.1 200

!

end

532# show interface priority-flow-control

Port PFC0 PFC1 PFC2 PFC3 PFC4 PFC5 PFC6 PFC7

----------- ------ ------ ------ ------ ------ ------ ------ ------

0/0 - - - enable enable - - -

0/4 - - - enable enable - - -

0/8 - - - enable enable - - -

0/12 - - - enable enable - - -

0/16 - - - enable enable - - -

0/17 - - - enable enable - - -

0/18 - - - enable enable - - -

0/19 - - - enable enable - - -

0/20 - - - enable enable - - -

0/24 - - - enable enable - - -

0/28 - - - enable enable - - -

0/32 - - - enable enable - - -

0/36 - - - enable enable - - -

0/40 - - - enable enable - - -

0/44 - - - enable enable - - -

0/48 - - - enable enable - - -

0/52 - - - enable enable - - -

0/56 - - - enable enable - - -

0/60 - - - enable enable - - -

0/64 - - - enable enable - - -

0/68 - - - enable enable - - -

0/72 - - - enable enable - - -

0/76 - - - enable enable - - -

0/80 - - - enable enable - - -

0/84 - - - enable enable - - -

0/88 - - - enable enable - - -

0/92 - - - enable enable - - -

0/96 - - - enable enable - - -

0/100 - - - enable enable - - -

0/104 - - - enable enable - - -

0/108 - - - enable enable - - -

0/112 - - - enable enable - - -

0/116 - - - enable enable - - -

0/120 - - - enable enable - - -

0/124 - - - enable enable - - -

532# show interface ecn

Port ECN0 ECN1 ECN2 ECN3 ECN4 ECN5 ECN6 ECN7

----------- ------ ------ ------ ------ ------ ------ ------ ------

0/0 - - - - - - - -

0/4 - - - - - - - -

0/8 - - - - - - - -

0/12 - - - - - - - -

0/16 - - - enable enable - - -

0/17 - - - enable enable - - -

0/18 - - - enable enable - - -

0/19 - - - enable enable - - -

0/20 - - - - - - - -

0/24 - - - - - - - -

0/28 - - - - - - - -

0/32 - - - - - - - -

0/36 - - - - - - - -

0/40 - - - - - - - -

0/44 - - - - - - - -

0/48 - - - - - - - -

0/52 - - - - - - - -

0/56 - - - - - - - -

0/60 - - - - - - - -

0/64 - - - - - - - -

0/68 - - - - - - - -

0/72 - - - - - - - -

0/76 - - - - - - - -

0/80 - - - - - - - -

0/84 - - - - - - - -

0/88 - - - - - - - -

0/92 - - - - - - - -

0/96 - - - - - - - -

0/100 - - - - - - - -

0/104 - - - - - - - -

0/108 - - - - - - - -

0/112 - - - - - - - -

0/116 - - - - - - - -

0/120 - - - - - - - -

0/124 - - - - - - - -
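In a typical RoCE setup, ECN marking and PFC are enabled on the same priorities. Here the `ecn_map` class matches CoS 3 and 4, and ports 0/16 to 0/19 show both PFC and ECN enabled on queues 3 and 4. The sketch below checks that consistency over the saved output of the two `show` commands. It assumes the nine-column layout above. The helper name and the approach of saving the output to files are assumptions of this sketch.

```shell
# ecn_without_pfc PFC_FILE ECN_FILE
# PFC_FILE / ECN_FILE hold the saved output of "show interface
# priority-flow-control" and "show interface ecn". Prints "port queue" for
# every queue with ECN enabled but PFC not enabled. Hypothetical helper.
ecn_without_pfc() {
    awk '
        # Data rows look like "0/16 - - - enable enable - - -".
        $1 ~ /^[0-9]+\/[0-9]+$/ && NF == 9 {
            for (q = 0; q <= 7; q++) {
                on = ($(q + 2) == "enable")
                if (NR == FNR) pfc_on[$1, q] = on           # first file: PFC
                else if (on && !pfc_on[$1, q]) print $1, q  # second file: ECN
            }
        }' "$1" "$2"
}
```

For the two tables above, it prints nothing. A line such as `0/17 4` would mean ECN marks traffic on queue 4 of port 0/17 without PFC protecting that queue.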

6 References

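[1] BeeGFS Documentation 7.3.3: Architecture, Quick Start Guide, RDMA Support

[2] IO500 Storage Optimization for High-Performance Computing: Practice and Experience

[3] GitHub: open-mpi/ompi

[4] GitHub: IO500/io500

[5] Application and Performance Evaluation of the AWS FSx Lustre Parallel File System in HPC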