
DPVS offload and performance testing on a Server-Switch (with DPU module)

This document describes how the service offload capability of the DPU card in Asterfusion X-T series switches was verified, and reports the load performance measured after offload. Both the functional verification and the performance test use DPVS, an open-source, DPDK-based, high-performance Layer 4 load balancer, as the offloaded service.

Test environment for DPVS offload

| Hardware | Model | Hardware Specs | Quantity |
|---|---|---|---|
| Switch | X312P-48Y-T | DPU module installed | 1 |
| Server | x86 server | 10G optical port | 4 |
| Optical modules | 10G | SFP+ | 8 |
| Fiber | Multimode | 10G | 4 |

| Software | Version | Source |
|---|---|---|
| Server OS | CentOS Linux release 7.8.2003 (Core) | Open source |
| Switch OS | AsterNOS v3.1 | Provided by Asterfusion |
| DPU module OS | Debian 10.3 (Kernel 4.14.76-17.0.1) | |
| DPDK | 19.11.0 | |
| DPVS | 1.8-8 | Open source |

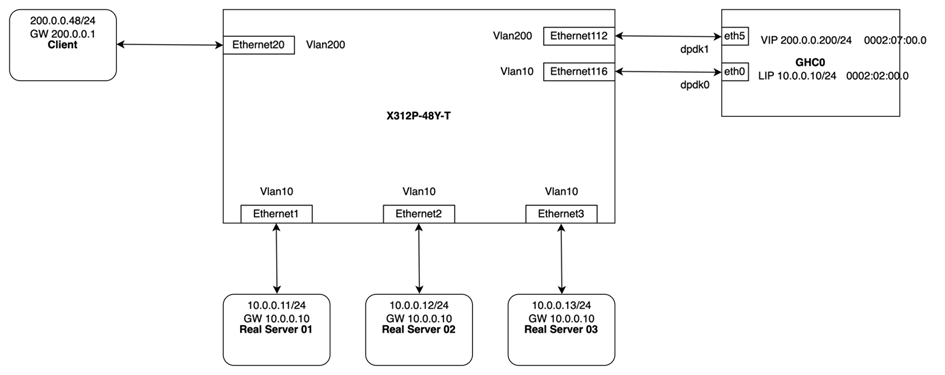

In order to verify the offloading capability of DPVS by DPU bucket card of X-T series switches, this verification uses 4 servers (1 as Client, initiating HTTP requests, and the other 3 as Real Server, providing Web services and responding to HTTP requests) directly connected to the switch, and on the DPU bucket card, compiles and installs DPDK and DPVS, and performs a two-arm Full-NAT mode of Layer 4 load balancing configuration test. The device connection topology for this verification is shown bellow.

In the two-arm mode of DPVS, two VLANs need to be configured on the switch: one forwards packets between the Client and the dpdk1 port on the DPU module, and the other forwards packets between the three back-end Real Servers and the dpdk0 port on the DPU module. The VLAN division, port assignment, port roles and IP configuration are shown below.

DPVS offload testing process

VLAN configuration

admin@sonic:~$ sudo config vlan add 10

admin@sonic:~$ sudo config vlan add 200

admin@sonic:~$ sudo config vlan member add 10 Ethernet1 -u

admin@sonic:~$ sudo config vlan member add 10 Ethernet2 -u

admin@sonic:~$ sudo config vlan member add 10 Ethernet3 -u

admin@sonic:~$ sudo config vlan member add 10 Ethernet116 -u

admin@sonic:~$ sudo config vlan member add 200 Ethernet20 -u

admin@sonic:~$ sudo config vlan member add 200 Ethernet112 -u
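The VLAN layout above can also be written down as data and expanded into the same commands, which makes the port-to-VLAN mapping easier to review. The Python sketch below is only an illustration: `VLAN_MEMBERS` and `vlan_commands` are hypothetical names, and the VLAN IDs and port names are copied from the commands above.

```python
# Hypothetical helper: expand a VLAN -> ports mapping into the
# `config vlan` commands used in this test (members added with -u).
VLAN_MEMBERS = {
    10: ["Ethernet1", "Ethernet2", "Ethernet3", "Ethernet116"],
    200: ["Ethernet20", "Ethernet112"],
}


def vlan_commands(members):
    """Return the VLAN creation commands followed by the member commands."""
    commands = [f"sudo config vlan add {vlan}" for vlan in members]
    for vlan, ports in members.items():
        commands += [
            f"sudo config vlan member add {vlan} {port} -u" for port in ports
        ]
    return commands


print("\n".join(vlan_commands(VLAN_MEMBERS)))
```

Keeping the mapping in one place makes it straightforward to check that each VLAN contains exactly the ports intended for it before the commands are run on the switch.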

Compile and install DPDK and DPVS on the DPU module

# Configuring the compilation environment

root@OCTEONTX:~# apt-get install libpopt0 libpopt-dev libnl-3-200 libnl-3-dev libnl-genl-3-dev libpcap-dev

root@OCTEONTX:~# tar xvf linux-custom.tgz

root@OCTEONTX:~# ln -s `pwd`/linux-custom /lib/modules/`uname -r`/build

# Compiling DPDK

root@OCTEONTX:~# cd /var/dpvs/

root@OCTEONTX:/var/dpvs# tar xvf dpdk-19.11.0_raw.tar.bz2

root@OCTEONTX:/var/dpvs# cd dpdk-19.11.0

root@OCTEONTX:/var/dpvs/dpdk-19.11.0# export TARGET="arm64-octeontx2-linux-gcc"

root@OCTEONTX:/var/dpvs/dpdk-19.11.0# export RTE_SDK=`pwd`

root@OCTEONTX:/var/dpvs/dpdk-19.11.0# export RTE_TARGET="build"

root@OCTEONTX:/var/dpvs/dpdk-19.11.0# export PATH="${PATH}:$RTE_SDK/usertools"

root@OCTEONTX:/var/dpvs/dpdk-19.11.0# make config T=arm64-octeontx2-linux-gcc

root@OCTEONTX:/var/dpvs/dpdk-19.11.0# sed -i 's/CONFIG_RTE_LIBRTE_PMD_PCAP=n/CONFIG_RTE_LIBRTE_PMD_PCAP=y/g' $RTE_SDK/build/.config

root@OCTEONTX:/var/dpvs/dpdk-19.11.0# make -j

# Compiling DPVS

root@OCTEONTX:~# cd /var/dpvs/

root@OCTEONTX:/var/dpvs# tar xvf dpvs.tar

root@OCTEONTX:/var/dpvs# cd dpvs/

root@OCTEONTX:/var/dpvs/dpvs# patch -p1 < dpvs_5346e4c645c_with_dpdk.patch

root@OCTEONTX:/var/dpvs/dpvs# make -j

root@OCTEONTX:/var/dpvs/dpvs# make install

# Load the kernel module, reserve hugepage memory, and bind the DPDK driver to the specified ports

root@OCTEONTX:~# cd /var/dpvs

root@OCTEONTX:/var/dpvs# insmod /var/dpvs/dpdk-19.11.0/build/build/kernel/linux/kni/rte_kni.ko carrier=on

root@OCTEONTX:/var/dpvs# echo 128 > /sys/kernel/mm/hugepages/hugepages-2048kB/nr_hugepages

root@OCTEONTX:/var/dpvs# mount -t hugetlbfs nodev /mnt/huge -o pagesize=2M

root@OCTEONTX:/var/dpvs# dpdk-devbind.py -b vfio-pci 0002:02:00.0

root@OCTEONTX:/var/dpvs# dpdk-devbind.py -b vfio-pci 0002:07:00.0

root@OCTEONTX:/var/dpvs# dpdk-devbind.py -s

Network devices using DPDK-compatible driver

============================================

0002:02:00.0 'Device a063' drv=vfio-pci unused=

0002:07:00.0 'Device a063' drv=vfio-pci unused=

Network devices using kernel driver

===================================

0000:01:10.0 'Device a059' if= drv=octeontx2-cgx unused=vfio-pci

0000:01:10.1 'Device a059' if= drv=octeontx2-cgx unused=vfio-pci

0000:01:10.2 'Device a059' if= drv=octeontx2-cgx unused=vfio-pci

……

root@OCTEONTX:/var/dpvs#
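As a quick sanity check on the hugepage reservation above, the size of the pool is simply the page count multiplied by the page size. The Python sketch below does that arithmetic; the function name `hugepage_pool_mib` is hypothetical, and the two constants are taken from the `echo 128 > .../hugepages-2048kB/nr_hugepages` command.

```python
# Size of the hugepage pool reserved by writing to nr_hugepages.
HUGEPAGE_SIZE_KB = 2048  # from the hugepages-2048kB directory name
NR_HUGEPAGES = 128       # value written to nr_hugepages above


def hugepage_pool_mib(pages, page_size_kb):
    """Return the total reserved hugepage memory in MiB."""
    return pages * page_size_kb // 1024


print(hugepage_pool_mib(NR_HUGEPAGES, HUGEPAGE_SIZE_KB))  # 256
```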

Configuring Load Balancing Services

root@OCTEONTX:/var/dpvs# ./dpvs/bin/dpvs -- -w 0002:02:00.0 -w 0002:07:00.0

root@OCTEONTX:/var/dpvs#

root@OCTEONTX:/var/dpvs# ./dpvs/bin/dpip link set dpdk0 link up

root@OCTEONTX:/var/dpvs# ./dpvs/bin/dpip link set dpdk1 link up

root@OCTEONTX:/var/dpvs# ./dpvs/bin/dpip addr add 10.0.0.10/32 dev dpdk0 sapool

root@OCTEONTX:/var/dpvs# ./dpvs/bin/dpip addr add 200.0.0.200/32 dev dpdk1

root@OCTEONTX:/var/dpvs# ./dpvs/bin/dpip route add 10.0.0.0/24 dev dpdk0

root@OCTEONTX:/var/dpvs# ./dpvs/bin/dpip route add 200.0.0.0/24 dev dpdk1

root@OCTEONTX:/var/dpvs#

root@OCTEONTX:/var/dpvs# ./dpvs/bin/ipvsadm -A -t 200.0.0.200:80 -s rr

root@OCTEONTX:/var/dpvs# ./dpvs/bin/ipvsadm -a -t 200.0.0.200:80 -r 10.0.0.11 -b

root@OCTEONTX:/var/dpvs# ./dpvs/bin/ipvsadm -a -t 200.0.0.200:80 -r 10.0.0.12 -b

root@OCTEONTX:/var/dpvs# ./dpvs/bin/ipvsadm -a -t 200.0.0.200:80 -r 10.0.0.13 -b

root@OCTEONTX:/var/dpvs# ./dpvs/bin/ipvsadm --add-laddr -z 10.0.0.10 -t 200.0.0.200:80 -F dpdk0

root@OCTEONTX:/var/dpvs#

root@OCTEONTX:/var/dpvs# ./dpvs/bin/ipvsadm -G

VIP:VPORT TOTAL SNAT_IP CONFLICTS CONNS

200.0.0.200:80 1

10.0.0.10 0 0

root@OCTEONTX:/var/dpvs# ./dpvs/bin/ipvsadm -ln

IP Virtual Server version 0.0.0 (size=0)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 200.0.0.200:80 rr

-> 10.0.0.11:80 FullNat 1 0 0

-> 10.0.0.12:80 FullNat 1 0 0

-> 10.0.0.13:80 FullNat 1 0 0

root@OCTEONTX:/var/dpvs#
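The configuration above combines round-robin scheduling (`-s rr`) with Full-NAT forwarding (`-b`) and a single local address (10.0.0.10). As a rough mental model, and not DPVS's actual implementation, the Python sketch below picks Real Servers in turn and rewrites both the source and the destination of each client request. The `FullNatService` class, the client port, and the local port numbering are assumptions made purely for illustration.

```python
from itertools import cycle


class FullNatService:
    """Toy model of a round-robin Full-NAT virtual service (illustrative only)."""

    def __init__(self, vip, local_ip, real_servers):
        self.vip = vip
        self.local_ip = local_ip
        self._next_rs = cycle(real_servers)  # round-robin order
        self._next_lport = 1025              # hypothetical local port counter

    def forward(self, client_ip, client_port):
        """Return the (source, destination) pair the Real Server would see."""
        rs_ip, rs_port = next(self._next_rs)
        lport = self._next_lport
        self._next_lport += 1
        # Full-NAT: the source becomes the local address and the
        # destination becomes the chosen Real Server.
        return (self.local_ip, lport), (rs_ip, rs_port)


svc = FullNatService(
    vip=("200.0.0.200", 80),
    local_ip="10.0.0.10",
    real_servers=[("10.0.0.11", 80), ("10.0.0.12", 80), ("10.0.0.13", 80)],
)
for _ in range(4):
    print(svc.forward("200.0.0.48", 40000))
```

Because the source address is rewritten to the local address, the Real Servers see requests arriving from 10.0.0.10 rather than from the Client.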

Configuring Network and Web Services for 3 Real Servers

# Real Server 01

[root@node-01 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether b8:59:9f:42:36:69 brd ff:ff:ff:ff:ff:ff

inet 10.0.0.11/24 brd 10.0.0.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::ba59:9fff:fe42:3669/64 scope link

valid_lft forever preferred_lft forever

[root@node-01 ~]# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 10.0.0.10 0.0.0.0 UG 0 0 0 eth0

10.0.0.0 0.0.0.0 255.255.255.0 U 0 0 0 eth0

[root@node-01 ~]# cat index.html

Real Server 01

[root@node-01 ~]# python -m SimpleHTTPServer 80

Serving HTTP on 0.0.0.0 port 80 ...

10.0.0.10 - - [23/Dec/2022 02:57:18] "GET / HTTP/1.1" 200 -

# Real Server 02

[root@node-02 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 68:91:d0:64:02:f1 brd ff:ff:ff:ff:ff:ff

inet 10.0.0.12/24 brd 10.0.0.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::6a91:d0ff:fe64:2f1/64 scope link

valid_lft forever preferred_lft forever

[root@node-02 ~]# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 10.0.0.10 0.0.0.0 UG 0 0 0 eth0

10.0.0.0 0.0.0.0 255.255.255.0 U 0 0 0 eth0

[root@node-02 ~]# python -m SimpleHTTPServer 80

Serving HTTP on 0.0.0.0 port 80 ...

10.0.0.10 - - [23/Dec/2022 08:16:40] "GET / HTTP/1.1" 200 -

# Real Server 03

[root@node-03 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master ac state UP group default qlen 1000

link/ether b8:59:9f:c7:73:cb brd ff:ff:ff:ff:ff:ff

inet6 fe80::ba59:9fff:fec7:73cb/64 scope link

valid_lft forever preferred_lft forever

[root@node-03 ~]# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 10.0.0.10 0.0.0.0 UG 0 0 0 eth1

10.0.0.0 0.0.0.0 255.255.255.0 U 0 0 0 eth1

[root@node-03 ~]# python -m SimpleHTTPServer 80

Serving HTTP on 0.0.0.0 port 80 ...

10.0.0.10 - - [23/Dec/2022 08:16:39] "GET / HTTP/1.1" 200 -
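The Real Servers above use the Python 2 `SimpleHTTPServer` module to serve an `index.html` that contains the server's name. On a system with only Python 3, a comparable test backend could be sketched with the standard `http.server` module as shown below. The `make_server` helper is a hypothetical name, and returning the name directly instead of reading `index.html` is a simplification.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer


def make_server(name, port=80, host="0.0.0.0"):
    """Build an HTTP server that answers every GET with the server's name."""
    body = (name + "\n").encode()

    class Handler(BaseHTTPRequestHandler):
        def do_GET(self):
            self.send_response(200)
            self.send_header("Content-Type", "text/plain")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

    return HTTPServer((host, port), Handler)


# Example (binding to port 80 normally requires root):
# make_server("Real Server 01").serve_forever()
```

Giving each backend a distinct response body is what makes it possible to see from the Client which Real Server handled a request.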

Configuring the Client’s network

[root@node-00 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether b8:59:9f:42:36:68 brd ff:ff:ff:ff:ff:ff

inet 200.0.0.48/24 brd 200.0.0.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::ba59:9fff:fe42:3668/64 scope link

valid_lft forever preferred_lft forever

[root@node-00 ~]# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 200.0.0.1 0.0.0.0 UG 0 0 0 eth0

200.0.0.0 0.0.0.0 255.255.255.0 U 0 0 0 eth0

[root@node-00 ~]#

Results of DPVS offloading to Server-switch (Asterfusion X-T Series)

The test successfully offloaded DPVS, the high-performance Layer 4 load balancing software, to the DPU module of the Asterfusion X-T series switch. The Client's access results show that when the Client requests http://200.0.0.200, the packet is first forwarded to the DPU module of the switch and then handed to DPVS, which forwards it to one of the three web servers according to the preset rules.

# On the Client side, use curl to access http://<VIP> to verify the load balancing effect of DPVS

[root@node-00 ~]# curl http://200.0.0.200

Real Server 01

[root@node-00 ~]# curl http://200.0.0.200

Real Server 02

[root@node-00 ~]# curl http://200.0.0.200

Real Server 03
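With more than a handful of requests, checking the distribution by eye becomes tedious. The Python sketch below shows one way the response bodies could be tallied and checked for a strict round-robin pattern. The helper names `summarize` and `is_round_robin` are hypothetical, and the `observed` list simply repeats the three responses shown above.

```python
from collections import Counter


def summarize(responses):
    """Count how many responses each Real Server returned."""
    return Counter(r.strip() for r in responses)


def is_round_robin(responses, n_servers):
    """True if every window of n_servers consecutive responses hits distinct servers."""
    names = [r.strip() for r in responses]
    return all(
        len(set(names[i:i + n_servers])) == n_servers
        for i in range(len(names) - n_servers + 1)
    )


observed = ["Real Server 01", "Real Server 02", "Real Server 03"]
print(summarize(observed))
print(is_round_robin(observed, 3))
```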

1. Maximum Forwarding Performance of Single Stream with Different Cores in Full-NAT Mode

The maximum forwarding performance of DPVS with different core configurations is shown in the table below.

| Cores | 1 | 4 | 8 | 16 | 23 |
|---|---|---|---|---|---|
| DPVS (Gbps) | 0.77 | 2.66 | 5.16 | 10.01 | 14.16 |
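To see how well throughput scales with core count, the figures in the table can be divided by the number of cores and compared with the single-core result. The Python sketch below does this; the `scaling` function is a hypothetical helper, and the input values are copied from the table above.

```python
cores = [1, 4, 8, 16, 23]
gbps = [0.77, 2.66, 5.16, 10.01, 14.16]


def scaling(cores, gbps):
    """Return (cores, Gbps per core, efficiency relative to the first entry)."""
    base = gbps[0] / cores[0]
    rows = []
    for c, g in zip(cores, gbps):
        per_core = g / c
        rows.append((c, round(per_core, 3), round(per_core / base, 2)))
    return rows


for row in scaling(cores, gbps):
    print(row)
```

By this measure, per-core throughput falls from 0.77 Gbps with one core to roughly 0.62 Gbps with 23 cores, which is about 80% of the single-core figure.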

2. 16-core stable forwarding performance in Full-NAT mode

Using a traffic tester, we measured the stable forwarding performance of DPVS without packet loss at different packet lengths, running each test for 5 minutes.

| Packet length (bytes) | 78 | 128 | 256 | 512 |
|---|---|---|---|---|
| DPVS (Gbps) | 9.6 | 13.6 | 21.6 | 31.2 |

3. Stable forwarding performance of 23 cores in Full-NAT mode

Using a traffic tester, we measured the stable forwarding performance of DPVS without packet loss at different packet lengths, running each test for 5 minutes.

| Packet length (bytes) | 78 | 128 | 256 | 512 |
|---|---|---|---|---|
| DPVS (Gbps) | 12.2 | 17.7 | 27.9 | 42.8 |
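The throughput figures in the 16-core and 23-core tables can be converted to packet rates, which are often easier to compare across packet lengths. The Python sketch below is a minimal example under one stated assumption: the reported Gbps values count only the packet bytes. The source does not say whether the tester reports a Layer 1 or Layer 2 rate, so the hypothetical `overhead_bytes` parameter is provided to add per-packet overhead if needed.

```python
def mpps(gbps, packet_len_bytes, overhead_bytes=0):
    """Packet rate in millions of packets per second.

    overhead_bytes=20 would account for the Ethernet preamble and
    inter-frame gap if the reported rate were a line (Layer 1) rate.
    """
    bits_per_packet = (packet_len_bytes + overhead_bytes) * 8
    return gbps * 1e9 / bits_per_packet / 1e6


# Values copied from the two tables above: packet length -> Gbps.
results_16 = {78: 9.6, 128: 13.6, 256: 21.6, 512: 31.2}
results_23 = {78: 12.2, 128: 17.7, 256: 27.9, 512: 42.8}

for label, table in (("16 cores", results_16), ("23 cores", results_23)):
    for length, rate in table.items():
        print(label, length, round(mpps(rate, length), 2))
```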

4. Multi-core connectivity performance in Full-NAT mode

Using the traffic tester, we sent traffic with a 64-byte packet length at a 100 Gbps rate and measured the maximum number of connections DPVS can establish per second with different core counts.

| Cores | 1 | 4 | 8 | 16 | 23 |
|---|---|---|---|---|---|
| Max connections established per second | 220,000 | 550,000 | 940,000 | 1,630,000 | 2,030,000 |