
Introduction to VirtIO technology development and DPU SmartNIC Offload

I/O virtualization solutions – VirtIO

Virtualization is the foundation of IaaS cloud computing, and virtual machines have an immediate need for block storage such as EBS. There are three existing I/O virtualization solutions:

- Fully virtualized (emulated) I/O devices: devices emulated entirely in software by QEMU, with poor performance;

- Paravirtualized (semi-virtualized) I/O devices: devices implemented with the VirtIO framework, which avoids most of the trap-and-emulate overhead of full emulation and improves performance;

- PCI device pass-through: the best performance of the three, with almost no performance loss.
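
To make the three options concrete, here is a minimal sketch of how each one might be selected on a QEMU command line, using a network card as the example device. The helper name `qemu_nic_args`, the user-mode network back end, and the PCI address `0000:03:00.0` are illustrative assumptions, not taken from any particular deployment.

```python
from typing import List


def qemu_nic_args(mode: str, host_pci_addr: str = "0000:03:00.0") -> List[str]:
    """Return QEMU arguments that attach a guest NIC in the given mode.

    host_pci_addr is a placeholder; a real host would use the address of a
    device that has already been bound to the vfio-pci driver.
    """
    if mode == "emulated":
        # Full emulation: QEMU models an Intel e1000 NIC purely in software.
        return ["-netdev", "user,id=net0", "-device", "e1000,netdev=net0"]
    if mode == "virtio":
        # Paravirtualization: the guest loads a virtio-net front-end driver.
        return ["-netdev", "user,id=net0", "-device", "virtio-net-pci,netdev=net0"]
    if mode == "passthrough":
        # PCI pass-through: a host PCI function is handed to the guest via VFIO.
        return ["-device", f"vfio-pci,host={host_pci_addr}"]
    raise ValueError(f"unknown mode: {mode}")


if __name__ == "__main__":
    for mode in ("emulated", "virtio", "passthrough"):
        print(mode, " ".join(qemu_nic_args(mode)))
```

Only the `-device` model changes between the first two modes, which is why moving a guest from an emulated device to VirtIO is mostly a matter of having the VirtIO driver available in the guest.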

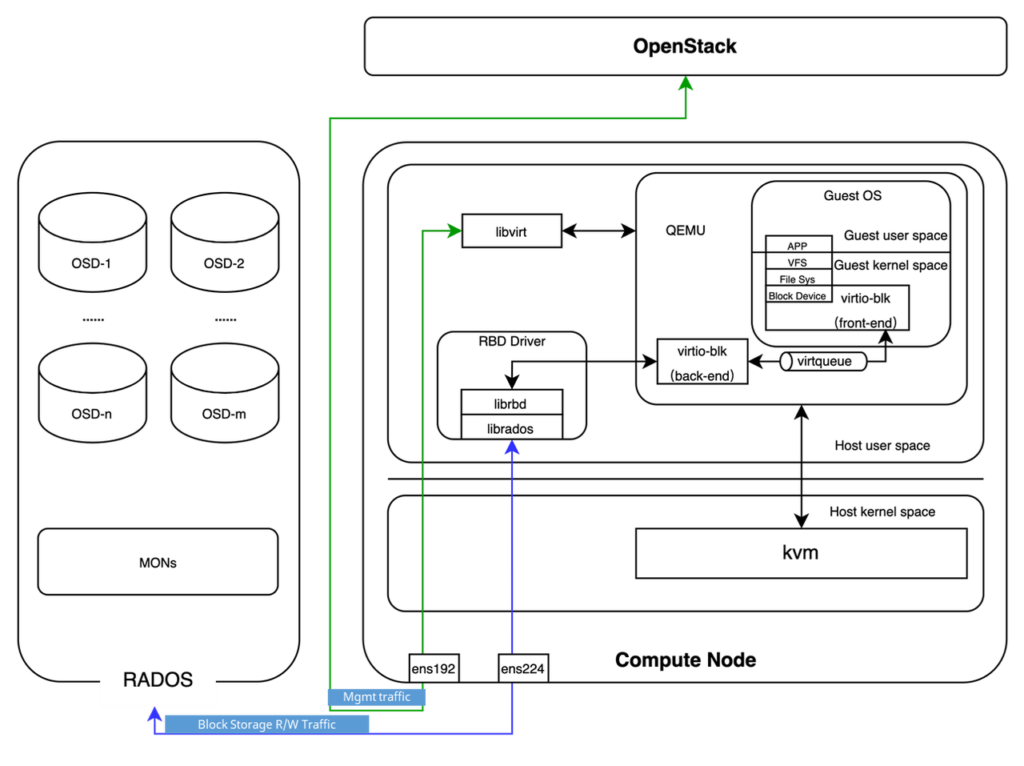

Compared with full virtualization and PCI device pass-through, VirtIO is the more common choice among large cloud vendors today. A typical compute-node software architecture is shown in the following diagram: OpenStack Cinder provides the Elastic Block Storage (EBS) service for virtual machines and can connect to the open source distributed storage system Ceph, using it as the EBS storage back end.

In the above architecture, the VirtIO front end and back end and Ceph’s RBD driver all run on the host and consume its general-purpose compute resources. The industry has therefore started introducing DPUs into VirtIO solutions to offload the VirtIO back end.

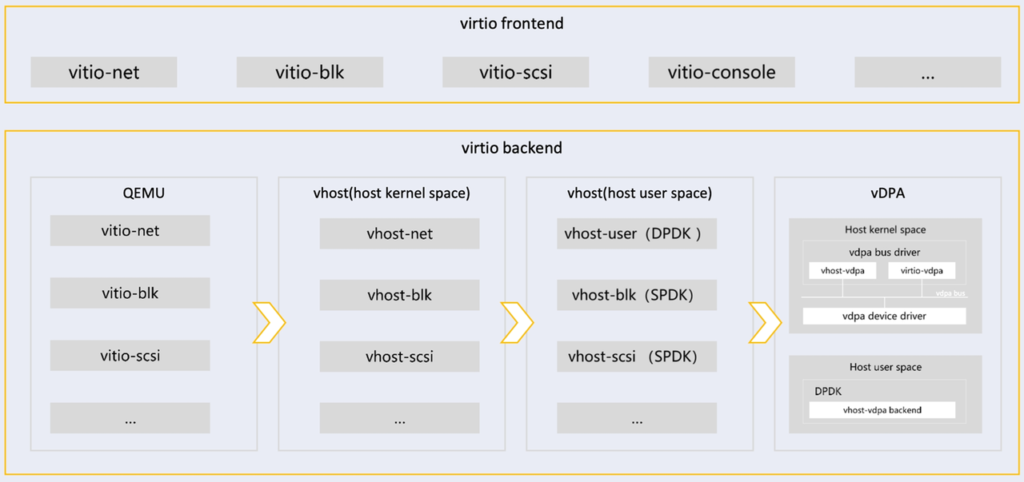

The standard framework for DPU acceleration – vDPA (Virtio Data Path Acceleration)

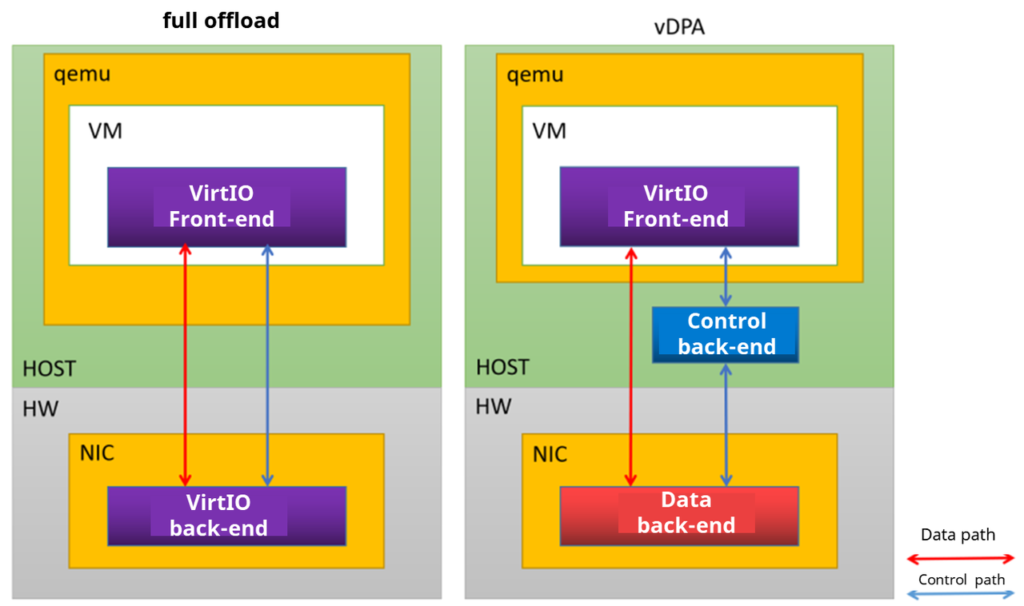

Virtio offload currently has two implementation options: full offload and vDPA.

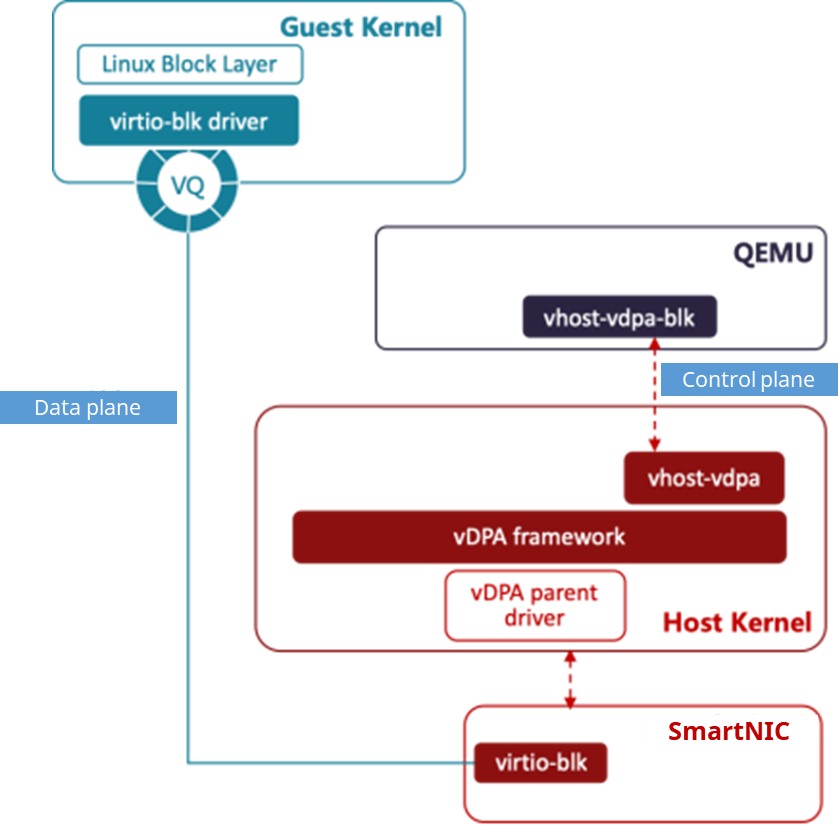

Of the two, the full offload solution implements the entire VirtIO back end in hardware, while the vDPA solution decouples the data path from the control path and implements only the data-path back end in hardware.

Let’s focus on vDPA (Virtio Data Path Acceleration). vDPA is a virtual I/O path acceleration scheme based on Virtio offload; it was proposed by Red Hat and merged into the mainline Linux kernel in 2020.
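
Because vDPA lives in the mainline kernel, a host can be inspected for vDPA devices through sysfs. The sketch below assumes the kernel's vDPA bus is enabled and exposes its devices under `/sys/bus/vdpa/devices`; the function name `list_vdpa_devices` is hypothetical, and on a host without vDPA support it simply returns an empty list.

```python
from pathlib import Path
from typing import List


def list_vdpa_devices(bus_dir: str = "/sys/bus/vdpa/devices") -> List[str]:
    """Return the names of vDPA devices registered on the kernel's vDPA bus."""
    root = Path(bus_dir)
    if not root.is_dir():
        # No vDPA bus on this host (module not loaded or kernel too old).
        return []
    return sorted(entry.name for entry in root.iterdir())


if __name__ == "__main__":
    print(list_vdpa_devices())
```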

The vDPA scheme decouples the data path and the control path between the VirtIO front end and back end: the data-path back end (“data back end” in the above figure) is offloaded to the physical NIC, while the control-path back end (“control back end” in the above figure) stays in the kernel or DPDK.
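
The split described above can be illustrated with a toy model. This is a simplified sketch, not how any driver or DPU firmware is written: the class names are hypothetical, the virtqueue keeps Python objects instead of guest memory addresses, and the used ring records the number of bytes handled rather than the bytes written by the device. The feature bit numbers follow the VirtIO specification.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Feature bits as numbered in the VirtIO specification.
VIRTIO_NET_F_CSUM = 1 << 0
VIRTIO_NET_F_MAC = 1 << 5
VIRTIO_F_VERSION_1 = 1 << 32


@dataclass
class SplitVirtqueue:
    """Toy split virtqueue: descriptor table, available ring, used ring."""
    size: int
    desc: Dict[int, bytes] = field(default_factory=dict)        # id -> buffer
    avail: List[int] = field(default_factory=list)              # ids offered by the driver
    used: List[Tuple[int, int]] = field(default_factory=list)   # (id, bytes handled)

    def driver_add(self, payload: bytes) -> int:
        """Front end: publish one buffer and return its descriptor id."""
        if len(self.desc) >= self.size:
            raise BufferError("virtqueue full")
        head = next(i for i in range(self.size) if i not in self.desc)
        self.desc[head] = payload
        self.avail.append(head)
        return head


class ControlBackend:
    """Control path: stays in host software (kernel or DPDK in the text above)."""

    def __init__(self, offered_features: int) -> None:
        self.offered = offered_features
        self.negotiated = 0

    def negotiate(self, driver_features: int) -> int:
        # Only features supported by both sides are enabled.
        self.negotiated = self.offered & driver_features
        return self.negotiated


class DataBackend:
    """Data path: the part that vDPA moves into hardware."""

    def __init__(self, handler: Callable[[bytes], None]) -> None:
        self.handler = handler

    def poll(self, vq: SplitVirtqueue) -> int:
        """Consume every available buffer and report it on the used ring."""
        done = 0
        while vq.avail:
            head = vq.avail.pop(0)
            payload = vq.desc.pop(head)
            self.handler(payload)
            vq.used.append((head, len(payload)))
            done += 1
        return done
```

In this model, swapping `DataBackend` for a hardware implementation would not change `SplitVirtqueue` or the front end at all, which is the property that lets the guest keep using a standard VirtIO driver.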

The vDPA solution combines the high performance of the VFIO pass-through solution with the flexibility and broad compatibility of VirtIO, offering the following advantages:

- Little to no performance loss on the virtual I/O path, and no additional host compute resources consumed;

- The control plane can be defined by the vendor, which greatly simplifies adapting the NIC control plane;

- The data plane follows the VirtIO standard, so it is widely compatible and hides the underlying hardware from the guest;

- Live migration of virtual machines is supported.

Compared with the full offload solution, vDPA can be considered the future direction: it can be used not only for virtual machines but also for containers and bare metal servers, which is the ideal state for cloud vendors.

Offloading the VirtIO data back end to a DPU SmartNIC with vDPA

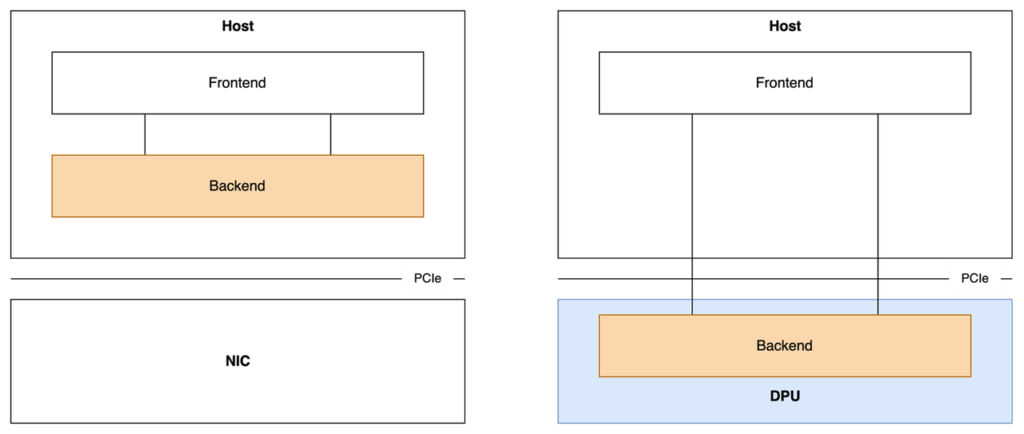

When a DPU is introduced, the VirtIO back end can be offloaded to the DPU card, as shown in the figure above.

The Helium DPU card is built on a high-performance DPU chip. It complies with the PCIe and Ethernet protocols, provides a PCIe Gen3.0/4.0 x16 interface, and supports up to 100 Gbps of multi-functional service processing. You can contact us for more information.

Visit https://asterfusion.com/product/helium_dpu/